Evaluating the Tool Utilization Capabilities of LLMs: The T-Eval Benchmark

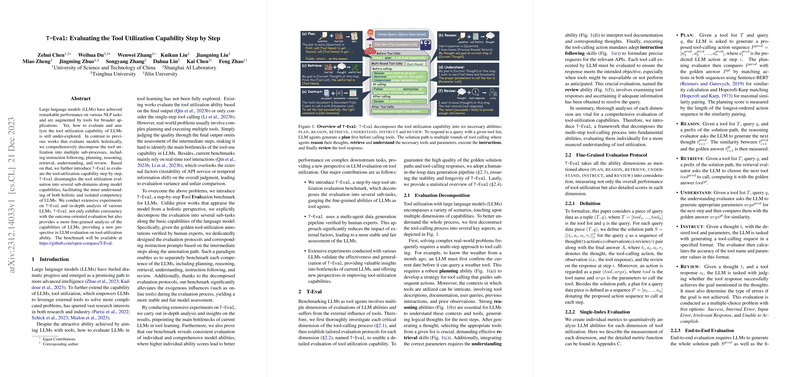

The paper "T-Eval: Evaluating the Tool Utilization Capability of LLMs Step by Step" presents a framework for evaluating how well LLMs use external tools. Rather than scoring only the final outcome, the authors decompose tool utilization into six processes: instruction following, planning, reasoning, retrieval, understanding, and review. This decomposition makes it possible to assess each capability both in isolation and as part of the complete workflow, revealing where a model's interaction with the external world succeeds or breaks down.
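To make the decomposition concrete, here is a minimal Python sketch of how per-step scores could be rolled up into a per-dimension capability profile. The names (`Dimension`, `StepScore`, `capability_profile`, `overall_score`), the 0-1 score scale, and the unweighted averaging are illustrative assumptions, not T-Eval's actual code or aggregation rule.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class Dimension(Enum):
    """The six tool-use processes named in the T-Eval paper."""
    INSTRUCT = "instruction following"
    PLAN = "planning"
    REASON = "reasoning"
    RETRIEVE = "retrieval"
    UNDERSTAND = "understanding"
    REVIEW = "review"


@dataclass(frozen=True)
class StepScore:
    dimension: Dimension
    score: float  # assumption: every step is scored on a 0-1 scale


def capability_profile(scores: list[StepScore]) -> dict[Dimension, float]:
    """Average step scores per dimension so each weakness shows up separately."""
    buckets: dict[Dimension, list[float]] = {}
    for s in scores:
        buckets.setdefault(s.dimension, []).append(s.score)
    return {dim: mean(vals) for dim, vals in buckets.items()}


def overall_score(profile: dict[Dimension, float]) -> float:
    """Assumption: an unweighted mean over dimensions as one summary number."""
    return mean(profile.values())


if __name__ == "__main__":
    scores = [
        StepScore(Dimension.PLAN, 0.8),
        StepScore(Dimension.PLAN, 0.6),
        StepScore(Dimension.RETRIEVE, 1.0),
        StepScore(Dimension.REVIEW, 0.0),
    ]
    profile = capability_profile(scores)
    for dim, value in profile.items():
        print(f"{dim.value}: {value:.2f}")
    print(f"overall: {overall_score(profile):.2f}")
```

Keeping the profile as a dictionary rather than collapsing it immediately into one number is the point of the design: a model with a strong overall score can still show a near-zero value in a single dimension such as review.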

Key Contributions

- Introduction of the T-Eval Benchmark: The central contribution of this research is the T-Eval benchmark itself. Whereas traditional evaluations focus predominantly on the end result or on single-step tool interactions, T-Eval analyzes an LLM's tool-utilization ability along multiple dimensions, giving more precise insight into where a model excels or falters.

- Detailed Evaluation Protocols: The benchmark defines a separate evaluation protocol for each tool-utilization dimension: instruction following, planning, reasoning, retrieval, understanding, and review. Each dimension is scored with its own metrics, so the evaluation reflects the specific skill under test rather than a single aggregate outcome.

- Stabilizing Evaluation via Human-Verified Annotations: To keep evaluations stable and fair, the gold-standard annotations are produced with a human-in-the-loop process. Because models are judged against these verified references, the results are less sensitive to the variance introduced by real-time tool interactions and unstable external APIs.

- Extensive Experimental Validation: By testing a range of LLMs on T-Eval, the paper both demonstrates the benchmark's effectiveness and generalizability and identifies major limitations in the tool-use capabilities of current LLMs.
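The stabilizing role of the human-verified annotations can be sketched as follows, under the assumption that every step is graded while conditioned on the annotated gold history rather than on the model's own earlier outputs or on live tool responses. `GoldStep`, `evaluate_stepwise`, the toy model, and the exact-match scorer are hypothetical stand-ins; T-Eval defines its own dimension-specific metrics, which this sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interfaces: a "model" maps (gold history, current prompt) to a
# response string, and a "scorer" compares a response with the gold answer.
History = list[tuple[str, str]]
Model = Callable[[History, str], str]
Scorer = Callable[[str, str], float]


@dataclass(frozen=True)
class GoldStep:
    prompt: str  # what the model is asked at this step (hypothetical field)
    answer: str  # human-verified reference response for this step


def exact_match(prediction: str, gold: str) -> float:
    """Simplest possible scorer; a real benchmark uses task-specific metrics."""
    return 1.0 if prediction.strip() == gold.strip() else 0.0


def evaluate_stepwise(model: Model, steps: list[GoldStep],
                      scorer: Scorer = exact_match) -> list[float]:
    """Score each step separately by conditioning on the gold history.

    No real tool or API is called: earlier turns are replayed from the
    annotations, so a flaky API or an early model mistake cannot change
    the context that later steps are graded in.
    """
    history: History = []
    scores = []
    for step in steps:
        prediction = model(list(history), step.prompt)
        scores.append(scorer(prediction, step.answer))
        # Append the gold answer, not the prediction, before the next step.
        history.append((step.prompt, step.answer))
    return scores


if __name__ == "__main__":
    steps = [
        GoldStep("Which tool should be called?", "WeatherAPI"),
        GoldStep("With which arguments?", '{"city": "Paris"}'),
    ]

    def toy_model(history: History, prompt: str) -> str:
        # A stand-in model: right about the tool, wrong about the arguments.
        return "WeatherAPI" if not history else '{"city": "London"}'

    print(evaluate_stepwise(toy_model, steps))  # [1.0, 0.0]
```

Because the gold answer is appended to the history, the second step would be graded in the same context even if the first step had been wrong, which is what allows errors to be attributed to a specific step and dimension.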

Implications for Future Research

The introduction of T-Eval paves the way for more refined evaluation methodologies for LLMs. Because the benchmark separates the distinct processes involved in tool use, it supports targeted improvements and training regimens, which could lead to more robust, general-purpose LLMs. Its emphasis on fine-grained analysis and stable evaluation may also shape how LLMs are trained and deployed, accelerating progress in applications that depend on tool utilization.

Concluding Reflections and Future Directions

By building a new framework for LLM evaluation around tool utilization, the paper lays a substantive foundation for both theoretical exploration and practical advancement. Its methodology also reaches beyond evaluation: per-dimension results suggest ways to improve LLM training through feedback targeted at specific skills.

Looking ahead, the T-Eval framework may evolve to cover a wider array of tools and interactions as real-world applications diversify. Insights from T-Eval could likewise guide architectural improvements in LLMs, helping models meet the demands of interacting with complex external systems in dynamic environments.

In conclusion, "T-Eval: Evaluating the Tool Utilization Capability of LLMs Step by Step" sets a strong precedent for the fine-grained evaluation of LLMs' tool-utilization capabilities, marking a significant step forward in both machine cognition and applied AI research.