Comprehensive Survey on Retrieval-Augmented Generation for AI-Generated Content

Introduction to Retrieval-Augmented Generation (RAG)

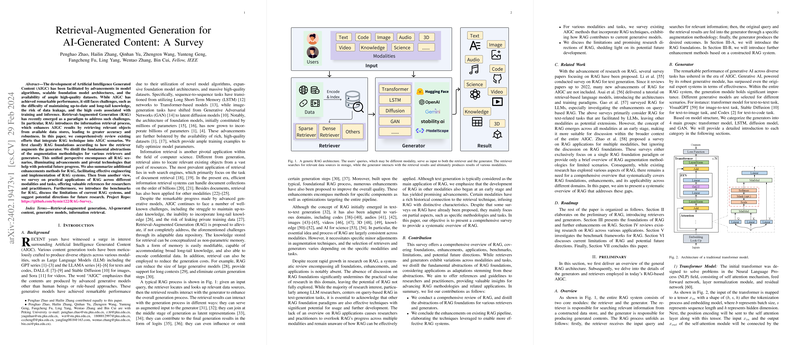

In Artificial Intelligence Generated Content (AIGC), Retrieval-Augmented Generation (RAG) has emerged as an important paradigm that improves generative models by incorporating relevant external information through retrieval. Despite its impact across modalities and tasks, a holistic review of RAG's foundational strategies, enhancements, applications, and benchmarks has been missing. This survey aims to bridge that gap with an overview of RAG's development, covering its methodologies, enhancements, applications, and potential future directions.

Methodologies in RAG

RAG methodologies can be broadly classified into four main paradigms, based on how retrieval augments generation:

- Query-based RAG: Also known as prompt augmentation. Retrieved information is integrated directly into the generator's input before generation begins.

- Latent Representation-based RAG: Centers on the interaction between generative models and the latent representations of retrieved objects to improve content quality during generation.

- Logit-based RAG: Combines retrieved information with the generator's predictions at the logit level (the inputs to the final softmax function) at each decoding step.

- Speculative RAG: Uses retrieval to replace certain generation steps where possible, saving compute and reducing response latency.
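
Two of these paradigms can be illustrated with a minimal sketch. The code below is not from the survey: the bag-of-words retriever, the prompt template, the function names, and the mixing weight `lam` are all assumptions chosen for illustration. `augment_prompt` shows query-based RAG by prepending retrieved passages to the input, and `interpolate` shows one possible form of logit-level fusion, mixing next-token probabilities in the style of kNN-LM interpolation.

```python
import math
from collections import Counter


def bow(text: str) -> Counter:
    """Lowercased bag-of-words counts for a toy lexical retriever (assumption)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query."""
    q = bow(query)
    ranked = sorted(corpus, key=lambda p: cosine(q, bow(p)), reverse=True)
    return ranked[:k]


def augment_prompt(query: str, passages: list[str]) -> str:
    """Query-based RAG: place retrieved passages in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    # The template below is hypothetical; real systems tune it per model.
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


def interpolate(
    p_model: dict[str, float],
    p_retrieved: dict[str, float],
    lam: float = 0.3,
) -> dict[str, float]:
    """Output-level fusion: mix the generator's next-token distribution
    with a retrieval-derived distribution using weight lam."""
    vocab = set(p_model) | set(p_retrieved)
    return {
        t: (1 - lam) * p_model.get(t, 0.0) + lam * p_retrieved.get(t, 0.0)
        for t in vocab
    }
```

In the query-based case the generator itself is untouched and only its input changes, whereas the fusion step has to run at every decoding step, which is why the two paradigms have different cost profiles.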

Enhancements in RAG

Enhancements to RAG aim to improve the efficiency and effectiveness of each stage of the pipeline:

- Input Enhancement: Techniques such as query transformation and data augmentation to refine the initial input for better retrieval results.

- Retriever Enhancement: Strategies such as recursive retrieval, chunk optimization, and fine-tuning the retriever to improve the quality and relevance of retrieved content.

- Generator Enhancement: Methods such as prompt engineering and decoding tuning that help the generator produce higher-quality output.

- Result Enhancement: Techniques such as output rewriting that make the final generated content more accurate and factually correct.

- RAG Pipeline Enhancement: Approaches aimed at optimizing the entire RAG pipeline, including adaptive retrieval and iterative RAG for efficient processing and improved results.
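
A few of the enhancements listed above can be sketched in code. This is an illustrative sketch under stated assumptions, not a method taken from the survey: the overlapping word-window chunker, the confidence threshold used to trigger retrieval, and the `retrieve` / `generate` callables passed to the iterative loop are all hypothetical placeholders.

```python
from typing import Callable


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Chunk optimization: split text into overlapping word windows."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


def should_retrieve(token_probs: list[float], threshold: float = 0.5) -> bool:
    """Adaptive retrieval: retrieve only if some draft token is low-confidence.
    The threshold rule is an assumed trigger, one of several possible ones."""
    return any(p < threshold for p in token_probs)


def iterative_rag(
    query: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> tuple[str, list[str]]:
    """Iterative RAG: alternate retrieval and generation, feeding the
    current answer back into the next retrieval query."""
    context: list[str] = []
    answer = ""
    for _ in range(max_rounds):
        new = [p for p in retrieve(f"{query} {answer}") if p not in context]
        if not new and answer:
            break  # retrieval has converged; nothing new to add
        context.extend(new)
        answer = generate(query, context)
    return answer, context
```

The loop stops early once retrieval returns nothing new, so `max_rounds` only bounds the worst case.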

Applications of RAG

RAG's adaptability makes it applicable across a wide range of domains and tasks, including text, code, audio, image, video, and 3D content generation, as well as knowledge incorporation. In each of these applications, RAG can improve content relevance, accuracy, and overall quality by drawing on external information retrieved in real time.

Benchmarks and Current Limitations

RAG systems are evaluated on benchmarks that measure aspects such as noise robustness, negative rejection, and information integration. Several limitations remain, including noise in retrieval results, the extra overhead that retrieval adds, and the complex interaction between the retrieval and generation components. Constraints on long-context generation present a further obstacle.
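
To make two of these evaluation aspects concrete, the sketch below computes simple proxies for them. It is an assumed formulation rather than the scoring code of any particular benchmark: the `Example` record, the fixed `REFUSAL` string, and the substring-match notion of correctness are all hypothetical simplifications.

```python
from dataclasses import dataclass

# Assumed refusal message the system is expected to emit when the
# retrieved documents do not contain the answer.
REFUSAL = "I cannot answer based on the provided documents."


@dataclass
class Example:
    prediction: str    # system output
    gold: str          # reference answer
    has_support: bool  # True if some retrieved document contains the answer


def accuracy_on_supported(examples: list[Example]) -> float:
    """Proxy for noise robustness: accuracy on examples where the answer
    is present among (possibly noisy) retrieved documents."""
    supported = [e for e in examples if e.has_support]
    if not supported:
        return 0.0
    hits = sum(e.gold.lower() in e.prediction.lower() for e in supported)
    return hits / len(supported)


def negative_rejection_rate(examples: list[Example]) -> float:
    """Share of unsupported examples where the system correctly refuses."""
    unsupported = [e for e in examples if not e.has_support]
    if not unsupported:
        return 0.0
    refusals = sum(e.prediction == REFUSAL for e in unsupported)
    return refusals / len(unsupported)
```

Splitting the examples by `has_support` keeps the two scores independent: a system that always refuses would score well on rejection but poorly on supported accuracy.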

Future Directions

The future development of RAG could focus on more advanced research methodologies, efficient deployment and processing, incorporating long-tail and real-time knowledge, and combining RAG with other techniques to enhance generative models further.

Conclusion

RAG continues to evolve as a way to improve the quality of AIGC. By addressing its current limitations and exploring new enhancements and applications, RAG stands to contribute significantly to the advancement of AIGC across many domains.