Infini-gram: Scaling Unbounded n-gram Language Models to a Trillion Tokens (2401.17377v4)

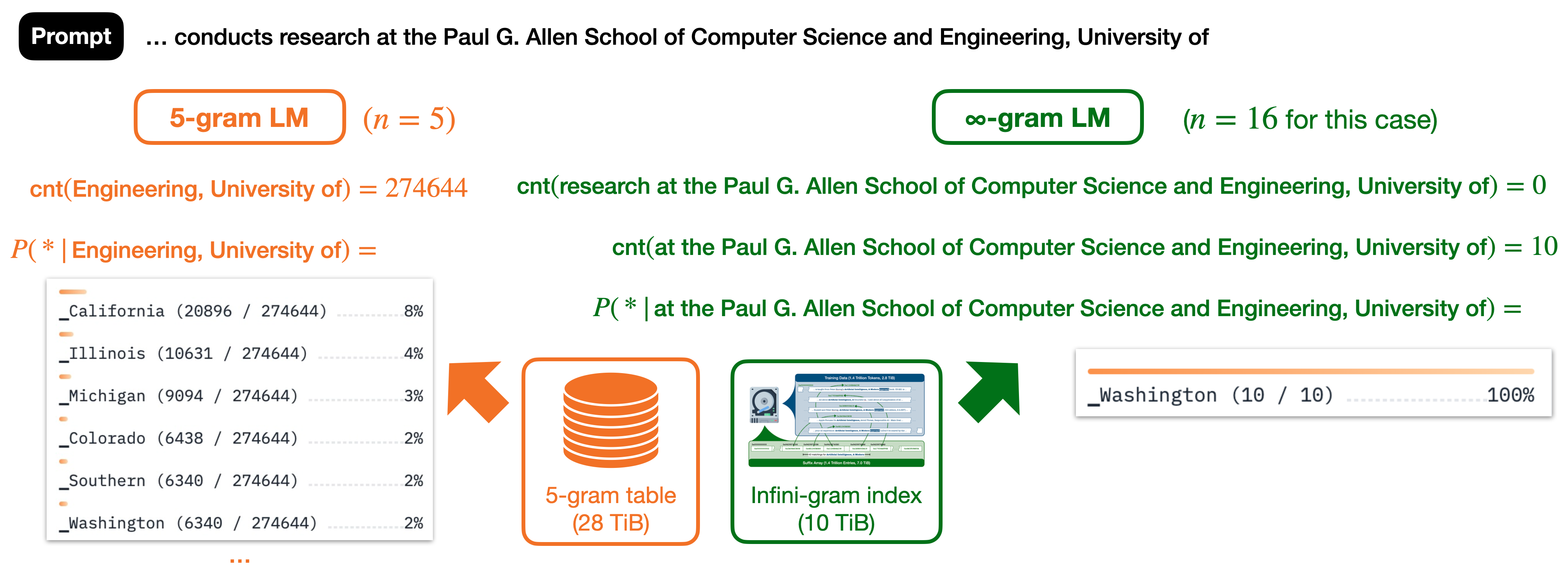

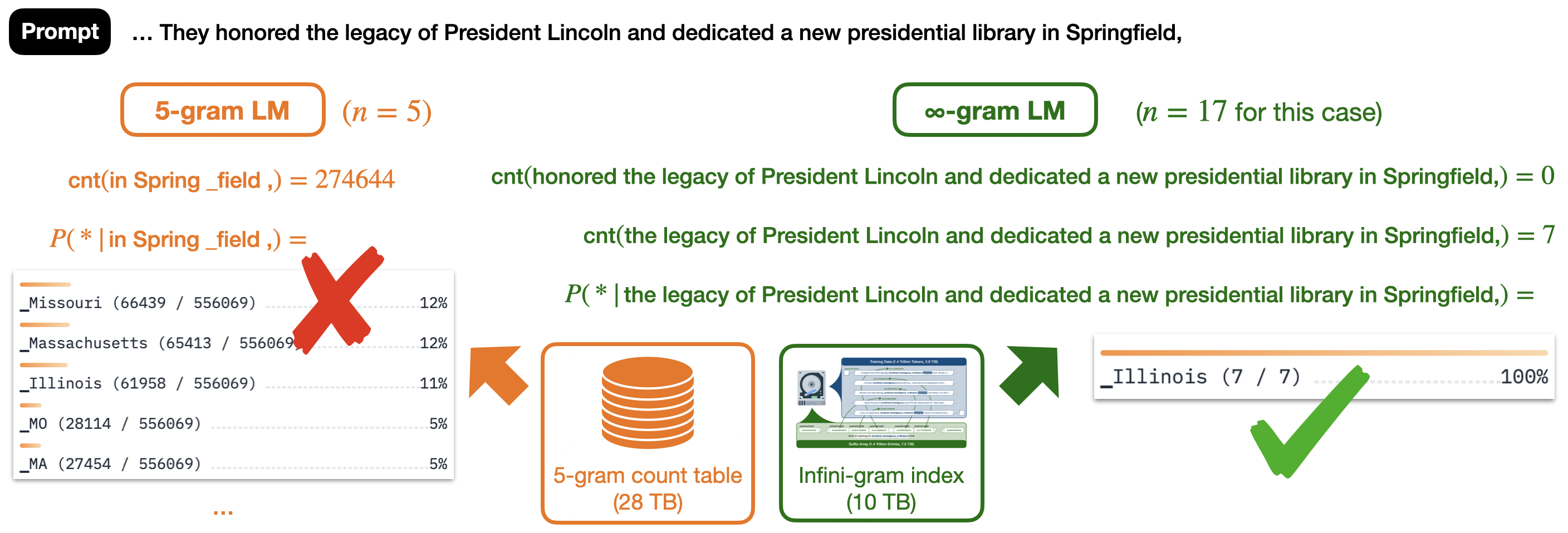

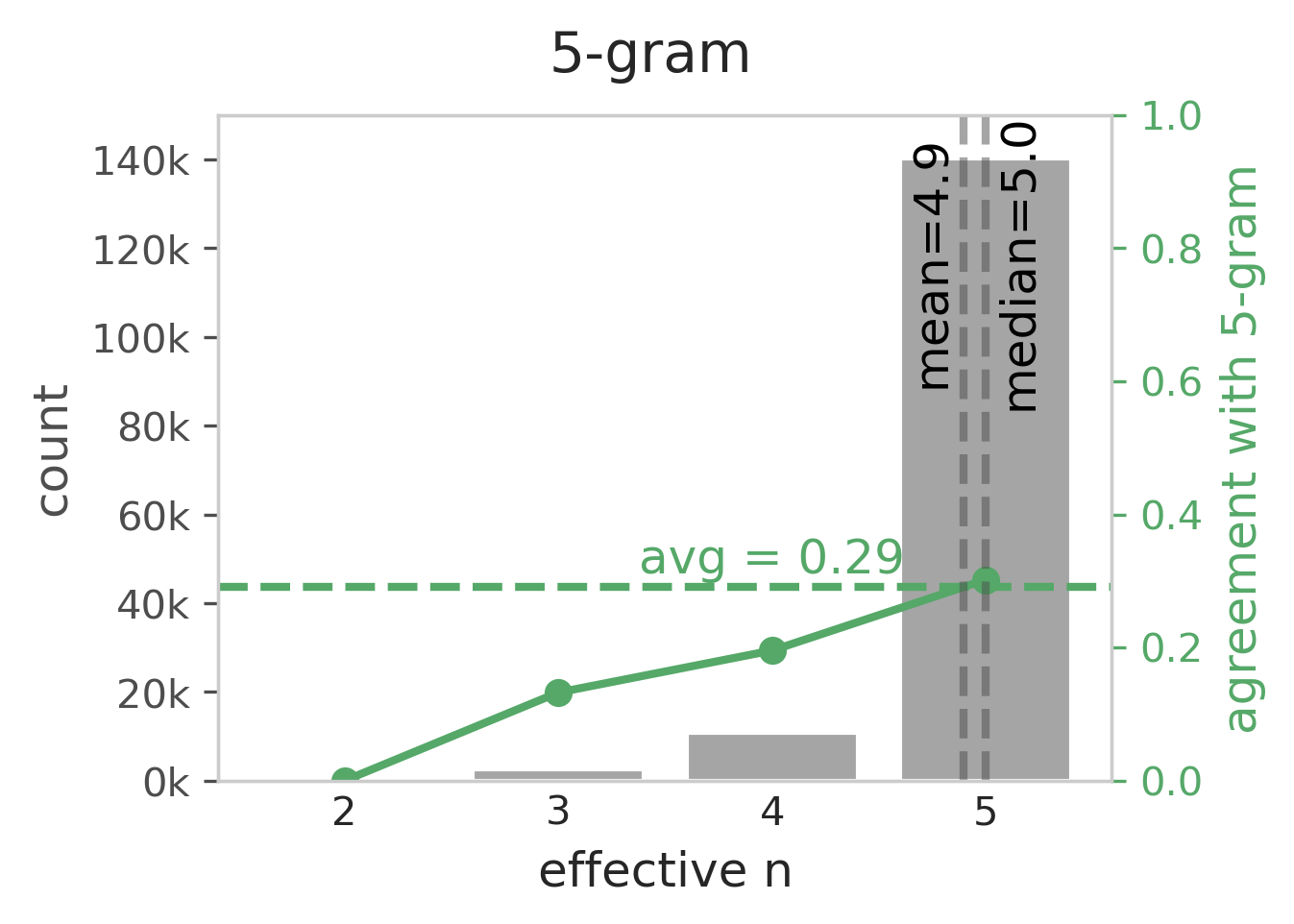

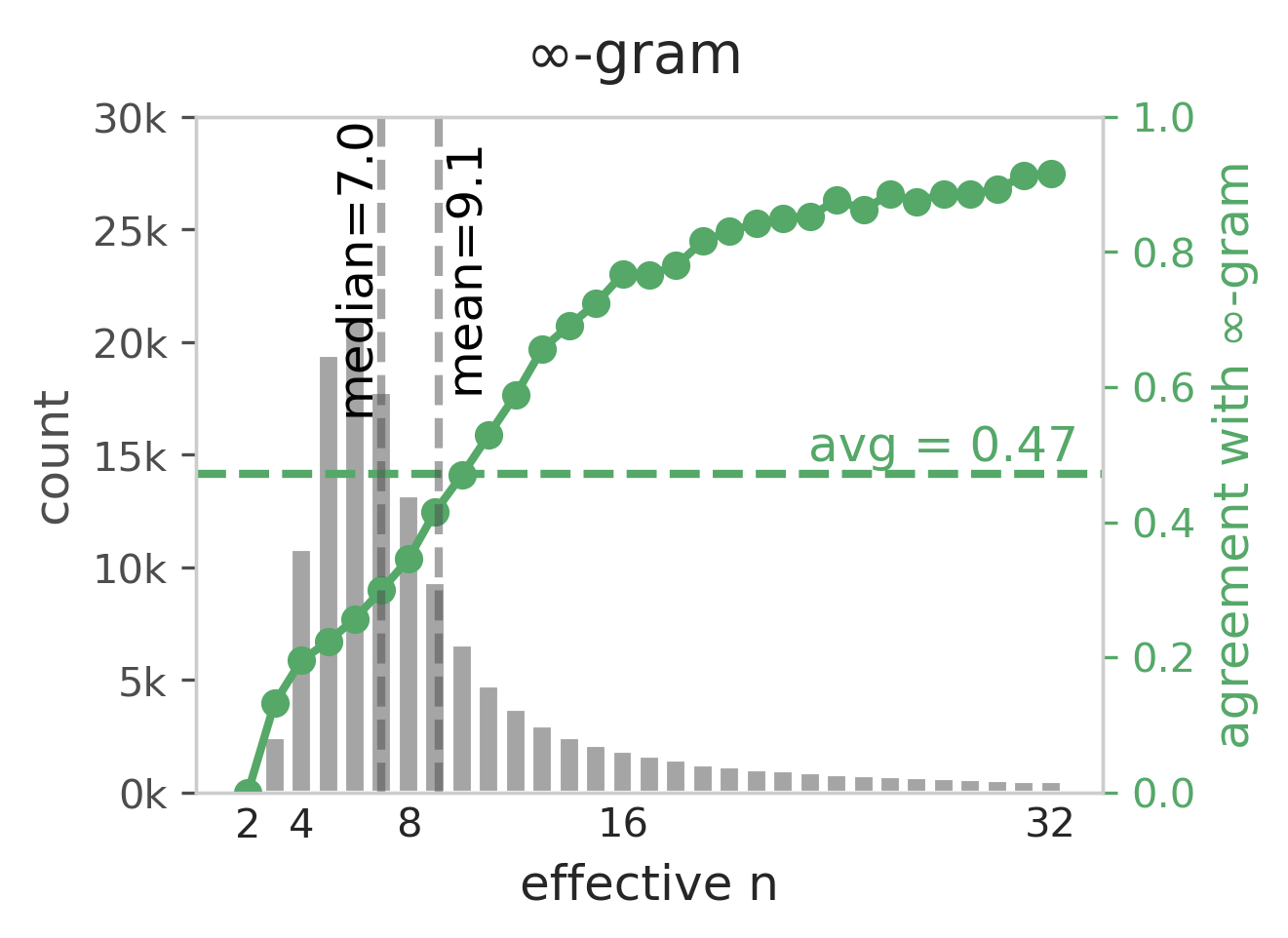

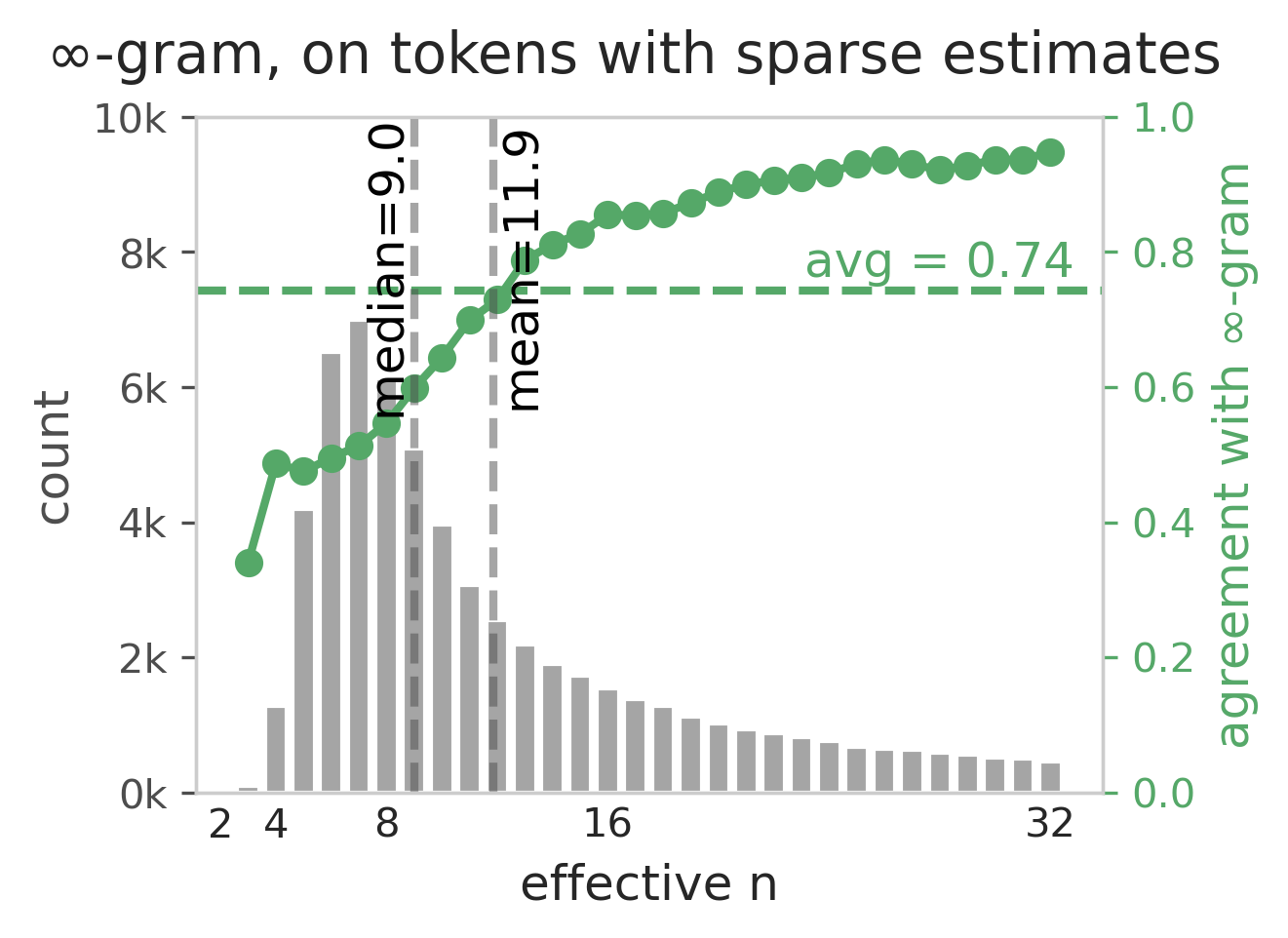

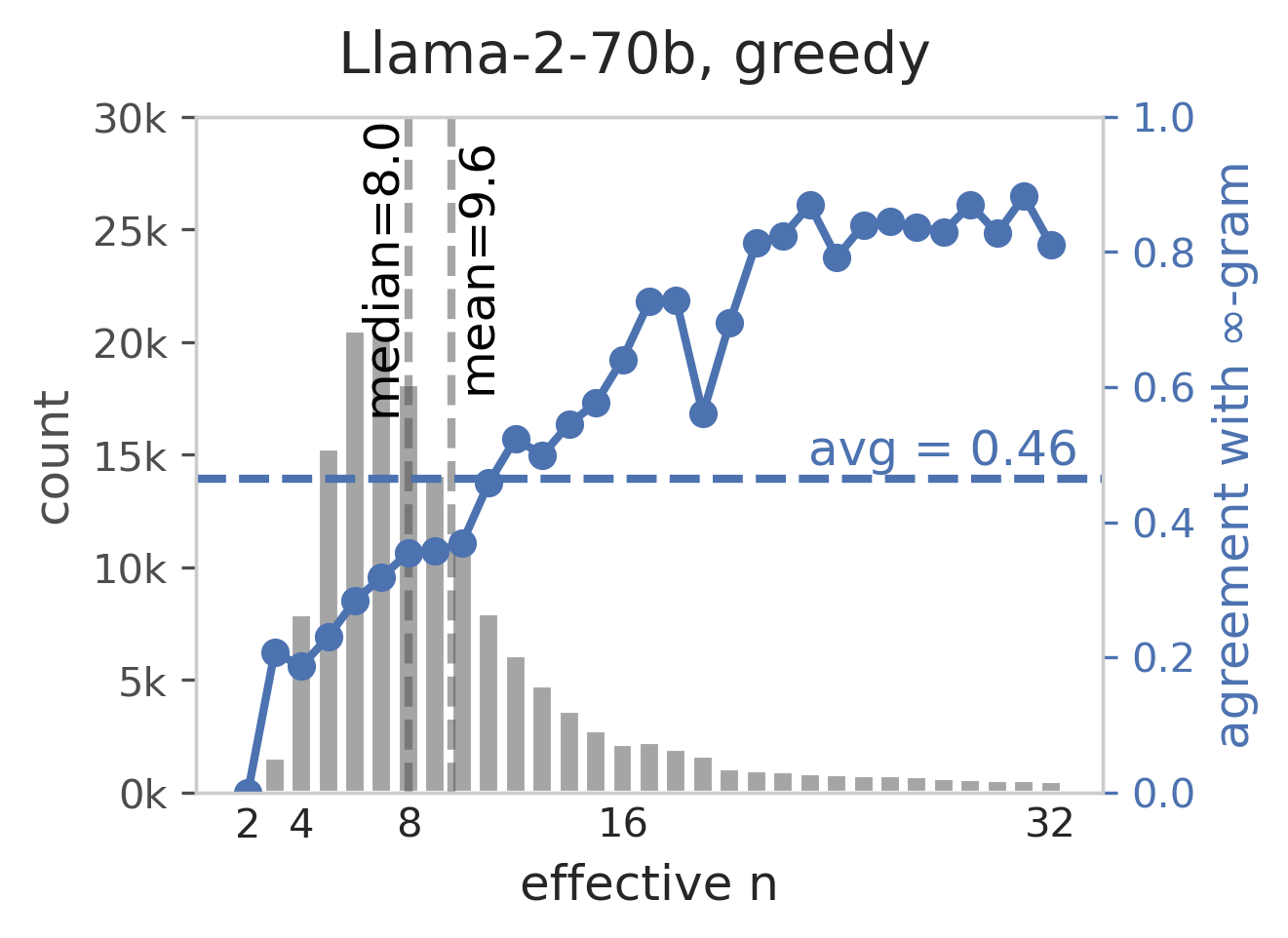

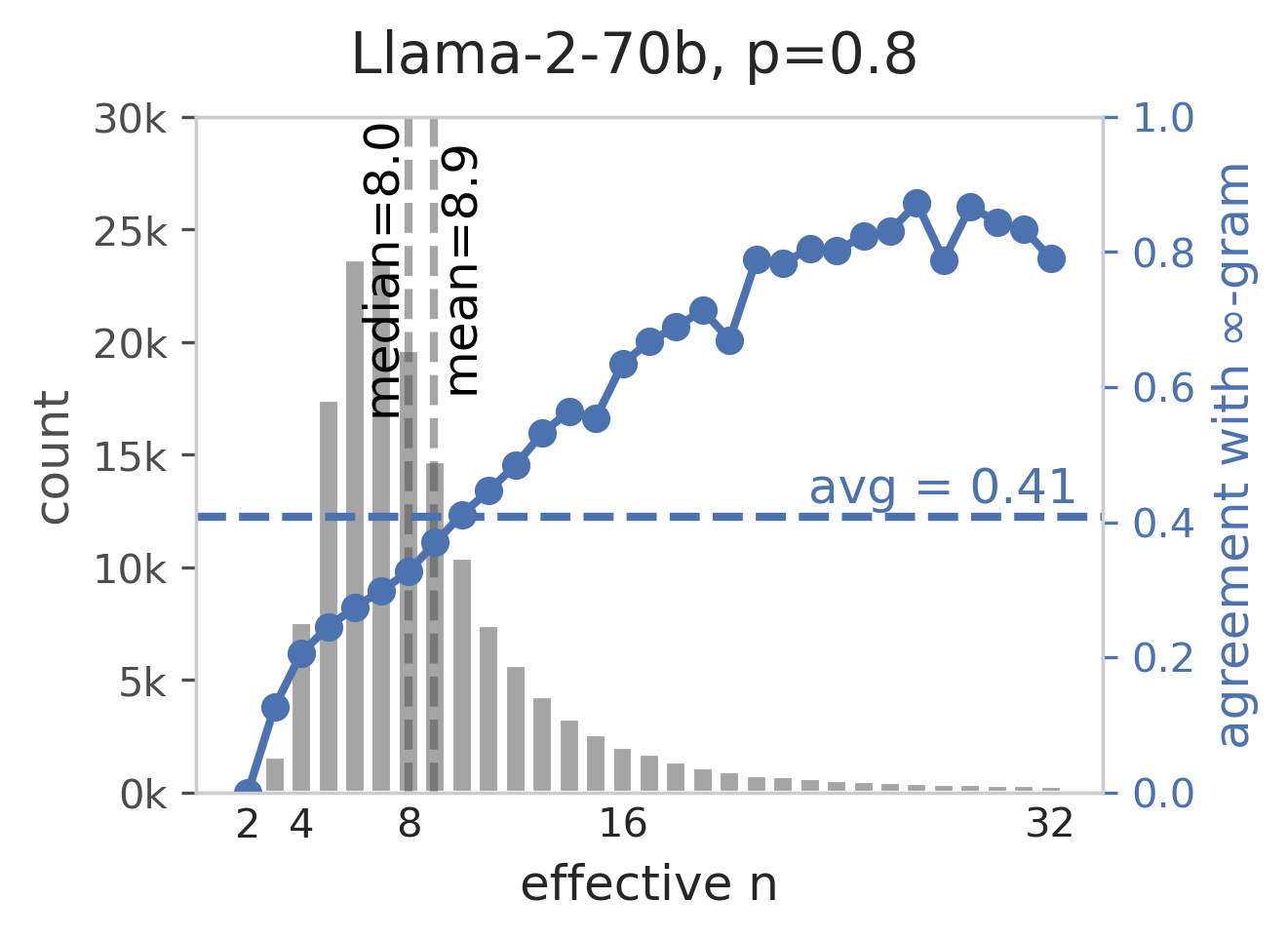

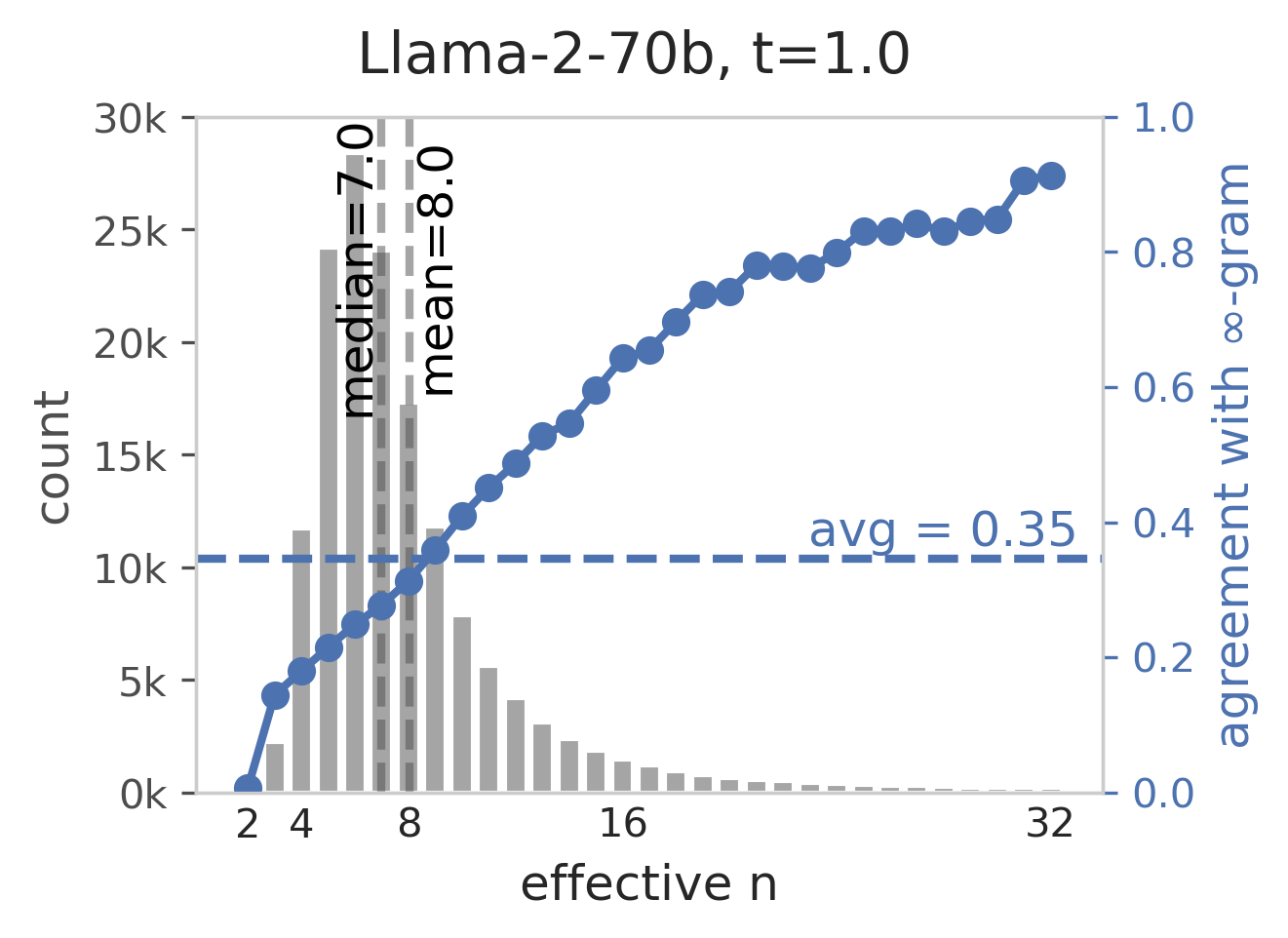

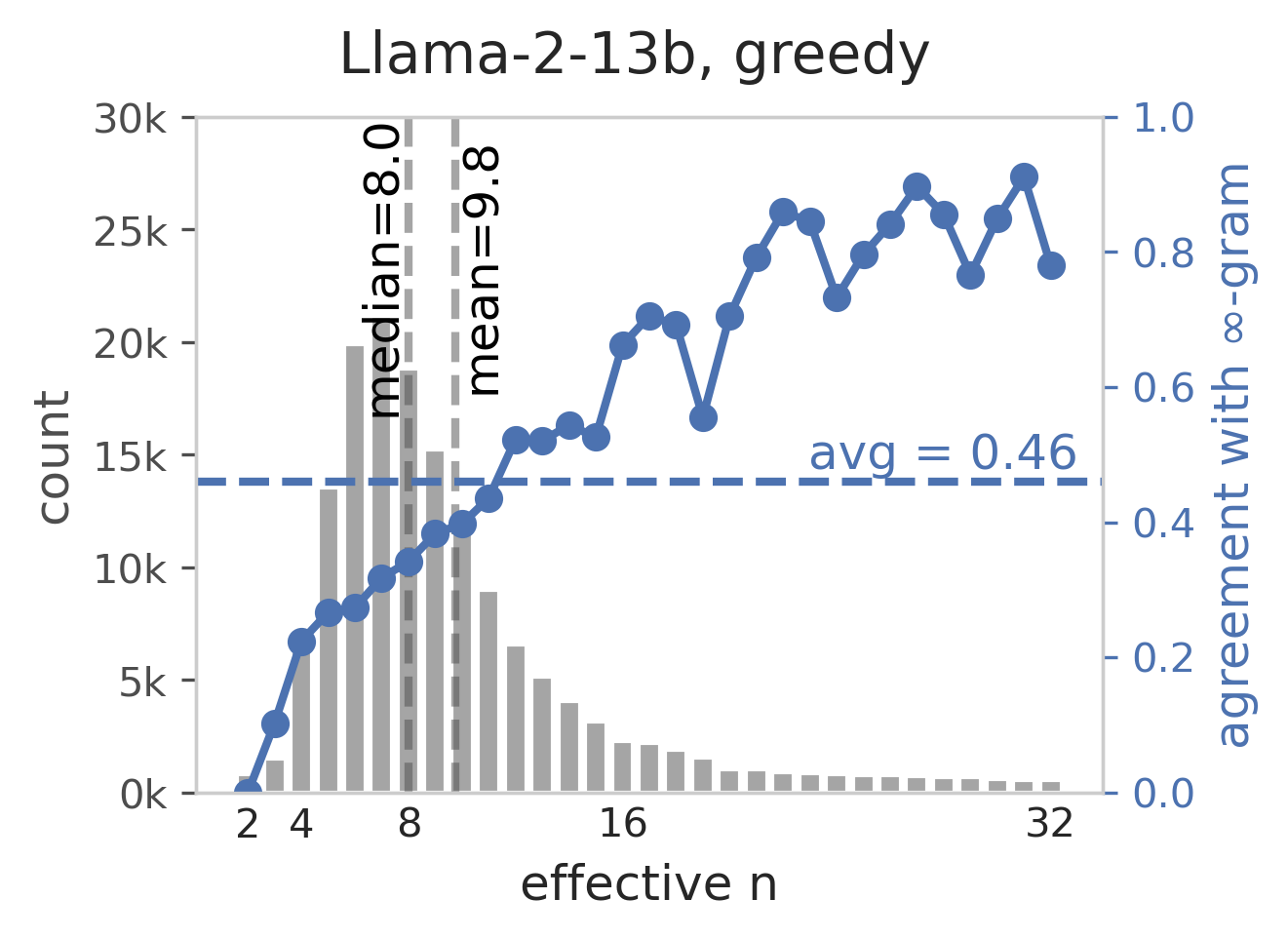

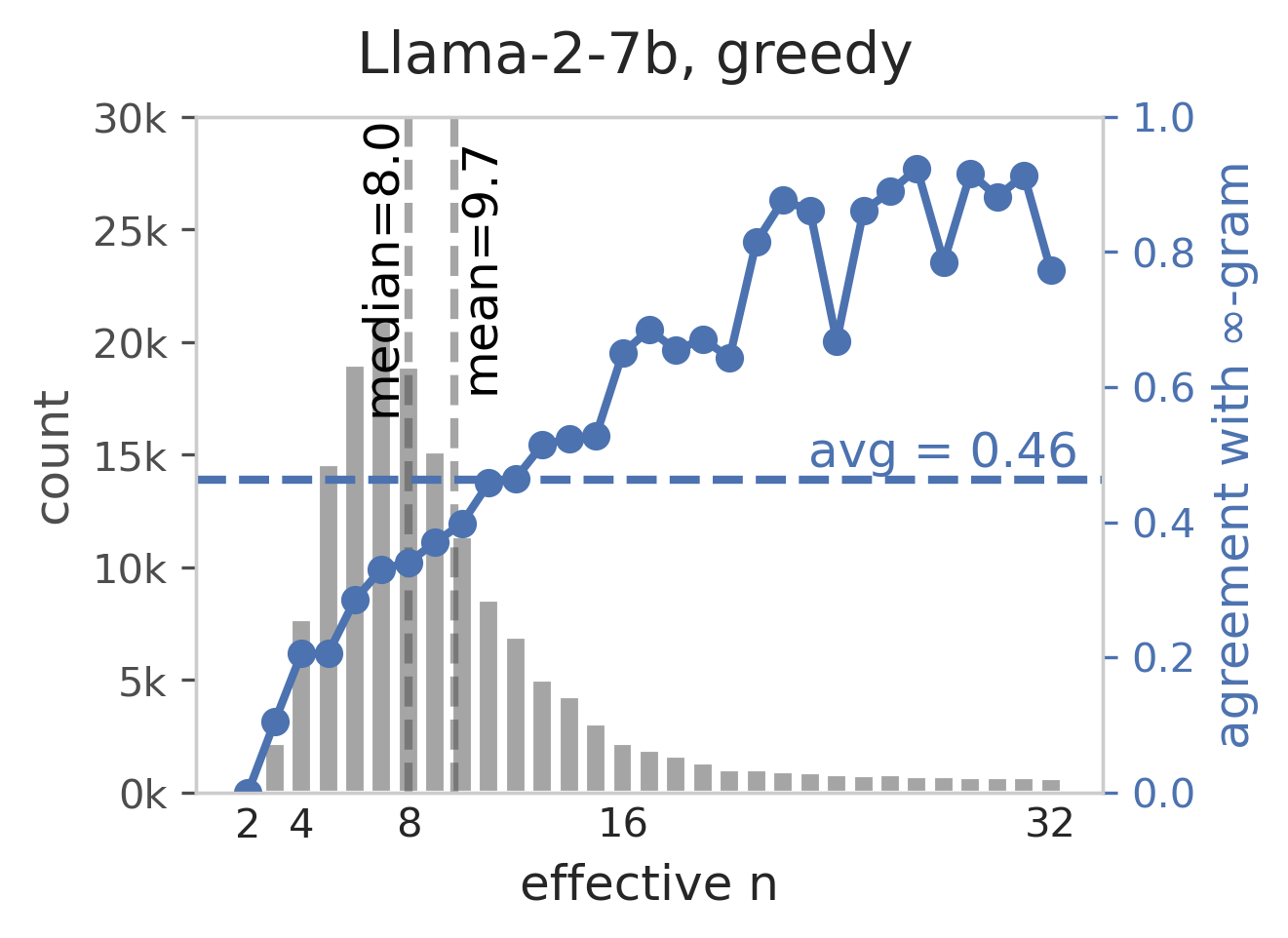

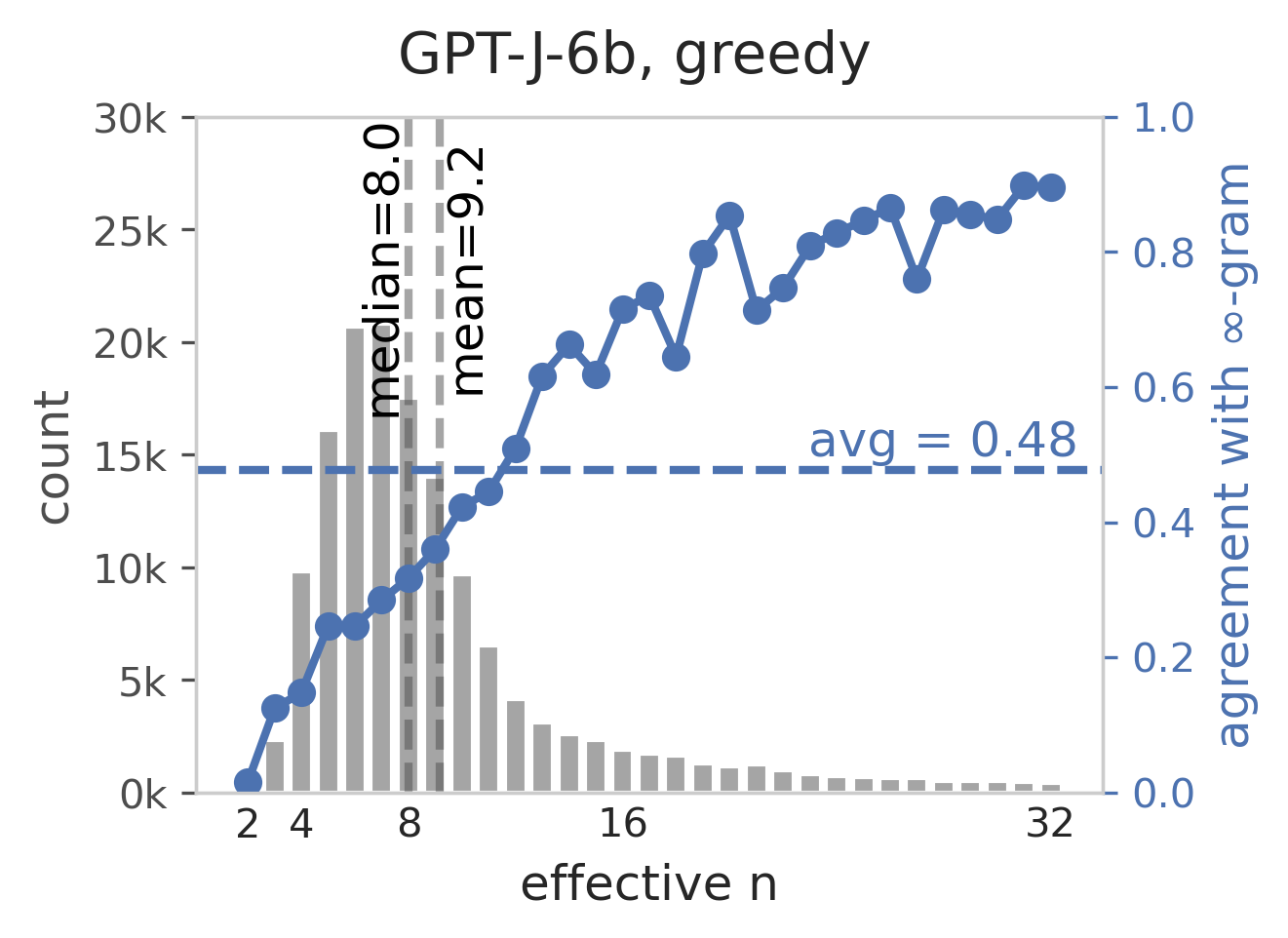

Abstract: Are $n$-gram LLMs still relevant in this era of neural LLMs? Our answer is yes, and we showcase their values in both text analysis and improving neural LLMs. This was done by modernizing $n$-gram LMs in two aspects. First, we train them at the same data scale as neural LLMs -- 5 trillion tokens. This is the largest $n$-gram LM ever built. Second, existing $n$-gram LMs use small $n$ which hinders their performance; we instead allow $n$ to be arbitrarily large, by introducing a new $\infty$-gram LM with backoff. Instead of pre-computing $n$-gram count tables (which would be very expensive), we develop an engine named infini-gram -- powered by suffix arrays -- that can compute $\infty$-gram (as well as $n$-gram with arbitrary $n$) probabilities with millisecond-level latency. The $\infty$-gram framework and infini-gram engine enable us to conduct many novel and interesting analyses of human-written and machine-generated text: we find that the $\infty$-gram LM has fairly high accuracy for next-token prediction (47%), and can complement neural LLMs to greatly reduce their perplexity. When analyzing machine-generated text, we also observe irregularities in the machine--$\infty$-gram agreement level with respect to the suffix length, which indicates deficiencies in neural LLM pretraining and the positional embeddings of Transformers.

- Quantitative analysis of culture using millions of digitized books. Science, 331:176 – 182, 2011. URL https://api.semanticscholar.org/CorpusID:40104730.

- Mining source code repositories at massive scale using language modeling. 2013 10th Working Conference on Mining Software Repositories (MSR), pp. 207–216, 2013. URL https://api.semanticscholar.org/CorpusID:1857729.

- Adaptive input representations for neural language modeling. In Proceedings of the International Conference on Learning Representations, 2019.

- Improving language models by retrieving from trillions of tokens. In Proceedings of the International Conference of Machine Learning, 2022.

- Accelerating large language model decoding with speculative sampling. ArXiv, abs/2302.01318, 2023. URL https://api.semanticscholar.org/CorpusID:256503945.

- Documenting large webtext corpora: A case study on the colossal clean crawled corpus. In Conference on Empirical Methods in Natural Language Processing, 2021. URL https://api.semanticscholar.org/CorpusID:237568724.

- What’s in my big data? ArXiv, abs/2310.20707, 2023. URL https://api.semanticscholar.org/CorpusID:264803575.

- All our n-gram are belong to you. Google Machine Translation Team, 20, 2006. URL https://blog.research.google/2006/08/all-our-n-gram-are-belong-to-you.html.

- The Pile: An 800GB dataset of diverse text for language modeling. arXiv preprint arXiv:2101.00027, 2020.

- Openllama: An open reproduction of llama, May 2023. URL https://github.com/openlm-research/open_llama.

- Dirk Groeneveld. The big friendly filter. https://github.com/allenai/bff, 2023.

- Retrieval augmented language model pre-training. In Proceedings of the International Conference of Machine Learning, 2020.

- Rest: Retrieval-based speculative decoding. 2023. URL https://api.semanticscholar.org/CorpusID:265157884.

- The curious case of neural text degeneration. ArXiv, abs/1904.09751, 2019. URL https://api.semanticscholar.org/CorpusID:127986954.

- Few-shot learning with retrieval augmented language models. arXiv preprint arXiv:2208.03299, 2022.

- Speech and language processing - an introduction to natural language processing, computational linguistics, and speech recognition. In Prentice Hall series in artificial intelligence, 2000. URL https://api.semanticscholar.org/CorpusID:60691216.

- Linear work suffix array construction. J. ACM, 53:918–936, 2006. URL https://api.semanticscholar.org/CorpusID:12825385.

- Slava M. Katz. Estimation of probabilities from sparse data for the language model component of a speech recognizer. IEEE Trans. Acoust. Speech Signal Process., 35:400–401, 1987. URL https://api.semanticscholar.org/CorpusID:6555412.

- Suffix trees as language models. In International Conference on Language Resources and Evaluation, 2012. URL https://api.semanticscholar.org/CorpusID:12071964.

- Generalization through memorization: Nearest neighbor language models. In Proceedings of the International Conference on Learning Representations, 2020.

- Copy is all you need. In Proceedings of the International Conference on Learning Representations, 2023.

- Deduplicating training data makes language models better. In Proceedings of the Association for Computational Linguistics, 2022.

- Residual learning of neural text generation with n-gram language model. In Findings of the Association for Computational Linguistics: EMNLP 2022, 2022. URL https://aclanthology.org/2022.findings-emnlp.109.

- Data portraits: Recording foundation model training data. ArXiv, abs/2303.03919, 2023. URL https://api.semanticscholar.org/CorpusID:257378087.

- SILO language models: Isolating legal risk in a nonparametric datastore. arXiv preprint arXiv:2308.04430, 2023a. URL https://arxiv.org/abs/2308.04430.

- Nonparametric masked language modeling. In Findings of ACL, 2023b.

- Language models as knowledge bases? ArXiv, abs/1909.01066, 2019. URL https://api.semanticscholar.org/CorpusID:202539551.

- The roots search tool: Data transparency for llms. In Annual Meeting of the Association for Computational Linguistics, 2023. URL https://api.semanticscholar.org/CorpusID:257219882.

- Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

- Compact, efficient and unlimited capacity: Language modeling with compressed suffix trees. In Conference on Empirical Methods in Natural Language Processing, 2015. URL https://api.semanticscholar.org/CorpusID:225428.

- REPLUG: Retrieval-augmented black-box language models. arXiv preprint arXiv:2301.12652, 2023.

- Dolma: An Open Corpus of 3 Trillion Tokens for Language Model Pretraining Research. Technical report, Allen Institute for AI, 2023. Released under ImpACT License as Medium Risk artifact, https://github.com/allenai/dolma.

- Herman Stehouwer and Menno van Zaanen. Using suffix arrays as language models: Scaling the n-gram. 2010. URL https://api.semanticscholar.org/CorpusID:18379946.

- Together. RedPajama: An open source recipe to reproduce LLaMA training dataset, 2023. URL https://github.com/togethercomputer/RedPajama-Data.

- LLaMA: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023a.

- Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023b.

- GPT-J-6B: A 6 Billion Parameter Autoregressive Language Model. https://github.com/kingoflolz/mesh-transformer-jax, May 2021.

- Training language models with memory augmentation. In Proceedings of Empirical Methods in Natural Language Processing, 2022.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.