Introduction

Large language models (LLMs) have transformed the range of tasks that AI systems can perform. However, they are typically limited in the context length they can handle, which poses a challenge for applications such as document summarization, long conversations, and lengthy reasoning tasks. Most models have a preset limit on the number of tokens they can consider, and raising this limit has traditionally meant a heavy computational burden and fine-tuning on extensive datasets. This paper proposes E²-LLM, a method for efficiently extending the context length of LLMs: the model is trained once on shorter sequences and can then be evaluated on longer inputs without further fine-tuning or heavy additional computation.

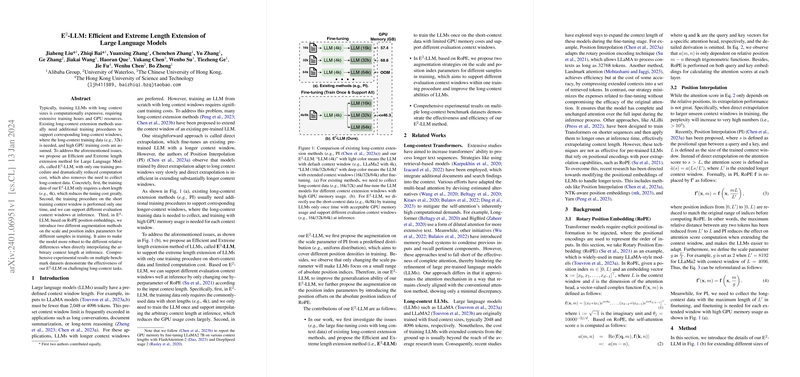

Methodology

E²-LLM is built on a two-pronged augmentation strategy that operates on the Rotary Position Embedding (RoPE) to extend the effective context length with minimal additional training. The first augmentation varies the scale parameter of the position embeddings, changing the spacing of position indices so that the model learns to handle positions at different densities. The second augments the position indices themselves with offsets, exposing the model to different positional ranges. Together, these augmentations teach the LLM to generalize across different context lengths and relative position differences; at inference time, the appropriate setting is chosen according to the target context window.
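The scale augmentation can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's implementation: it uses standard RoPE frequencies (base 10000, rotating adjacent dimension pairs as in the original RoFormer formulation), assumes a position-interpolation-style rule in which index m becomes m / g for a scale g, and draws g from a hypothetical uniform sampler, `sample_scale`. The offset augmentation is not modeled.

```python
import math
import random

def rope_frequencies(dim: int, base: float = 10000.0) -> list[float]:
    # Standard RoPE: one frequency theta_i = base^(-2i/dim) per dimension pair.
    if dim % 2:
        raise ValueError("RoPE needs an even dimension")
    return [base ** (-2 * i / dim) for i in range(dim // 2)]

def apply_rope(vec: list[float], position: float, freqs: list[float]) -> list[float]:
    # Rotate each adjacent pair (x_{2i}, x_{2i+1}) by the angle position * theta_i.
    out: list[float] = []
    for i, theta in enumerate(freqs):
        x, y = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(position * theta), math.sin(position * theta)
        out += [x * c - y * s, x * s + y * c]
    return out

def scaled_positions(length: int, scale: float) -> list[float]:
    # Assumed scale rule (position-interpolation style): index m becomes m / scale,
    # so a larger scale packs the same tokens into a denser range of positions.
    return [m / scale for m in range(length)]

def sample_scale(rng: random.Random, max_scale: int) -> int:
    # Hypothetical sampler: a uniform integer scale in [1, max_scale],
    # standing in for whatever distribution the paper actually uses.
    return rng.randint(1, max_scale)

def score(q: list[float], k: list[float], pos_q: float, pos_k: float,
          freqs: list[float]) -> float:
    # Unnormalized attention logit between a rotated query and a rotated key.
    rq, rk = apply_rope(q, pos_q, freqs), apply_rope(k, pos_k, freqs)
    return sum(a * b for a, b in zip(rq, rk))

# One training step's augmentation on a 4,096-token sequence.
rng = random.Random(0)
freqs = rope_frequencies(8)
g = sample_scale(rng, max_scale=8)
pos = scaled_positions(4096, g)

# Tokens 40 apart now interact as if they were 40 / g apart.
q, k = [1.0, 0.0] * 4, [0.5, 0.5] * 4
assert math.isclose(score(q, k, pos[140], pos[100], freqs),
                    score(q, k, 40 / g, 0.0, freqs), abs_tol=1e-9)
```

Because RoPE attention logits depend only on the difference between query and key positions, dividing every index by g shrinks all relative distances by the same factor, which is what exposes the model to positions at a different density.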

Experimental Findings

E²-LLM was evaluated on several benchmark datasets designed to test long-context abilities. It performed effectively across these tasks, often matching or outperforming existing LLMs that had been trained extensively for longer context windows. Notably, it achieved these results at significantly lower GPU memory cost: it required only a single training run on short sequences (e.g., 4k tokens), yet handled much longer contexts (e.g., 32k tokens) effectively.
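The 4k-to-32k example can be made concrete with a short calculation. Assuming, as in position interpolation, that the inference-time scale is the ratio of evaluation length to training length (rounded up to an integer here), and that "4k" and "32k" mean 4,096 and 32,768 tokens, a model trained on 4k sequences would use a scale of 8 for 32k inputs, which keeps every compressed position index inside the range seen during training. The function names below are illustrative, not taken from the paper.

```python
import math

def inference_scale(eval_len: int, train_len: int) -> int:
    # Assumed rule (as in position interpolation): the smallest integer scale that
    # maps evaluation indices 0..eval_len-1 into the trained range [0, train_len).
    return max(1, math.ceil(eval_len / train_len))

def scaled_positions(length: int, scale: int) -> list[float]:
    # Compress each position index m to m / scale.
    return [m / scale for m in range(length)]

train_len, eval_len = 4096, 32768  # assuming "4k" = 4,096 and "32k" = 32,768 tokens
g = inference_scale(eval_len, train_len)
positions = scaled_positions(eval_len, g)
print(g, max(positions))  # 8 4095.875
```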

Implications and Future Work

E²-LLM opens the door to using powerful LLMs on long inputs without the prohibitive cost of training them on long contexts. In future work, the authors intend to apply the method to larger models and to evaluate it on a wider variety of datasets and tasks. They also plan to explore its adaptability to other types of positional encodings and LLMs. As computational demands grow, E²-LLM stands out as a promising way to extend what LLMs can do without breaking the computational bank.