PoSE: Efficient Context Window Extension of LLMs via Positional Skip-wise Training

The paper "PoSE: Efficient Context Window Extension of LLMs via Positional Skip-wise Training" by Dawei Zhu et al. proposes an efficient method for extending the context window of LLMs. It targets a basic limitation of these models: a fixed, predefined context length, which constrains applications that must process very long sequences.

Summary and Key Concepts

Background and Motivation

Transformer LLMs such as GPT and LLaMA are trained with a fixed context length, typically a few thousand tokens (2,048 for the original LLaMA). This limits tasks that need long inputs, such as document summarization, long-text retrieval, and in-context learning with many examples. The usual way to extend the window is full-length fine-tuning on sequences of the target length, which quickly becomes prohibitive because the cost of self-attention grows quadratically with sequence length.
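As a rough illustration of that quadratic term: self-attention compares every query with every key, so the number of attention scores per head and per layer grows with the square of the sequence length. The sketch below uses hypothetical helper names and deliberately ignores the projection and MLP layers, whose cost grows only linearly; it compares a 16k window with a 2k one.

```python
def attention_score_entries(seq_len: int) -> int:
    """Query-key scores one attention head computes over a full sequence."""
    return seq_len * seq_len


def relative_attention_cost(target_len: int, base_len: int) -> float:
    """How many times more query-key scores target_len needs than base_len."""
    return attention_score_entries(target_len) / attention_score_entries(base_len)


# Going from a 2k to a 16k window: 8x more tokens, 64x more attention scores.
print(relative_attention_cost(16_384, 2_048))  # 64.0
```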

Proposed Approach: Positional Skip-wise Training (PoSE)

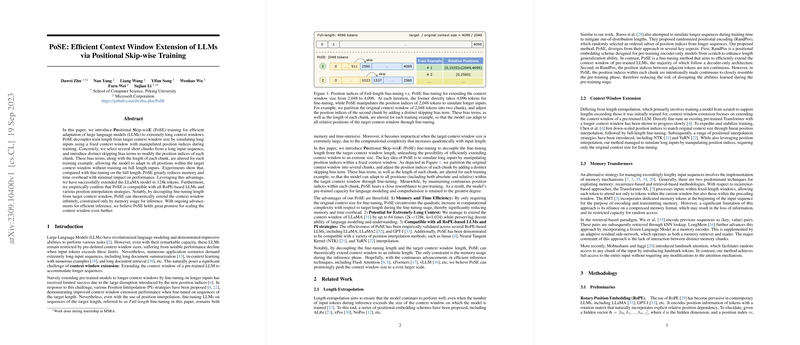

The paper introduces PoSE, a fine-tuning strategy that decouples the training context size from the target context length. Instead of training on full-length inputs, it manipulates position indices so that a fixed-size training window simulates long inputs. The training window is partitioned into several chunks, and each chunk's position indices are shifted by its own skipping bias term, so the model is exposed to a diverse range of relative positions within the target context window. Because the chunk lengths and bias terms are varied for every training example, the model learns to handle positions up to the target length without the computational cost of full-length fine-tuning.
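The position-index manipulation described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: chunk lengths come from uniformly random cut points, and the skipping biases are non-decreasing, with u_0 = 0 and each u_i drawn uniformly from [u_{i-1}, L_t - L_c], which keeps every position id below the target length L_t. The function name `pose_position_ids` is hypothetical, the default of two chunks is an assumption, and only position ids are produced; the token content of each chunk is left untouched.

```python
import random
from typing import List, Optional


def pose_position_ids(
    train_len: int,
    target_len: int,
    num_chunks: int = 2,  # assumption: two chunks by default
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Hypothetical sketch of PoSE-style position ids for one training example.

    The train_len tokens are split into num_chunks contiguous chunks. Chunk i
    keeps consecutive ids but is shifted by a skipping bias u_i. Biases are
    non-decreasing and at most target_len - train_len, so all ids lie in
    [0, target_len) and remain strictly increasing.
    """
    if not 1 <= num_chunks <= train_len:
        raise ValueError("need 1 <= num_chunks <= train_len")
    if target_len < train_len:
        raise ValueError("target_len must be >= train_len")
    rng = rng or random.Random()

    # Assumption: random chunk lengths from num_chunks - 1 distinct cut points.
    cuts = sorted(rng.sample(range(1, train_len), num_chunks - 1))
    bounds = [0] + cuts + [train_len]
    lengths = [bounds[i + 1] - bounds[i] for i in range(num_chunks)]

    # Assumption: u_0 = 0, then u_i drawn uniformly from [u_{i-1}, max_skip].
    max_skip = target_len - train_len
    biases = [0]
    for _ in range(num_chunks - 1):
        biases.append(rng.randint(biases[-1], max_skip))

    position_ids: List[int] = []
    start = 0  # number of tokens in the preceding chunks
    for length, bias in zip(lengths, biases):
        position_ids.extend(range(start + bias, start + bias + length))
        start += length
    return position_ids


# Usage: a 2k training window simulating a 16k target window.
ids = pose_position_ids(train_len=2048, target_len=16384, rng=random.Random(0))
print(len(ids), ids[0], max(ids) < 16384)  # 2048 0 True
```

Every example still occupies only 2,048 positions, but the distance between its first and last position id changes from example to example and can approach the 16k target, which is how long relative distances are covered without long sequences.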

Experimental Results

The empirical evaluation demonstrates PoSE's efficacy on multiple fronts:

- Memory and Time Efficiency:

  - PoSE keeps the fixed training window size during fine-tuning, which greatly reduces both time and memory overhead compared with full-length fine-tuning. For example, extending LLaMA-7B from a 2k to a 16k context window requires substantially fewer computational resources with PoSE.

- Language Modeling Performance:

  - On GovReport and Proof-pile, PoSE achieves perplexity comparable to full-length fine-tuning while using a fraction of the computational resources. This indicates that PoSE's position manipulation scheme preserves the model's language modeling ability at extended context lengths.

- Compatibility and Versatility:

  - PoSE has been validated empirically on several RoPE-based LLMs, including LLaMA, LLaMA2, GPT-J, and Baichuan. It also works with different interpolation strategies such as Linear, NTK, and YaRN, demonstrating its robustness and adaptability.

- Potential for Extreme Context Lengths:

  - PoSE shows promising results when extending context windows to extreme lengths such as 96k and 128k tokens. Evaluated on long-document datasets such as Books3 and Gutenberg, the extended models reach reasonable perplexity, indicating that they can process very long sequences effectively.
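The interpolation strategies mentioned above change how RoPE maps a position to rotation angles. The sketch below covers two of them using their standard formulations, which are assumptions here rather than details from this summary: Linear (position) interpolation divides the position by the extension factor, while NTK-aware scaling keeps the position and enlarges the RoPE base to base * scale^(d/(d-2)). YaRN, which combines per-frequency interpolation with an attention temperature adjustment, is omitted. Helper names are hypothetical; head dimension 128 and base 10000 match LLaMA-7B.

```python
import math
from typing import List


def rope_inv_freq(dim: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE frequencies theta_j = base^(-2j/dim) for j = 0 .. dim/2 - 1."""
    return [base ** (-2 * j / dim) for j in range(dim // 2)]


def linear_interp_angles(pos: float, dim: int, scale: float,
                         base: float = 10000.0) -> List[float]:
    """Linear interpolation: squeeze the position by 1/scale, then rotate as usual."""
    return [(pos / scale) * f for f in rope_inv_freq(dim, base)]


def ntk_aware_angles(pos: float, dim: int, scale: float,
                     base: float = 10000.0) -> List[float]:
    """NTK-aware scaling: keep the position, enlarge the base to base * scale^(dim/(dim-2))."""
    new_base = base * scale ** (dim / (dim - 2))
    return [pos * f for f in rope_inv_freq(dim, new_base)]


# Usage: extending a 2k window to 16k (scale 8) with head dimension 128.
scale, dim, pos = 8.0, 128, 10_000.0
base_angles = [pos * f for f in rope_inv_freq(dim)]
lin = linear_interp_angles(pos, dim, scale)
ntk = ntk_aware_angles(pos, dim, scale)

# Linear interpolation slows every frequency by the same factor.
print(all(math.isclose(a, b / scale) for a, b in zip(lin, base_angles)))  # True
# NTK-aware scaling keeps the fastest frequency and slows the slowest one by `scale`.
print(math.isclose(ntk[0], base_angles[0]),
      math.isclose(ntk[-1], base_angles[-1] / scale))  # True True
```

The design difference is where resolution is lost: linear interpolation compresses all frequencies uniformly, while NTK-aware scaling leaves the high-frequency (local) dimensions nearly intact and stretches mainly the low-frequency ones.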

Theoretical and Practical Implications

Theoretical Implications

PoSE's central idea is to use position indices to simulate long contexts within a fixed training window. This removes the dependence on fine-tuning with full-length sequences and, in principle, allows extension to arbitrary lengths, with the practical limit set by inference memory, which advances in efficient inference techniques can push further. The paper also contributes to the understanding of how positional embedding schemes, particularly RoPE, can be adapted for length extrapolation.
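In practice, memory at inference time is what bounds the usable length. One component that efficient attention kernels do not remove is the key/value cache, which grows linearly with sequence length. A back-of-the-envelope sketch, assuming a LLaMA-7B-like shape (32 layers, 32 attention heads of dimension 128) and a 16-bit cache; the helper name is hypothetical, and model weights and activations are not counted:

```python
def kv_cache_bytes(
    seq_len: int,
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    bytes_per_value: int = 2,  # assumption: fp16 / bf16 cache
    batch_size: int = 1,
) -> int:
    """Bytes needed to cache keys and values for every layer and token."""
    # Factor 2: one cached tensor for keys and one for values.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value * batch_size


# Assumed LLaMA-7B-like shape: 32 layers, 32 heads of dimension 128.
GIB = 1024 ** 3
for n in (2_048, 16_384, 131_072):
    print(n, kv_cache_bytes(n, num_layers=32, num_kv_heads=32, head_dim=128) / GIB)
# 2048 1.0
# 16384 8.0
# 131072 64.0
```

Under these assumptions the cache alone goes from 1 GiB at 2k tokens to 64 GiB at 128k tokens, on top of the model weights, which is why inference memory rather than fine-tuning cost becomes the binding constraint once PoSE removes the need for full-length training.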

Practical Implications

Practically, PoSE makes long-context adaptation cheap enough to be feasible in real-world deployments. Because fine-tuning stays within the original window size, models can be adapted to long contexts on limited hardware, although inference at the target length still needs memory that grows with that length. This is particularly useful in domains that handle large volumes of text, such as legal document analysis, academic research, and large-scale digital archiving.

Future Directions

Given the promising results of PoSE, future research can explore several avenues:

- Optimizing Positional Interpolation: While the paper explores linear, NTK, and YaRN interpolation methods, further optimization of these techniques could enhance performance for even longer contexts.

- Broader Model Applicability: Extending PoSE to models that use position encodings other than RoPE could be another fruitful direction.

- Inference Efficiency: Continued advances in efficient inference techniques such as FlashAttention and xFormers can further reduce memory usage at inference time, pushing the practical limits of context length.

- Real-World Applications: Implementing PoSE in diverse real-world applications can validate its practical utility and inspire further enhancements based on application-specific requirements.

Conclusion

PoSE is an efficient and effective approach to extending the context windows of LLMs. It avoids the computational cost of full-length fine-tuning and shows substantial potential for both research and practical long-sequence processing, making LLMs more versatile and scalable for complex, real-world text processing tasks.