An Evaluation of "VCoder: Versatile Vision Encoders for Multimodal LLMs"

The paper "VCoder: Versatile Vision Encoders for Multimodal LLMs" addresses limitations in the object perception capabilities of present-day Multimodal LLMs (MLLMs). It identifies a critical gap: while MLLMs excel at visual reasoning and question answering, they falter on simple yet essential tasks such as object identification and counting. This discrepancy echoes Moravec's Paradox, the observation that machines often handle tasks requiring complex reasoning more easily than the basic sensory tasks that humans find effortless.

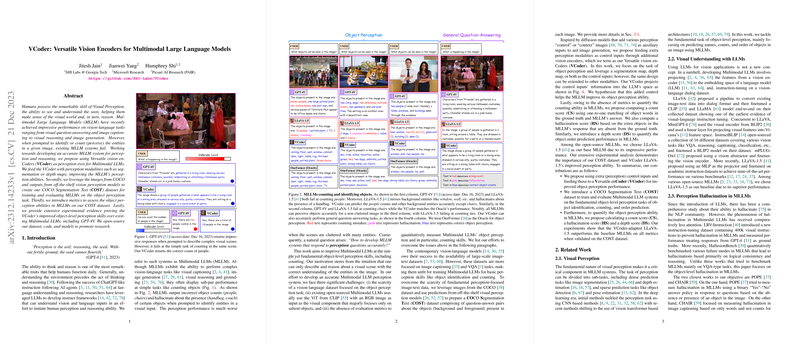

The principal contribution of this work is the proposal of Versatile vision enCoders (VCoder), which act as auxiliary modules that enhance the perception abilities of MLLMs. VCoder encodes perception modalities such as segmentation and depth maps into embeddings that improve the model's understanding of visual inputs. By attaching a VCoder module to an existing MLLM, specifically LLaVA-1.5, the paper demonstrates improved object-level perception without degrading the reasoning performance of the model.
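The idea of feeding an extra perception modality to the language model can be illustrated with a minimal sketch. It assumes that features extracted from a segmentation map are projected by a small two-layer adapter into the LLM's embedding space and then concatenated with the ordinary image and text tokens. The function names, the dimensions, the ReLU activation, and the token order are all assumptions chosen for illustration; they are not taken from the paper's implementation.

```python
import random

def linear(x, weights, bias):
    """Apply one dense layer to a single token vector x."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b
            for row, b in zip(weights, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def make_layer(d_in, d_out, rng):
    """Hypothetical helper: random weights standing in for trained ones."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(d_in)] for _ in range(d_out)]
    bias = [0.0] * d_out
    return weights, bias

def adapter(tokens, layer1, layer2):
    """Project each perception-feature token into the LLM embedding space."""
    return [linear(relu(linear(t, *layer1)), *layer2) for t in tokens]

def build_llm_input(seg_tokens, image_tokens, text_tokens):
    """Concatenate token sequences (the order here is an assumption)."""
    return seg_tokens + image_tokens + text_tokens

rng = random.Random(0)
d_feat, d_llm = 4, 6  # toy sizes, far smaller than any real model

# Toy stand-ins for encoder outputs and existing embeddings.
seg_features = [[rng.random() for _ in range(d_feat)] for _ in range(3)]
image_tokens = [[rng.random() for _ in range(d_llm)] for _ in range(5)]
text_tokens = [[rng.random() for _ in range(d_llm)] for _ in range(2)]

layer1 = make_layer(d_feat, d_llm, rng)
layer2 = make_layer(d_llm, d_llm, rng)

seg_tokens = adapter(seg_features, layer1, layer2)
sequence = build_llm_input(seg_tokens, image_tokens, text_tokens)
print(len(sequence), len(sequence[0]))
```

The point of the sketch is only that the auxiliary modality ends up as additional tokens in the same embedding space as the image and text tokens, so the base model itself does not have to change shape to consume it.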

The paper also introduces a new dataset, COCO Segmentation Text (COST), for training and evaluating MLLMs on object perception tasks. It pairs each image with questions about the objects it contains, targeting the areas where MLLMs are weakest. By providing both the required modality inputs and a suite of perception-focused queries, COST supports more rigorous evaluation of object perception.
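To make the notion of a perception-focused query and answer concrete, the following sketch turns a list of per-object class labels (as one might read them off a segmentation annotation) into a question and a count sentence. The function name, the question wording, the sentence template, and the naive pluralisation are hypothetical and are not drawn from the COST dataset itself.

```python
from collections import Counter

def describe_objects(labels):
    """Turn per-object class labels into a single count sentence."""
    counts = Counter(labels)
    parts = []
    for name, n in sorted(counts.items()):
        # Naive pluralisation ("persons"), adequate for a sketch only.
        parts.append(f"{n} {name}" if n == 1 else f"{n} {name}s")
    return "The objects present in the image are: " + ", ".join(parts) + "."

# Hypothetical annotation for one image: one label per segmented object.
labels = ["person", "car", "person", "dog"]
question = "What objects can be seen in the image?"
answer = describe_objects(labels)
print(question)
print(answer)
```

Because the answer is derived mechanically from annotations, a sentence in this style can serve as a reference against which a model's free-text response is compared.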

To quantify object perception, the paper introduces two metrics: the count score (CS) and the hallucination score (HS). They assess how accurately an MLLM identifies and counts objects, and they account for errors that appear as hallucinated objects or incorrect counts.
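The intuition behind the two scores can be sketched with simplified proxies. The paper's exact formulas are not reproduced here; the definitions below are assumptions made for illustration. The proxy count score is the percentage of ground-truth classes whose count the model gets exactly right, and the proxy hallucination score is the percentage of predicted classes that do not appear in the ground truth.

```python
def count_score(gt, pred):
    """Proxy CS: % of ground-truth classes predicted with the exact count."""
    if not gt:
        return 0.0
    correct = sum(1 for name, n in gt.items() if pred.get(name) == n)
    return 100.0 * correct / len(gt)

def hallucination_score(gt, pred):
    """Proxy HS: % of predicted classes that are absent from the ground truth."""
    if not pred:
        return 0.0
    extra = sum(1 for name in pred if name not in gt)
    return 100.0 * extra / len(pred)

# Hypothetical class -> count mappings parsed from reference and model text.
gt = {"person": 2, "car": 1, "dog": 1, "bench": 1}
pred = {"person": 2, "car": 1, "dog": 3, "cat": 1}

print(count_score(gt, pred))          # 50.0: person and car match, dog and bench do not
print(hallucination_score(gt, pred))  # 25.0: "cat" is the only class not in gt
```

Under these proxies a higher count score is better and a lower hallucination score is better, which matches the roles the two metrics play in the evaluation.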

The empirical results show that models enhanced with VCoder outperform existing MLLM baselines (including MiniGPT-4, InstructBLIP, and LLaVA-1.5) on the COST dataset across the semantic, instance, and panoptic object perception tasks. The paper places particular emphasis on the qualitative improvements observed when the segmentation map serves as the control input. The VCoder-augmented framework also performs competitively on object order perception tasks, pointing to a path for integrating additional sensory modalities.

The implications of this research are manifold. From a practical perspective, the work highlights the potential of combining multiple perception inputs to strengthen the perceptual acuity of machine learning models, which could benefit real-world applications that demand high precision in complex visual environments. Theoretically, the paper sheds light on the limitations of current vision-language datasets and suggests a need for more comprehensive data collection covering a wider array of objects and a more diverse vocabulary, so that perception training becomes more robust.

Future research based on these findings could extend in several directions, such as integrating VCoder with modalities beyond segmentation and depth, for example motion or audio, for multimodal reasoning. Scaling up datasets like COST to cover more object classes and more cluttered scenes than they currently do could also help improve these perception models.

In conclusion, this paper provides a critical evaluation toolset and a methodology for advancing the field of MLLMs by enhancing basic sensory perception capabilities, thus aligning with the ultimate goal of creating systems that mirror human-like perception and understanding.