Contextual Object Detection with Multimodal LLMs: A Summary

In computer vision and language processing, integrating multimodal data into object detection is gaining attention as a way to improve machine perception. The paper "Contextual Object Detection with Multimodal LLMs," authored by researchers from Nanyang Technological University, extends the conventional framework of multimodal LLMs (MLLMs) to tackle contextual object detection. This essay summarizes the paper's methodology, results, and implications for future AI developments.

Problem Definition and Methodology

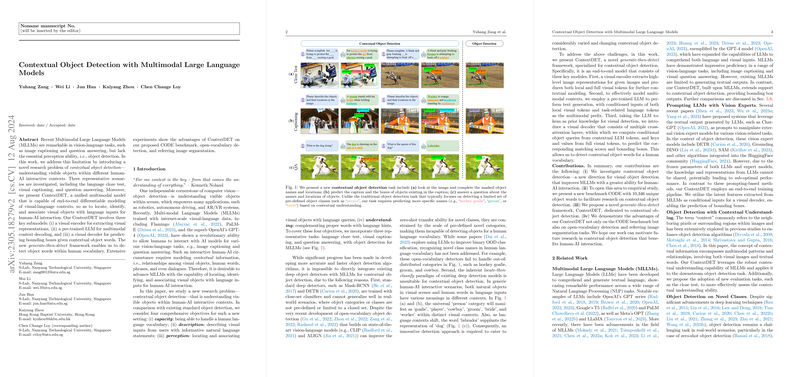

Traditional object detectors are poorly suited to tasks requiring complex human-AI interaction: they are typically constrained by pre-defined object classes and do not identify objects according to context. The paper addresses these limitations by proposing a new task, contextual object detection, which involves locating and identifying objects within various human-AI interactive contexts, such as language cloze tests, visual captioning, and question answering.
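To make the cloze-test setting concrete, the following is a minimal sketch of what one input and output of the task might look like. The `ContextualObject` class, the `[MASK]` token, the `(x_min, y_min, x_max, y_max)` box convention, and the example sentence are all hypothetical choices made for illustration; they are not taken from the paper.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical box convention: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]

MASK = "[MASK]"  # hypothetical placeholder for a masked object name


@dataclass
class ContextualObject:
    """One prediction: an object name chosen in context, plus its location."""
    name: str
    box: Box


def fill_cloze(template: str, predictions: List[ContextualObject]) -> str:
    """Replace each mask, left to right, with the corresponding predicted name."""
    parts = template.split(MASK)
    if len(parts) - 1 != len(predictions):
        raise ValueError("number of masks and predictions must match")
    completed = parts[0]
    for prediction, tail in zip(predictions, parts[1:]):
        completed += prediction.name + tail
    return completed


# Illustrative values only; a real model would produce these from an image.
template = "A [MASK] is holding a [MASK]."
predictions = [
    ContextualObject("bride", (10.0, 20.0, 110.0, 220.0)),
    ContextualObject("bouquet", (60.0, 90.0, 100.0, 140.0)),
]
completed = fill_cloze(template, predictions)
```

The point of the sketch is that each masked word is answered with both a name and a box, so the same image could yield different names for the same region depending on the surrounding sentence.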

The authors introduce ContextDET, a unified multimodal model consisting of three primary components: (i) a visual encoder for extracting high-level image representations, (ii) a pre-trained LLM for multimodal context decoding, and (iii) a visual decoder that predicts bounding boxes based on contextual object words. This generate-then-detect paradigm first produces object words in context and then localizes them, so detection draws on open-ended human vocabulary rather than a fixed label set, and the model is better able to understand visual-language contexts.

Key Components of ContextDET

- Visual Encoder: This component processes input images to produce both local and full visual tokens using pre-trained vision backbones, such as ResNet, ViT, or Swin Transformer. These tokens serve as the foundation for integrating visual information into the LLM.

- Multimodal Context Modeling with LLM: A pre-trained LLM such as OPT generates language-based contextual information conditioned on the visual representations and on task-specific language prompts. Conditioning on both modalities lets the model decode the visual-language contexts required for tasks like cloze tests and visual QA.

- Visual Decoder: Distinct from traditional detection pipelines, the visual decoder in ContextDET uses latent LLM embeddings as prior knowledge to predict object words and their bounding boxes. This mechanism not only ensures the model's contextual adaptability but also supports open-vocabulary object detection without being limited to pre-defined classes.
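The data flow through the three components can be sketched with toy stand-ins. This is not the authors' implementation: the "image" here is a hypothetical dictionary of already-known regions, the "LLM" is a simple string match, and all function names are assumptions chosen for illustration. The sketch only shows the ordering of the generate-then-detect steps and how the language context changes which objects are returned.

```python
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]   # hypothetical (x_min, y_min, x_max, y_max)
Region = Tuple[str, Box]          # toy "visual token": a name with its box


def visual_encoder(image: Dict[str, Box]) -> List[Region]:
    """Toy stand-in for a vision backbone: one token per known region."""
    return list(image.items())


def llm_generate(visual_tokens: List[Region], prompt: str) -> List[str]:
    """Toy stand-in for the LLM: pick object words given image and prompt.

    If the prompt mentions visible objects, return only those words;
    otherwise return every visible object name.
    """
    visible = [name for name, _ in visual_tokens]
    mentioned = [name for name in visible if name in prompt.lower()]
    return mentioned or visible


def visual_decoder(visual_tokens: List[Region], words: List[str]) -> List[Region]:
    """Toy stand-in for the visual decoder: attach a box to each generated word."""
    lookup = dict(visual_tokens)
    return [(word, lookup[word]) for word in words if word in lookup]


def generate_then_detect(image: Dict[str, Box], prompt: str) -> List[Region]:
    tokens = visual_encoder(image)           # (i) encode the image
    words = llm_generate(tokens, prompt)     # (ii) generate contextual object words
    return visual_decoder(tokens, words)     # (iii) localize those words


# Illustrative values only.
image = {"dog": (0, 0, 50, 50), "frisbee": (60, 10, 80, 30)}
question_result = generate_then_detect(image, "Where is the frisbee?")
caption_result = generate_then_detect(image, "Describe the image.")
```

In the actual model, the decoder works from latent LLM embeddings rather than from a name lookup; the toy lookup only stands in for that step so the control flow stays visible.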

Experimental Evaluation

The proposed framework is evaluated on the CODE benchmark, which is specifically designed for contextual object detection and includes over 10,000 unique object words. The evaluation reports top-1 accuracy (Acc@1), top-5 accuracy (Acc@5), and mean Average Precision (AP) for both the top-1 and top-5 predicted names. According to the reported results, ContextDET outperforms the state-of-the-art models it is compared against and generalizes across diverse contextual settings. Ablation studies further highlight the contribution of each component, particularly the role of local visual tokens in enhancing contextual understanding.
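As a reminder of what the accuracy metrics measure, here is a minimal sketch of the generic top-k accuracy definition: a prediction counts as correct if the target name appears among the k highest-ranked candidates. The function name and the sample data are illustrative assumptions, and the benchmark's exact matching protocol (for example, how names are normalized, or how AP combines names with boxes) is not covered here.

```python
from typing import Sequence


def top_k_accuracy(
    ranked_predictions: Sequence[Sequence[str]],
    targets: Sequence[str],
    k: int,
) -> float:
    """Fraction of targets found among the first k ranked candidate names."""
    if len(ranked_predictions) != len(targets):
        raise ValueError("need one ranked list per target")
    if not targets:
        return 0.0
    hits = sum(
        target in candidates[:k]
        for candidates, target in zip(ranked_predictions, targets)
    )
    return hits / len(targets)


# Illustrative data: each inner list is ranked from most to least likely.
ranked = [
    ["dog", "cat", "fox"],
    ["cup", "mug", "bowl"],
    ["car", "bus", "van"],
    ["hat", "cap", "wig"],
]
targets = ["dog", "mug", "truck", "wig"]

acc_at_1 = top_k_accuracy(ranked, targets, k=1)  # only "dog" is ranked first
acc_at_5 = top_k_accuracy(ranked, targets, k=5)  # "truck" is never predicted
```

With a vocabulary of more than 10,000 object words, Acc@5 is the more forgiving of the two because near-synonyms of the target often appear just below the top-ranked name.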

Implications and Future Developments

The conceptual and experimental advancements presented in this paper have significant implications for the evolution of AI systems capable of robust human-AI interaction. By extending the utility of MLLMs for contextual object detection, ContextDET offers new pathways for applications requiring finer object-location awareness, such as in augmented reality (AR) and autonomous systems.

Looking ahead, there is promising potential for further reducing data annotation costs through semi-supervised or weakly-supervised learning approaches. Moreover, the exploration of other capabilities of MLLMs, such as their interactive adaptability for on-the-fly adjustments based on human instructions, presents a fertile ground for future research. In sum, the work lays a foundation for more responsive, context-aware AI systems, advancing the field's understanding of multimodal learning integration.