Visual LLM Pre-training

Introduction and Context

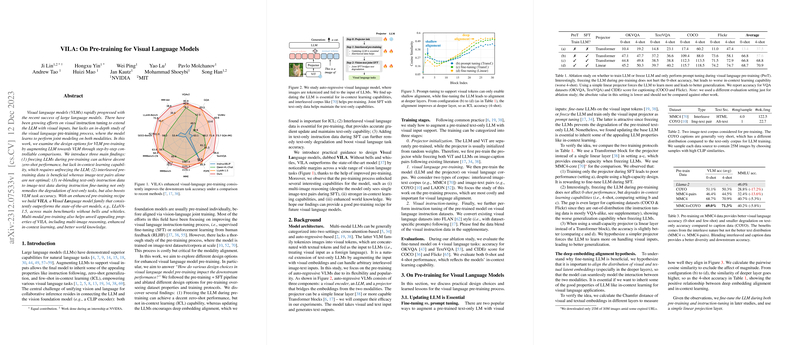

Recent AI research has shown considerable gains from extending LLMs to accept visual inputs, creating visual language models (VLMs). These models show promising results in comprehending and generating content that combines text and visual information, an area often called multimodal learning. A critical step in developing VLMs is pre-training, in which a model is trained on a large dataset containing both text and images. However, the specifics of augmenting an LLM with visual capabilities, known as visual language pre-training, have not been studied in depth. This work aims to fill that gap by examining various design options for visual language pre-training.

Pre-training Factors and Findings

The paper reports three key findings about augmenting an LLM with visual inputs. First, freezing the LLM during pre-training can produce acceptable zero-shot results (where the model is given no worked examples in its prompt), but it falls short on tasks that require in-context learning; for those, unfreezing (updating) the LLM proves crucial. Second, interleaved pre-training data, in which text segments are interspersed with images, offers substantial benefits over image-text pairs alone: it provides more accurate gradient updates and helps maintain text-only capabilities. Third, blending text-only instruction data into the image-text data during supervised fine-tuning (SFT) not only remedies the degradation on text-only tasks but also improves accuracy on visual language tasks. These insights are useful for designing pre-training regimes for future VLMs.
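The second and third findings concern how training data is assembled, which a small data-handling sketch can make concrete. The code below is a minimal illustration, not the paper's pipeline: the `<image>` placeholder, the `Image` class, the function names, and the `text_fraction` knob are all hypothetical, and the source does not state a mixing ratio.

```python
import random
from dataclasses import dataclass
from typing import List, Union

IMAGE_TOKEN = "<image>"  # hypothetical placeholder, not taken from the paper


@dataclass
class Image:
    path: str


Segment = Union[str, Image]


def flatten_interleaved(segments: List[Segment]) -> str:
    """Turn text and image segments into one training string.

    Each image becomes a placeholder marking where visual tokens
    would be spliced into the sequence.
    """
    parts = [IMAGE_TOKEN if isinstance(s, Image) else s for s in segments]
    return " ".join(parts)


def blend_sft(visual: List[dict], text_only: List[dict],
              text_fraction: float, seed: int = 0) -> List[dict]:
    """Mix text-only instruction samples into a visual SFT set.

    text_fraction is the share of text-only samples in the final mix
    (an illustrative knob; the source gives no ratio).
    """
    if not 0.0 <= text_fraction < 1.0:
        raise ValueError("text_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_text / (n_text + len(visual)) = text_fraction for n_text.
    n_text = round(len(visual) * text_fraction / (1.0 - text_fraction))
    n_text = min(n_text, len(text_only))  # cannot draw more than we have
    mixed = visual + rng.sample(text_only, n_text)
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    doc = ["A cat sits on a mat.", Image("cat.png"),
           "Later it naps.", Image("nap.png")]
    print(flatten_interleaved(doc))
```

An image-text pair is the special case of one image followed by one caption, whereas an interleaved document keeps several images in their original textual context.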

Training Strategies and Outcomes

The paper's enhanced pre-training recipe yields VILA, a Visual Language model family that consistently outperforms state-of-the-art models across the main benchmarks. VILA also shows additional capabilities, such as multi-image reasoning and robust in-context learning, even on inputs it was not explicitly trained on.

Model Training and Evaluation

VILA is trained in multiple stages: projector initialization, then pre-training on visual language corpora, then fine-tuning on visual instruction datasets with dataset-specific prompts. The evaluation covers a variety of visual language tasks in both zero-shot and few-shot settings; the few-shot results reflect the model's in-context learning capabilities.
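The staged recipe can be written down as a small schedule that records which modules receive gradient updates in each stage. This is a sketch under stated assumptions: the stage and module names are hypothetical, and the choice of trainable modules per stage (projector only at first, then projector and LLM, with the vision encoder kept frozen) is an illustrative reading consistent with the finding that the LLM should be updated during pre-training, not a detail given in the text above.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Hypothetical module names for a generic VLM.
MODULES = ("vision_encoder", "projector", "llm")


@dataclass(frozen=True)
class Stage:
    name: str
    data: str
    trainable: Tuple[str, ...]


# Assumption: which modules are trainable in each stage is illustrative.
SCHEDULE = (
    Stage("projector_init", "image-text data (assumed)", ("projector",)),
    Stage("vl_pretrain", "visual language corpora", ("projector", "llm")),
    Stage("sft", "visual instruction datasets", ("projector", "llm")),
)


def requires_grad(stage: Stage) -> Dict[str, bool]:
    """Map every module to whether it is updated in the given stage."""
    unknown = set(stage.trainable) - set(MODULES)
    if unknown:
        raise ValueError(f"unknown modules: {sorted(unknown)}")
    return {m: m in stage.trainable for m in MODULES}


if __name__ == "__main__":
    for stage in SCHEDULE:
        print(stage.name, requires_grad(stage))
```

In a real training loop, a mapping like this would typically drive which parameters are frozen before each stage starts.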

Conclusion and Future Considerations

The findings from this paper offer a clear path toward more effective VLMs by identifying the crucial aspects of the visual language pre-training process. The resulting VILA model shows improved performance across numerous visual language tasks without compromising its text-only abilities. Future research could build on these findings by exploring additional pre-training datasets, optimizing training throughput, and scaling up the pre-training corpus.