Enhanced Visual Instruction Tuning for Text-Rich Image Understanding: An Overview of LLaVAR

The paper "LLaVAR: Enhanced Visual Instruction Tuning for Text-Rich Image Understanding" advances visual instruction tuning by addressing a weakness of current models: comprehending the text embedded in images. Instruction tuning has significantly improved the usefulness of large language models (LLMs) in tasks that involve interacting with humans. When augmented with a visual encoder, these models can in principle support rich human-agent interaction grounded in visual input. However, they perform poorly when asked to read and reason about the text inside images, a capability that is crucial for a fuller understanding of visual content.

Methodology and Data Collection

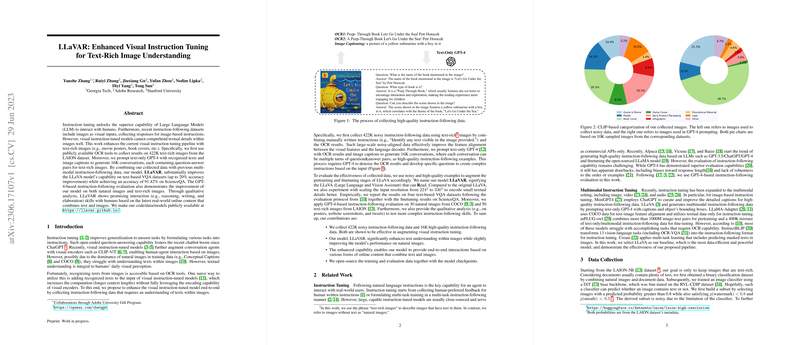

This paper introduces LLaVAR, a model that extends the visual instruction tuning of its predecessors to text-rich images. To enhance the existing visual instruction tuning pipeline, the authors collect 422K text-rich images, such as movie posters and book covers, from the LAION dataset and run Optical Character Recognition (OCR) tools on them to extract the embedded text. Because existing models are trained predominantly on natural images that contain little embedded text, this OCR-derived data supplies the text-reading signal those models lack.
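The OCR step can be pictured as turning each image's recognized text into a noisy instruction-response pair for pre-training. The sketch below is a minimal illustration under stated assumptions: the `OcrToken` record, the confidence threshold `min_conf`, the instruction templates, and `build_noisy_pair` are all hypothetical and do not reproduce the authors' actual pipeline or any specific OCR library's output format.

```python
import random
from dataclasses import dataclass


@dataclass
class OcrToken:
    """One recognized text span (hypothetical OCR output format)."""
    text: str
    confidence: float  # recognizer confidence in [0, 1]
    x: int             # left edge of the bounding box, in pixels
    y: int             # top edge of the bounding box, in pixels


# Hypothetical instruction templates; the paper's actual wording may differ.
TEMPLATES = [
    "Identify any text visible in the image.",
    "What words are written in this image?",
    "Transcribe the text shown in the picture.",
]


def build_noisy_pair(image_id, tokens, min_conf=0.5, rng=None):
    """Turn raw OCR tokens into one (instruction, response) training pair.

    Low-confidence spans are dropped and the rest are joined in a rough
    top-to-bottom, left-to-right reading order. Returns None when no
    text survives the filter, so the image can be skipped.
    """
    rng = rng or random.Random(0)
    kept = [t for t in tokens if t.confidence >= min_conf]
    if not kept:
        return None
    kept.sort(key=lambda t: (t.y, t.x))  # crude reading order: row, then column
    return {
        "image": image_id,
        "instruction": rng.choice(TEMPLATES),
        "response": " ".join(t.text for t in kept),
    }


tokens = [
    OcrToken("NIGHT", 0.97, x=40, y=300),
    OcrToken("THE", 0.95, x=10, y=120),
    OcrToken("LONG", 0.91, x=60, y=120),
    OcrToken("~#", 0.12, x=5, y=400),  # low-confidence noise, filtered out
]
pair = build_noisy_pair("poster_0001.jpg", tokens)
print(pair["response"])  # THE LONG NIGHT
```

Because the OCR output is never checked by a human, pairs built this way are noisy, which fits their use in pre-training rather than in the fine-tuning stage.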

In addition, the authors prompt a text-only GPT-4 with the OCR results and image captions to generate 16K question-answer conversations about text-rich images. These conversations serve as high-quality instruction-following examples for model training.
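One way to picture the GPT-4 step is as a prompt that packs a caption and its OCR text into a request for a conversation, followed by parsing the reply into question-answer turns. This is a hedged sketch: the prompt wording, the "Question:/Answer:" reply format, and the helper names are hypothetical, the messages only follow the common role/content chat shape, and no model is actually called. The reply used below is a hand-written stand-in, not real GPT-4 output.

```python
def build_generation_prompt(caption, ocr_text):
    """Pack an image caption and its OCR text into chat-style messages.

    The instruction wording and the requested reply format are
    hypothetical; they only illustrate the kind of request involved.
    """
    system = (
        "You are given a caption of an image and the text recognized in it by OCR. "
        "You cannot see the image. Write a conversation in which a user asks about "
        "the image and an assistant answers using only this information. Put each "
        "question on a line starting with 'Question:' and each answer on the next "
        "line starting with 'Answer:'."
    )
    user = f"Caption: {caption}\nOCR text: {ocr_text}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def parse_conversation(reply):
    """Extract (question, answer) pairs from a reply in the requested format."""
    turns = []
    question = None
    for line in reply.splitlines():
        line = line.strip()
        if line.startswith("Question:"):
            question = line[len("Question:"):].strip()
        elif line.startswith("Answer:") and question is not None:
            turns.append((question, line[len("Answer:"):].strip()))
            question = None  # reset so an Answer: line without a new question is ignored
    return turns


messages = build_generation_prompt("A movie poster with a dark city skyline.", "THE LONG NIGHT")
# Hand-written stand-in for a model reply (not real GPT-4 output).
reply = """Question: What is the title of the movie?
Answer: The title is THE LONG NIGHT.
Question: What kind of image is this?
Answer: It is a movie poster."""
turns = parse_conversation(reply)
print(len(turns))  # 2
```

Since the text-only model never sees the pixels, everything it says must come from the caption and OCR text, which is why both are included in the prompt.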

Model Architecture and Training Process

LLaVAR combines a CLIP-ViT visual encoder with a Vicuna language decoder. The model is trained in two stages:

- Pre-training Stage: This stage aligns visual features with the language decoder through a trainable projection matrix. Both the newly collected noisy data and existing pre-training datasets are used, and the decoder is not fine-tuned during this alignment.

- Fine-tuning Stage: The model is trained on the high-quality instruction-following pairs, and both the feature projection matrix and the decoder are updated to refine its question-answering capabilities.
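The two-stage schedule comes down to which components are updated in each stage, plus a projection that maps visual features into the decoder's embedding space. The sketch below uses hypothetical names (`Module`, `LlavarLikeModel`, `configure_stage`, `project`) and assumes that the CLIP-ViT encoder stays frozen in both stages; that freezing choice is an assumption and is not stated above.

```python
from dataclasses import dataclass, field


@dataclass
class Module:
    """Stand-in for a network component; only tracks whether it is trained."""
    name: str
    trainable: bool = False


@dataclass
class LlavarLikeModel:
    """Hypothetical container: visual encoder -> projection -> language decoder."""
    vision_encoder: Module = field(default_factory=lambda: Module("clip_vit"))
    projection: Module = field(default_factory=lambda: Module("projection"))
    decoder: Module = field(default_factory=lambda: Module("vicuna"))

    def modules(self):
        return [self.vision_encoder, self.projection, self.decoder]


def configure_stage(model, stage):
    """Set which modules are updated in a stage; return their names."""
    if stage not in ("pretrain", "finetune"):
        raise ValueError(f"unknown stage: {stage}")
    model.vision_encoder.trainable = False        # assumption: frozen in both stages
    model.projection.trainable = True              # projection matrix trained in both stages
    model.decoder.trainable = stage == "finetune"  # decoder unfrozen only for fine-tuning
    return [m.name for m in model.modules() if m.trainable]


def project(patch_features, weight):
    """Map each visual feature vector z (length d_vision) to z @ W (length d_text).

    `weight` is a d_vision x d_text matrix given as a list of rows.
    """
    columns = list(zip(*weight))
    return [[sum(z_i * w_i for z_i, w_i in zip(z, col)) for col in columns]
            for z in patch_features]


model = LlavarLikeModel()
print(configure_stage(model, "pretrain"))  # ['projection']
print(configure_stage(model, "finetune"))  # ['projection', 'vicuna']
print(project([[1.0, 2.0]], [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]))  # [[1.0, 2.0, 3.0]]
```

Keeping the decoder frozen in the first stage means the noisy OCR data can only shape the projection, so the decoder's language ability is not disturbed before the cleaner conversations arrive.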

Results and Implications

LLaVAR substantially outperforms the original LLaVA and other models on four text-based Visual Question Answering (VQA) datasets, namely ST-VQA, OCR-VQA, TextVQA, and DocVQA, with accuracy improvements of up to 20%. Training at a higher input resolution further improves its ability to capture and interpret small text details, a task that is traditionally challenging for standard models because of their limited input resolution.
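A rough way to see why input resolution matters for reading small text is to count patch tokens and track how large a line of text remains after the image is resized to the encoder's input size. This sketch assumes a ViT with 14-pixel patches and square inputs of 224 and 336 pixels; these sizes, the example poster, and the helper names are illustrative assumptions, not figures taken from the results above.

```python
def num_patch_tokens(resolution, patch_size=14):
    """Number of visual tokens a ViT produces for a square input image."""
    grid = resolution // patch_size  # patches per side
    return grid * grid


def resized_text_height(text_px, image_px, resolution):
    """Height in pixels of a text line after resizing the image side
    from image_px to the encoder's input resolution."""
    return text_px * resolution / image_px


# Illustrative values: a 1000-pixel-tall poster with 20-pixel-tall text.
for res in (224, 336):
    print(res, num_patch_tokens(res), round(resized_text_height(20, 1000, res), 2))
# 224 256 4.48
# 336 576 6.72
```

At either size the text line is thinner than a single 14-pixel patch, which suggests why small print stays hard. Raising the resolution from 224 to 336 gives the same text 1.5 times as many pixels per dimension and describes the image with 2.25 times as many tokens.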

LLaVAR's strength lies in its targeted data augmentation and instruction-following training: OCR-derived data improves the model's ability to read text, and high-resolution inputs preserve the small details that text recognition depends on. This matters in real-world applications that combine textual and visual information, from navigating digital content to autonomous driving systems that must read traffic signs.

Future Directions

The progress reflected in LLaVAR opens the way to exploring higher input resolutions and more sophisticated visual encoders. Future work might also expand the datasets further or apply domain reweighting strategies to make better use of the data. Improving computational efficiency in high-resolution, multimodal settings remains another important direction for research.

Conclusion

By strengthening the text recognition capabilities of visual instruction-tuned models through large-scale data collection and targeted model adaptations, LLaVAR represents a meaningful step forward in vision-language modeling. The paper highlights both the current limitations and the avenues for improvement, and it offers a practical blueprint for future models with better visual-textual comprehension.