Introduction

Research on vision-language (VL) tasks has grown rapidly alongside progress in large language models (LLMs). Building on that momentum, researchers have developed multimodal models that add image processing to architectures originally devised for text. A common approach connects a pre-trained LLM to an image encoder through trainable modules that combine visual features with text embeddings. These systems show strong vision and language understanding, but they typically handle understanding and generation separately, which leads to inconsistent representations and makes it hard to apply a uniform training objective. This paper introduces an approach that processes visual and textual content together in a single unified framework, covering both understanding and generation.

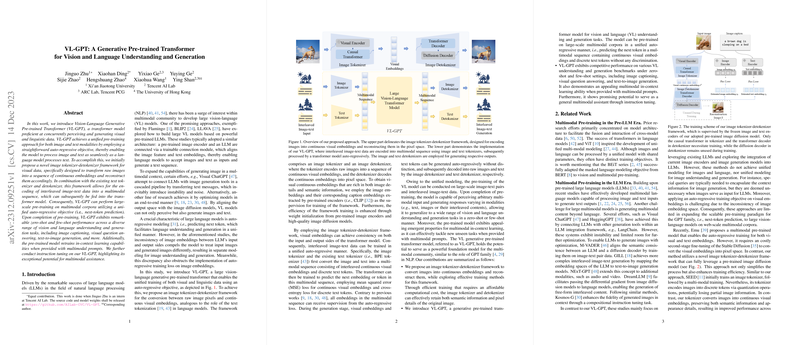

VL-GPT Architecture

The Vision-Language Generative Pre-trained Transformer (VL-GPT) addresses this split between understanding and generation with an image tokenizer-detokenizer framework that converts images into continuous embeddings that can be combined with text sequences. The image tokenizer transforms a raw image into a sequence of embeddings, much as text is tokenized, and the detokenizer reconstructs an image from that sequence. Supervision from pre-trained models makes these continuous embeddings rich in both visual detail and semantic information. During pre-training, the embeddings are interleaved with text tokens in a single multimodal sequence that is fed to the transformer for auto-regressive learning. As a result, VL-GPT can both understand and generate visual and language content in tasks such as image captioning, visual question answering, and text-to-image synthesis.
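To make the idea of an interleaved multimodal sequence more concrete, here is a minimal sketch in plain Python. It is not VL-GPT's implementation: the embedding width, the number of embeddings per image, the `[IMG]` and `[/IMG]` boundary markers, and the functions `embed_text_token`, `toy_image_tokenizer`, and `build_interleaved_sequence` are all assumptions made for illustration, and the "tokenizer" here only averages pixel chunks instead of running a learned encoder.

```python
import random
from typing import List, Tuple

EMBED_DIM = 8          # hypothetical embedding width
EMBEDS_PER_IMAGE = 4   # hypothetical number of embeddings per image

Vector = List[float]
Position = Tuple[str, Vector]  # ("text" or "image", embedding)


def embed_text_token(token: str) -> Vector:
    """Toy stand-in for a text embedding table: deterministic per token."""
    rng = random.Random(token)
    return [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)]


def toy_image_tokenizer(pixels: List[float]) -> List[Vector]:
    """Toy stand-in for the image tokenizer: split the pixels into
    EMBEDS_PER_IMAGE chunks and map each chunk to one continuous vector."""
    chunk = max(1, len(pixels) // EMBEDS_PER_IMAGE)
    vectors = []
    for i in range(EMBEDS_PER_IMAGE):
        part = pixels[i * chunk:(i + 1) * chunk] or [0.0]
        mean = sum(part) / len(part)
        vectors.append([mean] * EMBED_DIM)
    return vectors


def build_interleaved_sequence(segments) -> List[Position]:
    """Flatten text strings and images into one sequence of embeddings."""
    sequence: List[Position] = []
    for segment in segments:
        if isinstance(segment, str):
            for token in segment.split():
                sequence.append(("text", embed_text_token(token)))
        else:
            # Boundary markers are an assumption made for this sketch.
            sequence.append(("text", embed_text_token("[IMG]")))
            sequence.extend(("image", v) for v in toy_image_tokenizer(segment))
            sequence.append(("text", embed_text_token("[/IMG]")))
    return sequence


image = [0.1 * i for i in range(16)]  # fake 16-pixel image
sequence = build_interleaved_sequence(["a photo of", image, "a cat"])
kinds = [kind for kind, _ in sequence]
print(kinds)
```

The point of the sketch is only that text and image positions end up in one ordered sequence of same-width vectors, which is what allows a single transformer to model them auto-regressively.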

Unified Pre-training and In-Context Learning

A key strength of VL-GPT is its unified auto-regressive pre-training, which aligns visual and textual data within a single model. After pre-training, the model generalizes to a range of vision-language tasks and shows in-context learning ability when given multimodal prompts. This property helps it tackle new tasks with minimal additional input or instruction. Further instruction tuning shows the model's potential as a general multimodal assistant that follows instructions and generates contextually appropriate responses.
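As a rough illustration of what a multimodal prompt for in-context learning might look like, the sketch below assembles a few image-caption demonstrations followed by a query image. The prompt layout, the `Caption:` prefix, the file names, and the function `build_few_shot_prompt` are hypothetical and are not taken from the paper; image entries are placeholders for real image data.

```python
from typing import List, Tuple, Union

ImageRef = Tuple[str, str]    # ("image", path), a placeholder for real pixels
Segment = Union[str, ImageRef]


def build_few_shot_prompt(
    examples: List[Tuple[str, str]],
    query_image: str,
    instruction: str = "Describe the image.",
) -> List[Segment]:
    """Interleave (image, caption) demonstrations, then end with the query image."""
    prompt: List[Segment] = [instruction]
    for image_path, caption in examples:
        prompt.append(("image", image_path))
        prompt.append(f"Caption: {caption}")
    prompt.append(("image", query_image))
    prompt.append("Caption:")  # left open for the model to complete
    return prompt


few_shot = build_few_shot_prompt(
    examples=[
        ("dog.png", "A dog running on grass."),
        ("boat.png", "A boat on a lake."),
    ],
    query_image="query.png",
)
zero_shot = build_few_shot_prompt(examples=[], query_image="query.png")

for segment in few_shot:
    print(segment)
```

Passing an empty `examples` list gives the zero-shot form of the same prompt, so the only difference between the two settings in this sketch is how many demonstrations precede the query.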

Model Evaluation and Potential

Evaluated across a range of benchmarks, VL-GPT is competitive on both understanding and generation tasks under zero-shot, few-shot, and prompted settings. It performs well against other multimodal models, which supports the value of the unified training approach. These results suggest that VL-GPT could serve as a foundation model for multimodal work, much as GPT models have become a touchstone in NLP. Further exploration is still needed, particularly on scaling the model and verifying its effectiveness on larger datasets. VL-GPT could prove an important step towards more general AI systems that integrate vision and language.