Analysis of OneLLM: A Unified Framework for Multimodal Language Alignment

The paper "OneLLM: One Framework to Align All Modalities with Language" presents a unified approach to multimodal large language models (MLLMs). It introduces OneLLM, a single model that aligns eight modalities with language: image, audio, video, point cloud, depth map, normal map, IMU, and fMRI. Rather than attaching a separate encoder to each modality, the paper proposes a shared architecture and a staged training method that bring all of them into one language model.

Key Contributions

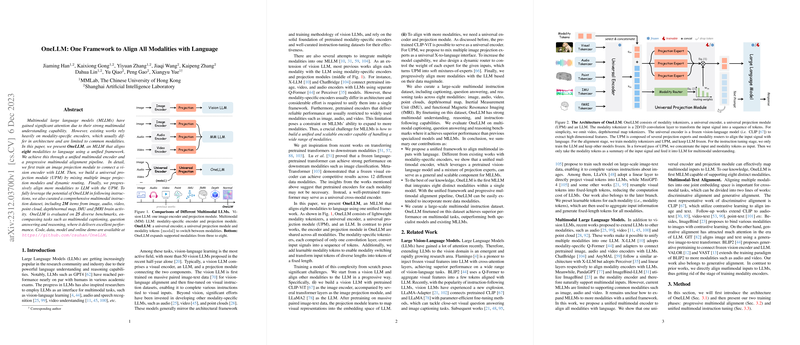

OneLLM pairs a single universal multimodal encoder and a unified projection module (UPM) with an LLM, so every modality reaches the language model through the same path. It builds on pretrained models, CLIP-ViT for the encoder and LLaMA2 for the LLM, and performs robustly across a varied set of benchmarks.
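To make the data flow concrete, here is a toy, plain-Python sketch of the tokenizer, shared encoder, projection, and LLM-input chain. It is an illustration under stated assumptions, not OneLLM's implementation. All sizes and the names `patchify` and `encode_modality` are hypothetical. The real tokenizer is a learned convolution, the encoder is a frozen CLIP-ViT transformer, and the projection is the UPM, all replaced here by random linear maps.

```python
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) @ (k x m) -> (n x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def rand_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for learned or pretrained parameters."""
    return [[rng.gauss(0.0, 0.02) for _ in range(cols)] for _ in range(rows)]


def patchify(signal: List[float], patch: int) -> Matrix:
    """Split a 1D signal into non-overlapping patches; followed by a linear map,
    this equals a convolution whose stride equals its kernel size."""
    return [signal[i:i + patch] for i in range(0, len(signal) - patch + 1, patch)]


rng = random.Random(0)
PATCH, D_ENC, D_LLM = 4, 8, 16  # hypothetical toy sizes

# Hypothetical stand-ins: random linear maps replace the per-modality conv
# tokenizer, the frozen CLIP-ViT encoder, and the UPM.
tokenizer_w = rand_matrix(PATCH, D_ENC, rng)  # modality-specific
encoder_w = rand_matrix(D_ENC, D_ENC, rng)    # shared across all modalities
projector_w = rand_matrix(D_ENC, D_LLM, rng)  # shared, maps into LLM width


def encode_modality(signal: List[float]) -> Matrix:
    """Tokenize -> shared encoder -> projection, giving LLM-sized prefix tokens."""
    tokens = matmul(patchify(signal, PATCH), tokenizer_w)  # (n_patches, D_ENC)
    features = matmul(tokens, encoder_w)                   # (n_patches, D_ENC)
    return matmul(features, projector_w)                   # (n_patches, D_LLM)


signal = [rng.random() for _ in range(32)]    # toy 1D sensor stream
prefix = encode_modality(signal)
text_embeddings = rand_matrix(5, D_LLM, rng)  # hypothetical embedded prompt
llm_input = prefix + text_embeddings          # modality tokens, then text tokens
print(len(prefix), len(prefix[0]), len(llm_input))  # 8 16 13
```

Only `tokenizer_w` would differ between modalities. `encoder_w` and `projector_w` are reused for every input, and this reuse is the property the single-encoder design depends on.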

Architecture

The architecture is characterized by:

- Lightweight Modality Tokenizers: Each modality has its own small tokenizer (a single convolution layer in the paper) that converts raw input into a token sequence. Keeping this modality-specific part minimal matters because input formats vary widely across modalities, while everything downstream is shared.

- Universal Encoder and Projection Module: A frozen CLIP-ViT serves as the universal encoder for all modalities, which relies on the transferability of its pretrained representations. The UPM consists of several projection experts whose outputs are mixed by a dynamic modality router, so different modalities can draw on different experts.

- LLM Integration: LLaMA2 provides the language understanding and generation needed to align visual, auditory, and other sensor data with language.
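The routing idea can be illustrated with a generic soft mixture-of-experts projection. A router scores each token, a softmax turns the scores into weights, and the output is the weighted sum of the experts' projections. This is a minimal plain-Python sketch under assumptions, not OneLLM's code. The class name `SoftRoutedProjection`, the linear experts, the per-token linear router, and all sizes are hypothetical, and the paper's experts and router may be structured differently.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Compute x @ w for w of shape (len(x), out_dim)."""
    return [sum(xi * w[i][j] for i, xi in enumerate(x)) for j in range(len(w[0]))]


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


class SoftRoutedProjection:
    """Hypothetical soft-routed projection: K linear experts mixed by a router."""

    def __init__(self, d_in: int, d_out: int, num_experts: int, seed: int = 0):
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> Matrix:
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.experts = [init(d_in, d_out) for _ in range(num_experts)]
        self.router = init(d_in, num_experts)  # one score per expert

    def routing_weights(self, token: Vector) -> Vector:
        """Per-token expert weights that sum to 1."""
        return softmax(matvec(self.router, token))

    def __call__(self, token: Vector) -> Vector:
        weights = self.routing_weights(token)
        outputs = [matvec(expert, token) for expert in self.experts]
        d_out = len(outputs[0])
        # Weighted sum of the expert outputs, one output dimension at a time.
        return [sum(w * out[j] for w, out in zip(weights, outputs)) for j in range(d_out)]


upm = SoftRoutedProjection(d_in=8, d_out=16, num_experts=3)
token = [0.5] * 8
weights = upm.routing_weights(token)
projected = upm(token)
print(len(projected), round(sum(weights), 6))  # 16 1.0
```

The weights are soft rather than a hard top-1 choice, so every expert contributes to every token. A router can still learn to favor different experts for different modalities while the projection itself stays shared.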

Multimodal Alignment Strategy

The authors use a progressive alignment strategy. Training starts with image-text data and then adds the other modalities in later stages. This ordering stabilizes the shared representations and keeps the model from becoming biased toward the modalities with the most data, so that new modalities can be aligned without degrading what was learned for earlier ones.
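The staging can be sketched as a small schedule builder. The stage grouping below (image first; then video, audio, and point cloud; then depth, normal, IMU, and fMRI) reflects our reading of the paper and should be checked against it. The `replay_previous` flag mixes data from already-aligned modalities back into later stages. It is a hypothetical knob added to illustrate the bias concern, not a documented OneLLM setting.

```python
from typing import Dict, List

# Hypothetical stage grouping, following the paper's image-first order.
STAGES: List[List[str]] = [
    ["image"],
    ["video", "audio", "point_cloud"],
    ["depth", "normal", "imu", "fmri"],
]


def build_schedule(stages: List[List[str]], replay_previous: bool = True) -> List[Dict[str, List[str]]]:
    """Return, per stage, the newly added modalities and the modalities trained on.

    `replay_previous` is a hypothetical knob: when True, data from already-aligned
    modalities is mixed back in so the shared projection is not pulled toward
    the newcomers only.
    """
    aligned: List[str] = []
    schedule = []
    for new in stages:
        overlap = set(new) & set(aligned)
        if overlap:
            raise ValueError(f"modalities aligned twice: {sorted(overlap)}")
        train_on = aligned + new if replay_previous else list(new)
        schedule.append({"new": list(new), "train_on": train_on})
        aligned = aligned + new
    return schedule


for i, stage in enumerate(build_schedule(STAGES), start=1):
    print(f"stage {i}: add {stage['new']}, train on {stage['train_on']}")
```

With replay enabled, the last stage trains on all eight modalities. With replay disabled, each stage sees only its newcomers, which is where forgetting of earlier modalities would be the bigger concern.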

Instruction Tuning

The paper also curates a large multimodal instruction dataset (about 2M items across the eight modalities). Instruction tuning on this dataset substantially improves the model's ability to caption, answer questions, and reason over multimodal inputs, and lets OneLLM interact through all of its supported modalities.

Experimental Evaluation

OneLLM is evaluated on 25 benchmarks spanning tasks such as VQA, captioning, and reasoning. It performs competitively and often surpasses existing specialist and generalist multimodal models.

- Vision Tasks: The model achieves strong VQA and image captioning results, approaching some vision-specific models.

- Audio and Video Tasks: OneLLM handles both audio-text and video-text tasks effectively, which shows that the shared pipeline extends to temporal and auditory inputs.

- Less-Explored Modalities: Results on point cloud, depth/normal map, IMU, and fMRI data demonstrate OneLLM's potential in less-explored areas such as motion analysis and brain activity interpretation.

Implications and Future Work

OneLLM's design points to a scalable direction for MLLM research, in which a single framework could accommodate still more modalities. Because shared components replace per-modality encoders, the design reduces the need for modality-specific architectures and could simplify both future research and deployment.

However, challenges remain, notably the need for large, high-quality datasets for non-visual modalities and better methods for handling high-resolution and long-sequence data. Future work might focus on fine-grained understanding and on supporting more modalities with minimal additional resources.

In sum, OneLLM represents a significant step toward versatile, unified models for comprehensive multimodal understanding, opening avenues for more integrated AI systems that can handle complex real-world applications.