A Formal Analysis of Ola: Towards an Omni-Modal LLM

The paper "Ola: Pushing the Frontiers of Omni-Modal LLM with Progressive Modality Alignment" describes how to build an efficient omni-modal LLM. At its core, Ola uses a progressive modality alignment strategy to handle image, video, and audio data within a single model. This essay reviews the architecture and training method, summarizes the empirical results, and considers their implications.

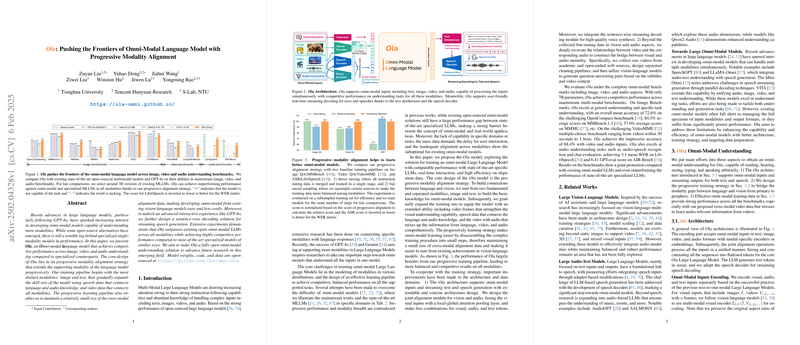

Overview of Ola's Architectural Design

The Ola architecture accepts input from four modalities: text, image, video, and audio. It uses a separate encoder for each non-text modality, such as OryxViT for visual inputs and Whisper-v3 for audio inputs, so that each input type is processed by a model suited to it. The vision and audio encoders feed joint alignment modules, and visual features are compressed via Local-Global Attention Pooling before they reach the language model. This design lets the model reason over combined inputs rather than treating each modality in isolation.
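The idea behind attention-based pooling can be illustrated with a short sketch. The code below is an assumption-laden illustration, not the paper's implementation: it averages each 2x2 region to obtain a "global" feature (a stand-in for whatever downsampling the authors use), scores each local feature against that global feature with a hypothetical linear layer `w`, and returns the softmax-weighted sum of each region. The function name, the 2x2 region size, and the linear scorer are all illustrative choices.

```python
import numpy as np

def local_global_attention_pool(feat, w, b=0.0):
    """Downsample an (H, W, C) feature map by 2x per spatial dimension.

    feat: array of shape (H, W, C) with even H and W.
    w:    array of shape (2 * C,), hypothetical linear scoring weights.
    b:    scalar bias for the hypothetical scorer.
    Returns (pooled, weights) with shapes (H/2, W/2, C) and (H/2, W/2, 4).
    """
    H, W, C = feat.shape
    if H % 2 or W % 2:
        raise ValueError("H and W must be even")
    # Group pixels into non-overlapping 2x2 regions: (H/2, W/2, 4, C).
    regions = (
        feat.reshape(H // 2, 2, W // 2, 2, C)
        .transpose(0, 2, 1, 3, 4)
        .reshape(H // 2, W // 2, 4, C)
    )
    # "Global" feature per region: the region mean (assumed stand-in).
    global_feat = regions.mean(axis=2, keepdims=True)
    # Pair every local feature with its region's global feature.
    paired = np.concatenate(
        [regions, np.broadcast_to(global_feat, regions.shape)], axis=-1
    )
    # Score each of the 4 positions, then softmax within the region.
    scores = paired @ w + b
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Weighted sum of local features gives the pooled feature.
    pooled = (weights[..., None] * regions).sum(axis=2)
    return pooled, weights

# Usage: a 4x4 map with 3 channels becomes a 2x2 map with 3 channels.
rng = np.random.default_rng(0)
feat = rng.normal(size=(4, 4, 3))
pooled, weights = local_global_attention_pool(feat, rng.normal(size=6))
print(pooled.shape, weights.shape)
```

With all-zero scoring weights the softmax is uniform and the function reduces to plain 2x2 average pooling, which makes the learned weighting easy to compare against a fixed baseline.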

Progressive Modality Alignment Strategy

The model is trained in progressive stages rather than on all modalities at once. The first stage aligns text and images. Later stages add video data and then audio features, so that each new modality is introduced on top of capabilities the model has already acquired. This gradual fusion aligns data distributions that differ substantially from one another into a single model. According to the paper, the staged approach also keeps costs down, because it builds on an existing vision-language model, and it improves performance by introducing modalities in a measured sequence.
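The staged schedule described above can be written down as a small configuration sketch. The stage names, the `Stage` class, and the `newly_added` helper below are hypothetical and chosen only for illustration; the sketch encodes the one property the text states, namely that each stage extends the modalities covered by the previous one.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str               # hypothetical label for the stage
    modalities: frozenset   # modalities seen during this stage

# Assumed ordering, following the text: text and image first,
# then video, then audio.
STAGES = [
    Stage("text-image", frozenset({"text", "image"})),
    Stage("image-video", frozenset({"text", "image", "video"})),
    Stage("video-audio", frozenset({"text", "image", "video", "audio"})),
]

def newly_added(stages):
    """Return (stage name, modalities introduced at that stage) pairs.

    Raises ValueError if a stage drops a modality seen earlier,
    since that would not be a progressive schedule.
    """
    seen = frozenset()
    result = []
    for stage in stages:
        if not seen <= stage.modalities:
            raise ValueError(f"stage {stage.name!r} drops a modality")
        result.append((stage.name, sorted(stage.modalities - seen)))
        seen = stage.modalities
    return result

for name, added in newly_added(STAGES):
    print(name, added)
# text-image ['image', 'text']
# image-video ['video']
# video-audio ['audio']
```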

Empirical Evaluation and Results

Ola is evaluated across image, video, and audio benchmarks. On image benchmarks such as MMBench-1.1 and OCRBench, it surpasses existing vision-LLMs, which indicates strong visual comprehension. On video benchmarks such as VideoMME, it performs well on multiple-choice tasks involving long video content. Its audio capabilities are evaluated on LibriSpeech and AIR-Bench, where it shows strong results on automatic speech recognition and audio question-answering metrics, in some cases rivaling specialist models.
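Speech recognition results on LibriSpeech are conventionally reported as word error rate (WER): the word-level edit distance between the reference transcript and the model output, divided by the number of reference words. The sketch below is a generic implementation of that metric, assuming whitespace tokenization and no text normalization; it is not the paper's evaluation script, and the function name is illustrative.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the first i-1 reference
    # words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1        # reference word missing
            insertion = curr[j - 1] + 1   # extra hypothesis word
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)

# One substituted word out of three reference words.
print(word_error_rate("a b c", "a x c"))
```

Lower is better, and the value can exceed 1.0 when the hypothesis contains many inserted words.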

Theoretical and Practical Implications

Ola has implications for both the theory of multimodal machine learning and its practical applications. Theoretically, its multi-stage modality alignment suggests a way to fuse complex data distributions without sacrificing performance on any single modality. Practically, its real-time streaming capabilities and open-source availability make it relevant to sectors that need to process text, visual, and auditory cues simultaneously, such as autonomous systems and interactive AI assistants.

Prospects for Future Research

Building on this work, future research could further optimize multi-stage modality learning and extend omni-modal models to areas such as real-time interactive systems and augmented reality. How well models like Ola scale to increasingly complex data and varied real-world settings also remains an open question.

In conclusion, the paper's contribution is a unified model that performs well on individual modalities while also handling their interplay. Ola represents a significant step towards comprehensive AI systems capable of understanding and interacting across diverse forms of data.