An Analysis of ILLUME: Integrating Vision and Language in Unified Multimodal LLMs

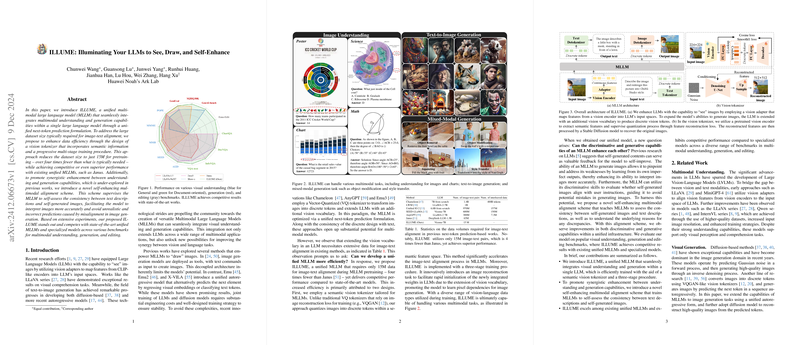

The paper "ILLUME: Illuminating Your LLMs to See, Draw, and Self-Enhance" introduces a sophisticated approach to optimizing Multimodal LLMs (MLLMs). The authors present ILLUME, a framework that notably enhances the efficiency and synergy between multimodal understanding and generation tasks within a single model. By adopting a novel vision tokenizer and a progressive multi-stage training strategy, ILLUME achieves impressive data efficiency and competitive performance against state-of-the-art models.

Key Contributions

- Data Efficiency and Semantic Vision Tokenizer: ILLUME reduces the pretraining dataset to 15 million image-text pairs, substantially fewer than comparable unified MLLMs require. This is achieved primarily by a vision tokenizer that incorporates semantic information, which makes the alignment between image and text easier to learn. The experiments show that this tokenizer accelerates training convergence.

- Progressive Training Approach: The method involves a three-stage training process:

  - Visual Embedding Initialization: Adapts visual features to LLM input spaces using image-to-text pairs, focusing on generating accurate visual representations.
  - Unified Image-Text Alignment: Unfreezes the model to learn from a diverse range of multimodal data.
  - Supervised Fine-tuning: Fine-tunes the model with task-specific data to handle a variety of visual and text generation tasks.

- Self-Enhancing Multimodal Alignment Scheme: This novel alignment scheme introduces a self-assessment process that allows the MLLM to evaluate its self-generated content, enhancing its ability to interpret images and improve generative predictions. This bidirectional enhancement between understanding and generation is under-explored in prior models.
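The three-stage process described above can be pictured as a schedule of which components are trainable at each stage. The following is only a minimal sketch under stated assumptions: the component names (`vision_adapter`, `visual_token_embeddings`, `llm_backbone`) are hypothetical, and the choice to keep the LLM backbone frozen in the first stage is inferred from the statement that the second stage "unfreezes the model", not taken from the paper's implementation.

```python
from dataclasses import dataclass

# Hypothetical component names for illustration; the paper's actual
# module layout may differ.
COMPONENTS = ("vision_adapter", "visual_token_embeddings", "llm_backbone")


@dataclass(frozen=True)
class Stage:
    name: str
    data: str
    trainable: frozenset


# Assumption: stage 1 trains only the parts that map visual features into
# the LLM input space; stages 2 and 3 train every listed component.
STAGES = (
    Stage("visual_embedding_initialization", "image-to-text pairs",
          frozenset({"vision_adapter", "visual_token_embeddings"})),
    Stage("unified_image_text_alignment", "diverse multimodal data",
          frozenset(COMPONENTS)),
    Stage("supervised_fine_tuning", "task-specific data",
          frozenset(COMPONENTS)),
)


def frozen_components(stage):
    """Return the components that stay frozen during the given stage."""
    return sorted(set(COMPONENTS) - stage.trainable)


def describe_schedule(stages=STAGES):
    """Return one human-readable line per training stage."""
    lines = []
    for index, stage in enumerate(stages, start=1):
        frozen = frozen_components(stage) or ["(none)"]
        lines.append(
            f"Stage {index}: {stage.name} | data: {stage.data} | "
            f"frozen: {', '.join(frozen)}"
        )
    return lines


if __name__ == "__main__":
    for line in describe_schedule():
        print(line)
```

In a real training loop, a schedule like this would typically drive which parameters receive gradient updates at each stage; the sketch only records the plan and does not train anything.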
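The self-enhancing scheme described above can be sketched as a data-building loop: the model generates an image for each prompt, assesses whether the result matches the prompt, and the assessments are collected as additional training examples. This is an illustrative sketch, not the authors' pipeline. The `generate` and `assess` callables, the `AssessmentExample` record, and the toy stand-ins are all hypothetical, and how the assessments are actually produced and fed back into training is an assumption here.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class AssessmentExample:
    prompt: str        # text the image was generated from
    image: str         # placeholder for the generated image (e.g. its tokens)
    assessment: str    # written judgement of image-text consistency
    consistent: bool   # whether the image was judged to match the prompt


def build_self_assessment_data(
    prompts: List[str],
    generate: Callable[[str], str],
    assess: Callable[[str, str], Tuple[bool, str]],
) -> List[AssessmentExample]:
    """Generate an image per prompt, assess it, and collect the results."""
    examples = []
    for prompt in prompts:
        image = generate(prompt)
        consistent, rationale = assess(prompt, image)
        examples.append(AssessmentExample(prompt, image, rationale, consistent))
    return examples


# Toy stand-ins so the sketch runs without a model (purely hypothetical).
def toy_generate(prompt: str) -> str:
    """Pretend to draw the prompt but drop its last word to simulate an error."""
    words = prompt.split()
    return " ".join(words[:-1]) if len(words) > 1 else prompt


def toy_assess(prompt: str, image: str) -> Tuple[bool, str]:
    """Judge consistency by checking which prompt words the 'image' lacks."""
    missing = [word for word in prompt.split() if word not in image.split()]
    if missing:
        return False, f"missing: {', '.join(missing)}"
    return True, "image matches the prompt"


if __name__ == "__main__":
    for example in build_self_assessment_data(
        ["a red cube", "cat"], toy_generate, toy_assess
    ):
        print(example)
```

Keeping both consistent and inconsistent cases in the collected data is a design choice in this sketch: the point of self-assessment is that the model learns to recognize its own failures as well as its successes.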

Evaluation and Results

The model was evaluated on numerous benchmarks covering multimodal understanding, generation, and editing. ILLUME demonstrates comparable or superior capabilities relative to both state-of-the-art specialized and unified models, and it does so with a markedly smaller number of training samples. On understanding benchmarks such as SEED and MMMU, ILLUME performs strongly. On text-to-image generation benchmarks, it attains FID scores (where lower is better) that are comparable to or better than those of existing high-performance models, indicating that it can produce visually coherent and contextually accurate images.
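For reference, FID (Fréchet Inception Distance) compares the statistics of features extracted from real and generated images, and lower values indicate closer distributions. The standard definition is given below; the symbols are the usual ones rather than notation from the ILLUME paper, with $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denoting the mean and covariance of Inception features for real and generated images respectively.

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$

Both terms are zero when the two feature distributions have identical means and covariances, which is why a model that "matches" a baseline on FID has an equal score and one that improves on it has a lower score.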

Theoretical Implications and Future Directions

Integrating vision and language in a single model has notable theoretical implications. ILLUME's self-assessing capability opens a pathway for models that improve through feedback loops on their own outputs, potentially paving the way for autonomous self-improvement in AI systems. This capability also hints at a future where models can learn effectively from fewer samples, a vital trait for sustainable AI development.

Future work could extend the framework to additional modalities such as audio and 3D data and to higher-resolution outputs. Moreover, evolving the self-enhancement strategy to incorporate more sophisticated evaluation criteria, including aesthetic considerations, could significantly improve how well generated images align with human preferences.

Conclusion

ILLUME represents a substantive step forward in the domain of unified MLLMs. By blending novel tokenization techniques with efficient training methodologies and self-enhancing capabilities, the framework sets a new standard for multimodal interaction within LLMs. Its contribution lies not only in current achievements but also in setting a foundation for future innovations that bridge the gap between various data modalities in LLMs.