An Overview of AlignBench: Benchmarking Alignment of Chinese LLMs

The paper under review introduces AlignBench, a multi-dimensional benchmark designed to evaluate the alignment of LLMs on Chinese-language queries. It addresses a gap in LLM evaluation, namely the lack of benchmarks targeting the alignment of Chinese LLMs, and focuses on how effective these models are as instruction-tuned AI assistants.

Core Contributions

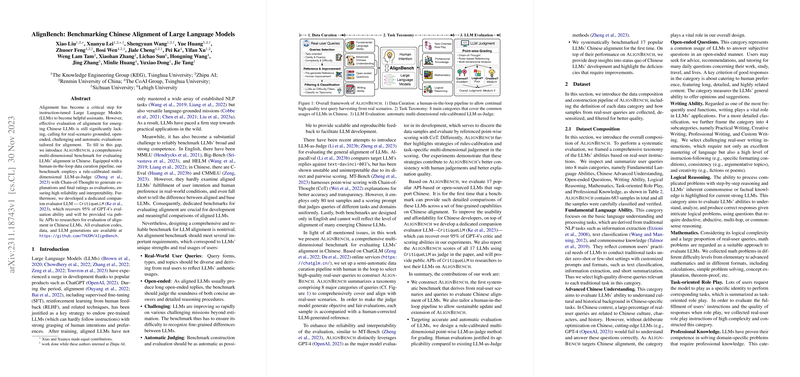

AlignBench covers real-world user scenarios in eight categories: fundamental language tasks, advanced Chinese understanding, open-ended questions, logical reasoning, mathematics, task-oriented role play, professional knowledge, and writing ability. Together, these categories address the needs that arise from the complexity of the Chinese language and its diverse user base.

AlignBench distinguishes itself through multi-dimensional scoring based on a rule-calibrated, point-wise, LLM-as-Judge methodology. The evaluation dimensions vary with the task type and include factual accuracy, user satisfaction, logical coherence, and creativity, giving a more nuanced evaluation mechanism than prior benchmarks have offered.
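To make the scoring approach concrete, the following is a minimal sketch of point-wise, multi-dimensional judging, not the paper's actual prompts or code. The task-to-dimension mapping, the 1 to 10 scale, the rule text, the JSON reply format, and the names `DIMENSIONS_BY_TASK`, `build_judge_prompt`, and `parse_judge_reply` are all assumptions made for illustration; only the dimension names are taken from the description above.

```python
import json
import re

# Hypothetical mapping from task type to evaluation dimensions.
# The dimension names come from the overview; the grouping is an assumption.
DIMENSIONS_BY_TASK = {
    "mathematics": ["factual accuracy", "user satisfaction", "logical coherence"],
    "writing ability": ["user satisfaction", "logical coherence", "creativity"],
}


def build_judge_prompt(task_type, question, answer, reference=None):
    """Assemble a point-wise grading prompt for a single answer."""
    dims = DIMENSIONS_BY_TASK[task_type]
    lines = [
        "You are grading one assistant answer on its own (no comparison).",
        f"Score each dimension from 1 to 10: {', '.join(dims)}.",
        # Illustrative stand-in for a calibration rule; the wording is assumed.
        "Rule: an answer with factual errors must not receive a high overall score.",
        'Reply with a JSON object whose keys are the dimensions plus "overall".',
        f"Question: {question}",
    ]
    if reference is not None:
        lines.append(f"Reference answer: {reference}")
    lines.append(f"Answer to grade: {answer}")
    return "\n".join(lines)


def parse_judge_reply(reply, task_type):
    """Extract per-dimension and overall integer scores from the judge's reply."""
    match = re.search(r"\{.*\}", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError("no JSON object found in judge reply")
    scores = json.loads(match.group(0))
    expected = DIMENSIONS_BY_TASK[task_type] + ["overall"]
    missing = [key for key in expected if key not in scores]
    if missing:
        raise ValueError(f"missing scores: {missing}")
    return {key: int(scores[key]) for key in expected}
```

In this sketch the judge model itself is left out: the prompt would be sent to whichever evaluator is in use, and the reply passed to `parse_judge_reply`. Keeping the dimensions per task type in one table makes it easy to see which criteria apply to which category.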

The benchmark is built on a human-in-the-loop data curation pipeline, which allows continual updates that reflect scenarios drawn from real-world user interactions. It also relies on CritiqueLLM, a dedicated Chinese evaluator LLM shown to closely replicate GPT-4’s evaluative capabilities, which provides a cost-effective and readily accessible alternative for large-scale LLM evaluation.

Methodology and Findings

The paper documents evaluations of 17 LLMs, showing that AlignBench can distinguish differences in alignment among popular models that support Chinese. Notably, it reports that models often struggle with logical reasoning and mathematics. However, some Chinese LLMs perform on par with, or better than, their English-centric counterparts on Chinese-specific tasks, which suggests that cultural and regional tuning improves performance on such tasks.

Moreover, the evaluation shows that GPT-4 and CritiqueLLM maintain high agreement with human evaluations. This agreement is attributed largely to the multi-dimensional criteria, which counter biases such as verbosity bias that can skew evaluations of LLM outputs.
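As an illustration of how agreement between a judge model and human raters might be quantified, here is a small sketch that computes the Pearson correlation between two lists of scores for the same answers. The choice of Pearson correlation and the function name `pearson` are assumptions; this overview does not state which agreement metrics the paper uses.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equally long lists of scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of co-deviations and of squared deviations from each mean.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)
```

A value close to 1 would indicate that the judge ranks and scales answers much as the human raters do; values near 0 would indicate little linear agreement.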

Implications and Future Work

This paper underscores the need for tailored benchmarks like AlignBench to assess LLMs accurately given their increasing deployment for practical applications. Such comprehensive benchmarks ensure that LLMs are evaluated not merely on knowledge retrieval but also on alignment with user intention and contextual appropriateness.

The research opens avenues for future work on enhancing LLMs’ reasoning capabilities, a current limitation for many models, particularly on complex logic and mathematical tasks. It also suggests that hybrid approaches, which integrate factual verification systems with LLM evaluation, could handle reference-free or open-ended queries more effectively.

In conclusion, AlignBench offers a methodologically rigorous, multi-dimensional framework for evaluating Chinese LLMs that addresses both general and Chinese-specific challenges in alignment evaluation. The benchmark supports the development of LLMs that are not only linguistically capable but also closely aligned with the nuanced demands of user interactions across diverse linguistic settings.