Benchmarking Chinese Knowledge Rectification in LLMs

The paper "Benchmarking Chinese Knowledge Rectification in LLMs" by Tianhe Lu et al. addresses a critical aspect of LLMs: their reliability. Reliability matters even more when LLMs are deployed for language-specific tasks, such as handling Chinese idioms, proverbs, and classical texts, where cultural and linguistic nuances play a crucial role. The paper introduces a new benchmark, CKnowEdit, for evaluating how well knowledge editing techniques can rectify Chinese knowledge in LLMs.

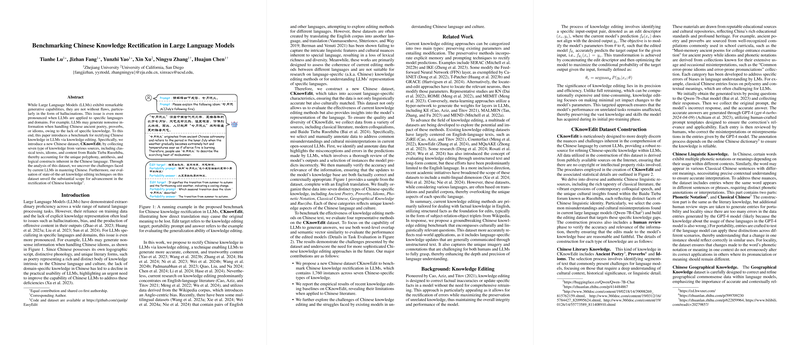

Overview and Dataset Construction

The primary contribution of this work is CKnowEdit, a carefully curated dataset covering seven categories of Chinese knowledge: Ancient Poetry, Proverbs, Idioms, Phonetic Notation, Classical Chinese, Geographical Knowledge, and content from Ruozhiba, a Baidu Tieba forum known for puns and deliberately tricky questions. Data sources include classical texts, contemporary colloquialisms, and user-generated posts from Baidu Tieba, giving the benchmark a broad spectrum of Chinese linguistic phenomena.

The dataset is designed to expose and rectify common misunderstandings and cultural misinterpretations in current LLMs. The authors collected responses from the Qwen-7B-Chat model, identified the inaccurate ones, and used GPT-4 to generate corrected responses. Human annotators then verified these corrections for both factual and contextual accuracy.
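
The construction pipeline described above can be sketched as a filter over candidate questions. This is a minimal illustration under stated assumptions, not the authors' code: the `EditRecord` schema, the `build_records` helper, and the callables standing in for the base model (Qwen-7B-Chat in the paper), the error check, the GPT-4 drafting step, and the human review are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# The seven knowledge categories covered by CKnowEdit.
CATEGORIES = frozenset({
    "Ancient Poetry", "Proverbs", "Idioms", "Phonetic Notation",
    "Classical Chinese", "Geographical Knowledge", "Ruozhiba",
})


@dataclass
class EditRecord:
    """Hypothetical record layout; field names are illustrative only."""
    category: str
    question: str
    original_answer: str   # base model's answer, judged inaccurate
    corrected_answer: str  # drafted correction that passed human review


def build_records(
    items: Iterable[Tuple[str, str]],            # (category, question) pairs
    base_model: Callable[[str], str],            # stand-in for Qwen-7B-Chat
    is_inaccurate: Callable[[str, str], bool],   # (question, answer) -> needs fixing?
    draft_correction: Callable[[str], str],      # stand-in for GPT-4 drafting
    human_approves: Callable[[str, str], bool],  # (question, correction) -> keep?
) -> List[EditRecord]:
    records: List[EditRecord] = []
    for category, question in items:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        answer = base_model(question)
        # Only questions the base model gets wrong become edit targets.
        if not is_inaccurate(question, answer):
            continue
        correction = draft_correction(question)
        # Annotators keep or discard each drafted correction.
        if human_approves(question, correction):
            records.append(EditRecord(category, question, answer, correction))
    return records
```

Passing the model calls and the review step in as functions keeps the sketch testable with stubs; in practice each would wrap an actual model endpoint or an annotation tool.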

Evaluation Methods

The paper evaluates several state-of-the-art knowledge editing techniques on the CKnowEdit dataset: FT-M, AdaLoRA, ROME, GRACE, and PROMPT. The evaluation metrics are Edit Success, Portability, Locality, and Fluency, which together measure how effectively the edits refine the model's knowledge.

- Edit Success measures the accuracy of the model's responses to the edited questions after editing.

- Portability assesses the model’s ability to apply corrected knowledge in new, related contexts.

- Locality checks that edits do not change the model's behavior on unrelated knowledge.

- Fluency evaluates the linguistic quality of the model's outputs.
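
The four metrics can be made concrete with a small sketch. It treats a model as a plain function from prompt to answer and uses exact string match as a stand-in scorer; the paper may score answers differently (for example with a similarity metric or a judge model), so treat `match_rate`, `edit_success`, `portability`, `locality`, and `fluency` as hypothetical helpers. The fluency score follows the weighted bigram/trigram entropy used in ROME-style editing evaluations, computed here over characters on the assumption that this suits unsegmented Chinese text.

```python
import math
from collections import Counter
from typing import Callable, Sequence, Tuple

Model = Callable[[str], str]  # prompt -> answer


def match_rate(model: Model, pairs: Sequence[Tuple[str, str]]) -> float:
    """Fraction of (prompt, expected) pairs answered exactly (whitespace-trimmed)."""
    if not pairs:
        return 0.0
    hits = sum(model(prompt).strip() == expected.strip() for prompt, expected in pairs)
    return hits / len(pairs)


def edit_success(edited: Model, edit_pairs: Sequence[Tuple[str, str]]) -> float:
    # Edited questions paired with their corrected answers.
    return match_rate(edited, edit_pairs)


def portability(edited: Model, related_pairs: Sequence[Tuple[str, str]]) -> float:
    # New questions whose answers should follow from the corrected knowledge.
    return match_rate(edited, related_pairs)


def locality(base: Model, edited: Model, unrelated_prompts: Sequence[str]) -> float:
    # Share of unrelated prompts whose answer is unchanged by the edit.
    if not unrelated_prompts:
        return 0.0
    unchanged = sum(base(p) == edited(p) for p in unrelated_prompts)
    return unchanged / len(unrelated_prompts)


def ngram_entropy(text: str, n: int) -> float:
    """Shannon entropy (bits) of the character n-gram distribution of text."""
    grams = [text[i:i + n] for i in range(len(text) - n + 1)]
    if not grams:
        return 0.0
    counts = Counter(grams)
    total = len(grams)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def fluency(text: str) -> float:
    # Mean of weighted bigram and trigram entropies (weights 2/3 and 4/3).
    weights = {2: 2 / 3, 3: 4 / 3}
    return sum(w * ngram_entropy(text, n) for n, w in weights.items()) / len(weights)
```

Because Locality compares the edited model against the original on prompts unrelated to the edit, a method that barely changes the model can score perfectly on it while failing Edit Success, which is why the metrics are reported together.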

Numerical Results

The results indicate that AdaLoRA and PROMPT generally outperform the other methods on Edit Success across knowledge types, and they are notably robust on Phonetic Notation and Classical Chinese, where context and cultural understanding are essential. Portability, however, remains a significant challenge: no method succeeds consistently across all knowledge types. FT-M, ROME, and GRACE preserve Locality particularly well, which matters because rectification should fix the targeted knowledge without degrading the model's overall performance.
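
The locality result for GRACE follows from how that method is built: it wraps one layer with a discrete codebook of keys, values, and deferral radii, and only overrides the layer's output when the incoming hidden state lies within the radius of its nearest stored key. The toy class below illustrates that mechanism with plain Python lists; `GraceStyleCodebook` is a hypothetical name, and the sketch omits GRACE's radius expansion and splitting when edits conflict.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def distance(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


class GraceStyleCodebook:
    """Toy key-value adaptor in the spirit of GRACE (hypothetical, simplified)."""

    def __init__(self, init_radius: float):
        self.init_radius = init_radius
        # Each entry is (key, value, deferral radius).
        self.entries: List[Tuple[List[float], List[float], float]] = []

    def add_edit(self, key: Vector, value: Vector) -> None:
        # Store the hidden state that triggered the error and its replacement.
        self.entries.append((list(key), list(value), self.init_radius))

    def __call__(self, hidden: Vector) -> List[float]:
        if not self.entries:
            return list(hidden)
        # Find the stored key closest to the incoming hidden state.
        nearest_key, value, radius = min(
            self.entries, key=lambda entry: distance(hidden, entry[0])
        )
        # Override only inside that key's deferral radius; every other input
        # passes through unchanged, which is what preserves locality.
        if distance(hidden, nearest_key) < radius:
            return list(value)
        return list(hidden)
```

Because unrelated inputs rarely land inside a stored radius, they pass through untouched; the same narrowness helps explain why such edits may not carry over to rephrased or related questions.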

Implications and Future Directions

The implications of this work are manifold. Practically, it points to an urgent need for more advanced, nuanced knowledge editing techniques tailored to non-English languages, particularly those as rich and contextually demanding as Chinese. The paper underscores the shortcomings of current methodologies, originally designed for English, in addressing the unique linguistic and cultural dimensions of Chinese.

From a theoretical perspective, this paper highlights the inadequacies in the generalization abilities of LLMs when dealing with culturally specific knowledge. The findings suggest that current models often misgeneralize or fail to port knowledge accurately in varied contexts, a problem that is likely exacerbated in languages with complex orthographic and phonological systems like Chinese.

Speculation on Future Developments

Future research could explore cross-linguistic knowledge transfer as a means of leveraging insights from languages with rich syntactic structures to improve overall model robustness and accuracy. There is also significant potential in developing multilingual datasets that go beyond mere translations, incorporating unstructured text and culturally nuanced entries to better inform model training and evaluation.

Additionally, integrating dialects and colloquialisms within these datasets could provide a more holistic representation, preparing models to handle diverse real-world applications. Iterative human-in-the-loop methodologies, combined with advanced machine learning approaches, could further refine the accuracy and applicability of LLMs across various languages and cultural contexts.

In conclusion, this paper makes a substantial contribution: it presents a new benchmark, CKnowEdit, and empirically demonstrates the complexities involved in rectifying Chinese knowledge in LLMs. This work provides a solid foundation for future efforts to improve the trustworthiness and functionality of LLMs when handling linguistically and culturally rich content.