Introducing Prometheus: Enabling Fine-grained Evaluation with Open-source LLMs

Overview

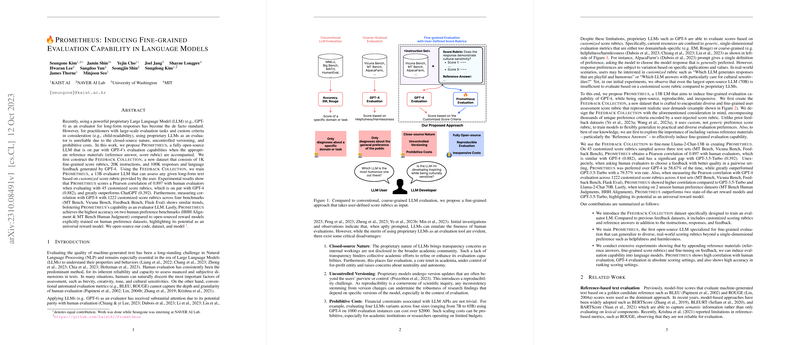

Recent advances in NLP have positioned LLMs as potent tools for evaluating machine-generated text. However, relying on proprietary models such as GPT-4 has drawbacks: they are closed-source, their versions change outside the user's control, and they are costly to run at scale. To address these concerns, the paper introduces Prometheus, a 13B-parameter open-source LLM designed to match GPT-4's evaluation capabilities when it is given appropriate reference materials. Trained on the newly compiled Feedback Collection dataset, Prometheus evaluates long-form responses against diverse custom score rubrics, which makes it a versatile and accessible evaluator.

The Feedback Collection Dataset

The Feedback Collection dataset is designed specifically to strengthen the fine-grained evaluation capabilities of LLMs. It consists of 1K fine-grained score rubrics, 20K instructions, and 100K responses with language feedback generated by GPT-4. Each instance bundles several components, including a customized score rubric and a reference answer, so that an evaluator can judge a response against explicit criteria rather than a generic notion of quality. Training on this data teaches a model to produce both detailed written feedback and a numeric score for each response.
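To make the shape of a dataset instance concrete, here is a minimal sketch in Python. The class names, field names, the 1-to-5 score levels, and the prompt layout are all assumptions made for illustration; they are not the dataset's actual schema or the prompt template used to train Prometheus.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ScoreRubric:
    # What is being judged, e.g. "Is the explanation factually accurate?"
    criteria: str
    # Hypothetical layout: one description per integer score level.
    score_descriptions: Dict[int, str]


@dataclass
class FeedbackInstance:
    # Inputs shown to the evaluator.
    instruction: str
    response: str
    rubric: ScoreRubric
    reference_answer: str
    # Targets the evaluator learns to produce.
    feedback: str
    score: int


def build_evaluator_input(instance: FeedbackInstance) -> str:
    """Join the input components into one prompt string (illustrative format only)."""
    levels = "\n".join(
        f"Score {level}: {text}"
        for level, text in sorted(instance.rubric.score_descriptions.items())
    )
    return (
        f"Instruction:\n{instance.instruction}\n\n"
        f"Response to evaluate:\n{instance.response}\n\n"
        f"Reference answer:\n{instance.reference_answer}\n\n"
        f"Score rubric:\n{instance.rubric.criteria}\n{levels}"
    )


# A made-up instance; none of this text comes from the real dataset.
example = FeedbackInstance(
    instruction="Explain why the sky appears blue.",
    response="The sky reflects the colour of the ocean.",
    rubric=ScoreRubric(
        criteria="Is the explanation scientifically accurate?",
        score_descriptions={
            1: "Entirely inaccurate.",
            2: "Mostly inaccurate.",
            3: "Partly accurate.",
            4: "Mostly accurate.",
            5: "Fully accurate.",
        },
    ),
    reference_answer="Air molecules scatter short (blue) wavelengths more strongly.",
    feedback="The answer repeats a common misconception and omits scattering.",
    score=1,
)

prompt = build_evaluator_input(example)
print(prompt)
```

Keeping the feedback and score out of the prompt string mirrors the split between what the evaluator reads and what it is expected to generate.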

Experimental Validation

Prometheus was tested across several benchmarks and compared against human evaluators, GPT-4, and ChatGPT. Key findings are as follows:

- Correlation with Human Evaluators: Prometheus achieves a Pearson correlation of 0.897 with human evaluators on 45 customized score rubrics, on par with GPT-4 (0.882) and far above ChatGPT (0.392).

- Feedback Quality: In pairwise comparisons, feedback generated by Prometheus is preferred over GPT-4's feedback 58.67% of the time, which suggests it produces meaningful and critical feedback.

- Universal Reward Model Potential: Prometheus also achieves the highest accuracy on two human preference benchmarks (HHH Alignment and MT Bench Human Judgment), outperforming open-source reward models trained explicitly on human preference datasets.

These results indicate that Prometheus can both approximate human evaluation standards and serve as a universal reward model, which points to applications in model training and development.
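For readers unfamiliar with the metric, the Pearson correlation reported above measures how closely two sets of scores move together linearly. The sketch below computes it from scratch; the score lists are invented for illustration and are not data from the paper.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations (unnormalised covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations (unnormalised variances).
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)


# Illustrative scores only: one human rating and one model rating per response.
human_scores = [1, 2, 3, 4, 5, 3]
model_scores = [1, 3, 3, 4, 5, 2]

r = pearson(human_scores, model_scores)
print(f"Pearson r = {r:.3f}")  # prints "Pearson r = 0.900"
```

A value near 1 means the evaluator ranks and spaces responses much as the human raters do; a value near 0 means its scores carry little linear information about theirs.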

Implications and Future Directions

Prometheus challenges the prevailing dependence on proprietary LLMs for text evaluation by offering an open-source alternative with comparable performance. Supplying reference materials such as score rubrics and reference answers proves vital to its success, which suggests one avenue for further improving LLM evaluators. Additionally, its performance on ranking-based grading schemes suggests that Prometheus can be adapted as a reward model for various AI training methodologies, a meaningful step toward versatile, transparent, and accessible evaluation tools in NLP.
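One way an absolute-score evaluator could be checked on a ranking task is to score each response in a pair independently and count how often the human-preferred response receives the higher score. The sketch below assumes that setup; the function name, the tie-handling rule, and the example scores are hypothetical and are not taken from the paper's evaluation code.

```python
from typing import Iterable, Tuple


def preference_accuracy(score_pairs: Iterable[Tuple[float, float]]) -> float:
    """Fraction of pairs where the human-preferred response scores strictly higher.

    Each pair is (score_of_preferred_response, score_of_rejected_response).
    Ties count as misses here; that is an assumption of this sketch.
    """
    pairs = list(score_pairs)
    if not pairs:
        raise ValueError("need at least one pair")
    hits = sum(1 for preferred, rejected in pairs if preferred > rejected)
    return hits / len(pairs)


# Made-up evaluator scores for four response pairs.
pairs = [(5, 3), (4, 4), (2, 3), (4, 1)]
accuracy = preference_accuracy(pairs)
print(accuracy)  # prints 0.5
```

How ties are treated matters for a coarse score scale, since two responses can easily land on the same level; a different rule (for example, skipping ties) would give a different accuracy on the same scores.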

The open-sourcing of Prometheus, along with the Feedback Collection dataset, broadens access to high-quality evaluation tools and invites the community to refine and extend this work. Future research could explore domain-specific evaluator models, further diversify evaluation criteria, and integrate Prometheus into broader AI training and development workflows.

This research also opens an important discussion on transparency, autonomy, and accessibility in AI evaluation. Prometheus marks a step forward for LLM-based evaluation and reflects the open, collaborative approach that sustained progress in AI research depends on.