Introduction to Automated VLM Evaluation

Evaluating Vision-Language Models (VLMs) is a complex process. It goes beyond judging text generation alone: a good output must be not only well written but also grounded in the content of a given image. Because VLMs are relatively new, traditional metrics may not suffice, as they often miss nuanced aspects such as the interplay between the visual content and the generated text. Qualitative, human-driven evaluation captures these aspects but scales poorly, since it is costly and subject to human bias.

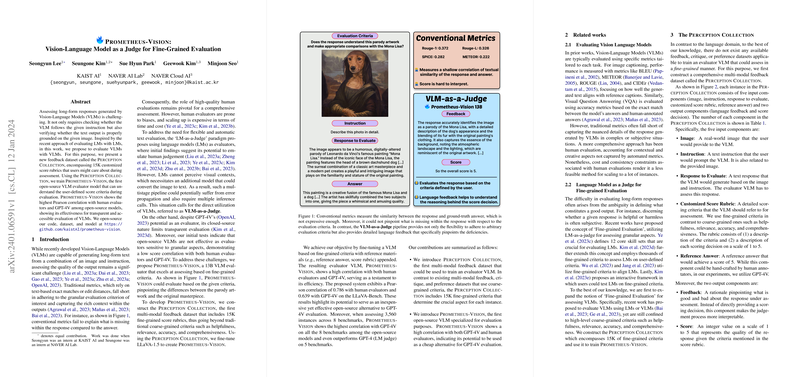

The Concept of VLM-as-a-Judge

The solution proposed in the literature is the ‘LM-as-a-Judge’ paradigm, in which a language model (LM) estimates the quality of another LM's output. Applying this to VLMs introduces a complication: a text-only judge cannot see the image, so an additional model must first translate the visual information into text before evaluation can take place. To avoid this extra step and the errors it can propagate, the authors propose using VLMs themselves as judges. Because a VLM can parse visual data directly, this approach aims to make the assessment both more streamlined and more accurate.
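The difference between the two pipelines can be sketched in a few lines. This is a minimal illustration under stated assumptions, not code from the paper: the models are stand-in callables, and the names `Sample`, `lm_as_judge` and `vlm_as_judge` are hypothetical. The point is structural: in the LM-as-a-Judge route, whatever the captioner gets wrong is all the text-only judge ever sees.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Sample:
    image: bytes        # raw image data (placeholder type for illustration)
    instruction: str    # prompt given to the VLM under evaluation
    response: str       # that VLM's answer, which is to be judged

# Hypothetical model interfaces: each "model" is just a callable here.
Captioner = Callable[[bytes], str]          # image -> text description
TextJudge = Callable[[str], int]            # text prompt -> score
VisionJudge = Callable[[bytes, str], int]   # (image, text prompt) -> score

def lm_as_judge(sample: Sample, captioner: Captioner, judge: TextJudge) -> int:
    """Two-stage pipeline: caption the image, then let a text-only LM judge."""
    caption = captioner(sample.image)  # any captioning error flows downstream
    prompt = (
        f"Image description: {caption}\n"
        f"Instruction: {sample.instruction}\n"
        f"Response: {sample.response}"
    )
    return judge(prompt)

def vlm_as_judge(sample: Sample, judge: VisionJudge) -> int:
    """One-stage pipeline: the judge receives the image itself."""
    prompt = f"Instruction: {sample.instruction}\nResponse: {sample.response}"
    return judge(sample.image, prompt)
```

Removing the captioning stage removes one model, one prompt hop, and one source of error, which is the practical motivation for VLM-as-a-Judge.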

Introducing Prometheus-Vision

To advance this line of work, the paper introduces Prometheus-Vision, an open-source, 13B-parameter VLM built specifically for evaluation. It is trained on a newly curated dataset, the Perception Collection, which contains 15,000 fine-grained score rubrics reflecting user-defined assessment criteria. This training is what sets the model apart: it can judge a response against detailed, custom criteria and give specific language feedback on the response's shortcomings. The model performs strongly, aligning closely with human judgments and surpassing other open-source models on several benchmarks.
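Rubric-conditioned judging of this kind can be sketched as two steps: assemble a prompt containing the instruction, the response and a score rubric, then split the judge's output into feedback and a score. The sketch below assumes a 1-5 scale and a `[RESULT] <score>` marker after the feedback, in the general style of the Prometheus family; the exact template used to train Prometheus-Vision may differ, and the names `ScoreRubric`, `build_judge_prompt` and `parse_judgement` are hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class ScoreRubric:
    criteria: str                   # user-defined assessment criterion
    descriptions: dict[int, str]    # score (assumed 1-5) -> what it means

def build_judge_prompt(instruction: str, response: str, rubric: ScoreRubric) -> str:
    """Assemble a rubric-conditioned evaluation prompt (hypothetical template)."""
    levels = "\n".join(
        f"Score {s}: {rubric.descriptions[s]}" for s in sorted(rubric.descriptions)
    )
    return (
        "Given the image, evaluate the response strictly against the rubric. "
        "Write feedback first, then end with '[RESULT] <score>'.\n\n"
        f"### Instruction:\n{instruction}\n\n"
        f"### Response to evaluate:\n{response}\n\n"
        f"### Score rubric:\n[{rubric.criteria}]\n{levels}\n\n"
        "### Feedback:"
    )

# Assumed output convention: free-text feedback followed by "[RESULT] n".
_RESULT = re.compile(r"\[RESULT\]\s*([1-5])\b")

def parse_judgement(output: str) -> tuple[str, int]:
    """Split the judge's output into (feedback, score); raise if no score found."""
    match = _RESULT.search(output)
    if match is None:
        raise ValueError("no '[RESULT] <score>' marker in judge output")
    feedback = output[: match.start()].strip()
    return feedback, int(match.group(1))
```

Asking for feedback before the score is a deliberate choice in this sketch: the judge has to articulate the deficiencies first, and the returned feedback is what makes the score interpretable to a user.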

Empirical Results and Considerations

In the reported experiments, Prometheus-Vision shows a high correlation with human evaluators, particularly on benchmarks featuring diverse real-world images. It also competes well with closed-source counterparts such as GPT-4V, offering an accessible alternative for transparent VLM evaluation. Notably, it shows potential as a critique tool to support human assessment, producing high-quality feedback that was rated on par with, or in certain cases better than, that of some proprietary models.
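Agreement between an automated judge and human evaluators is usually quantified with a correlation coefficient over per-sample scores. This summary does not say which coefficient was used, so the sketch below simply assumes Pearson's r as a common choice; the function name `pearson` and the toy scores are made up for illustration and are not results from the paper.

```python
from math import sqrt
from typing import Sequence

def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variances, all scaled by the same factor (which cancels).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)

# Toy, made-up scores on a 1-5 scale (not results from the paper).
judge_scores = [1, 2, 3, 4, 5]
human_scores = [2, 1, 4, 3, 5]
print(round(pearson(judge_scores, human_scores), 2))  # 0.8
```

A value near 1 means the judge ranks and spaces responses much as humans do; rank-based coefficients such as Spearman's are an alternative when only the ordering matters.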

Despite its strengths, Prometheus-Vision is not without limitations. Its results leave room for improvement on text-rich images such as charts and diagrams, which suggests that future versions built on more capable visual encoders could perform better. The paper also acknowledges that its dataset is biased toward real-world imagery over text-heavy graphics, and points to enriching future datasets with such images as a promising direction.

Concluding Remarks

The research makes a significant contribution to the field with its open-source VLM evaluator, Prometheus-Vision. Together with its training dataset, the Perception Collection, the model signals a shift toward more nuanced, user-centric methods of fine-grained VLM assessment. The authors encourage further exploration of multimodal feedback datasets to broaden the scope and capabilities of VLM evaluators across contexts, potentially extending to the evaluation of AI-generated images.