Repeat Ranking in RLAIF for Training Multilingual LLMs

Introduction

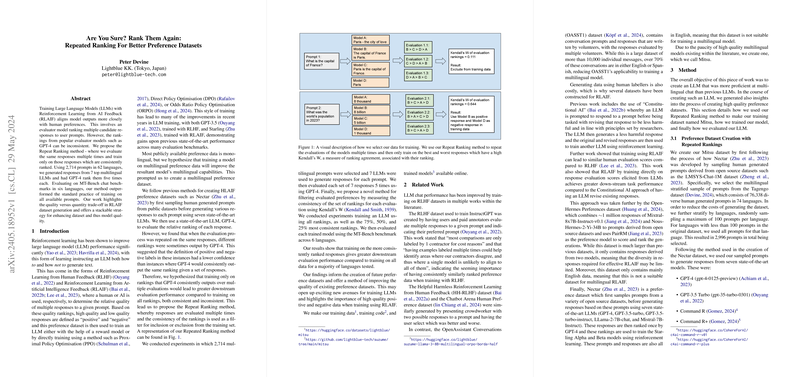

Reinforcement Learning from AI Feedback (RLAIF) has emerged as a key method for aligning large language models (LLMs) with human preferences. When an evaluator model is used to rank multiple candidate responses to each user prompt, the consistency of those rankings becomes crucial. This paper introduces the Repeat Ranking method, in which the same set of responses is evaluated multiple times to check whether the rankings agree. Only responses that are ranked consistently across evaluations are used for training, on the hypothesis that this will improve downstream evaluation performance.

Methodology

The paper builds a multilingual preference dataset and applies the Repeat Ranking method to it. First, 7 state-of-the-art multilingual LLMs generated responses to 2,714 prompts across 62 languages. GPT-4 then ranked each prompt's set of responses five times, and the agreement among the five rankings was measured with Kendall's W (coefficient of concordance). Training sets were then built from the most consistently ranked 25%, 50%, and 75% of the data, plus the full (100%) set.
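Kendall's W measures how closely m rankings of the same n items agree, from 0 (no overall agreement) to 1 (identical rankings). With R_i the sum of the ranks that response i received, W = 12S / (m²(n³ − n)), where S = Σ_i (R_i − m(n + 1)/2)². The sketch below computes W for each prompt's repeated rankings and keeps the most consistent fraction of prompts. It assumes complete rankings without ties (so no tie correction is applied), and the function names and data layout (`prompt_rankings` mapping a prompt ID to its list of rank vectors) are hypothetical, not taken from the paper's code.

```python
def kendalls_w(rankings: list[list[int]]) -> float:
    """Kendall's coefficient of concordance W for m complete rankings of n items.

    rankings[j][i] is the rank (1 = best) that evaluation j gave to response i.
    Assumes no tied ranks, so no tie correction is applied.
    """
    m = len(rankings)     # number of repeated evaluations (5 in the paper)
    n = len(rankings[0])  # number of responses per prompt (7 in the paper)
    # Sum of the ranks each response received across the m evaluations.
    rank_sums = [sum(r[i] for r in rankings) for i in range(n)]
    mean_rank_sum = m * (n + 1) / 2
    s = sum((rs - mean_rank_sum) ** 2 for rs in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))


def select_most_consistent(prompt_rankings: dict[str, list[list[int]]],
                           fraction: float) -> list[str]:
    """Return the IDs of the `fraction` of prompts with the highest Kendall's W.

    Hypothetical helper: prompts are sorted by W (highest first) and the
    top round(len * fraction) are kept, e.g. fraction=0.25 for a top-25% set.
    """
    scored = sorted(prompt_rankings,
                    key=lambda pid: kendalls_w(prompt_rankings[pid]),
                    reverse=True)
    return scored[:round(len(scored) * fraction)]


if __name__ == "__main__":
    data = {
        # Five identical rankings: perfect agreement.
        "p1": [[1, 2, 3, 4, 5, 6, 7]] * 5,
        # Five conflicting rankings: very weak agreement.
        "p2": [[1, 2, 3, 4, 5, 6, 7], [7, 6, 5, 4, 3, 2, 1],
               [1, 2, 3, 4, 5, 6, 7], [7, 6, 5, 4, 3, 2, 1],
               [4, 3, 2, 1, 7, 6, 5]],
    }
    print(kendalls_w(data["p1"]))  # 1.0
    print(kendalls_w(data["p2"]))  # 0.04
    print(select_most_consistent(data, 0.5))  # ['p1']
```

Sorting by W and slicing keeps the selection simple; the paper's exact thresholding or tie-breaking between prompts with equal W is not described here, so this is only one plausible way to form the quantile subsets.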

The models trained on these subsets were compared with each other and with a baseline model on multilingual chat benchmarks. The Repeat Ranking method was also compared against two alternatives: training on randomly selected subsets, and training only on responses from the best- and worst-performing models.
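ORPO training needs preference pairs, and the comparisons above need two baselines. This summary does not say how ranked responses become pairs, so the sketch below assumes a common choice: the response with the best mean rank across the repeated evaluations is `chosen` and the one with the worst mean rank is `rejected`. It also sketches a random-subset baseline (assumed here to match the size of the filtered subset) and a fixed-model baseline that always prefers one model's response over another's. All function names and the dict layout are hypothetical.

```python
import random


def build_pair(prompt: str, responses: list[str],
               rankings: list[list[int]]) -> dict[str, str]:
    """Turn repeated rankings of one prompt's responses into a preference pair.

    rankings[j][i] is the rank (1 = best) evaluation j gave to responses[i].
    Assumption: chosen = best (lowest) mean rank, rejected = worst mean rank.
    """
    m = len(rankings)
    mean_ranks = [sum(r[i] for r in rankings) / m for i in range(len(responses))]
    best = min(range(len(responses)), key=lambda i: mean_ranks[i])
    worst = max(range(len(responses)), key=lambda i: mean_ranks[i])
    return {"prompt": prompt, "chosen": responses[best], "rejected": responses[worst]}


def fixed_model_pair(prompt: str, responses_by_model: dict[str, str],
                     best_model: str, worst_model: str) -> dict[str, str]:
    """Baseline: ignore per-prompt rankings and always prefer one model's answer."""
    return {
        "prompt": prompt,
        "chosen": responses_by_model[best_model],
        "rejected": responses_by_model[worst_model],
    }


def random_subset(prompt_ids: list[str], fraction: float, seed: int = 0) -> list[str]:
    """Baseline: a random sample with as many prompts as a filtered subset."""
    rng = random.Random(seed)  # seeded so the baseline is reproducible
    return rng.sample(prompt_ids, round(len(prompt_ids) * fraction))


if __name__ == "__main__":
    pair = build_pair("q", ["a", "b", "c"], [[2, 1, 3], [1, 2, 3], [2, 1, 3]])
    print(pair)  # {'prompt': 'q', 'chosen': 'b', 'rejected': 'c'}
    print(random_subset(["p1", "p2", "p3", "p4"], 0.5))
```

Averaging ranks before picking the pair means a single outlier evaluation cannot flip which response is chosen, which fits the method's emphasis on consistency; other pairing schemes (for example, several pairs per prompt) would also be reasonable.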

Results

The key findings are:

- Models trained only on the most consistently ranked responses (top 25%, 50%, and 75%) outperformed the model trained on all data in multiple languages on the MT-Bench chat benchmark.

- Suzume-ORPO-50 (trained on the 50% most consistent data) matched or outperformed Suzume-ORPO-100 (trained on all data) in 5 of the 6 languages evaluated, showing that less but higher-quality data can be more effective.

- The best ORPO-trained model also outperformed GPT-3.5 Turbo in 4 of the 6 languages evaluated.

However, the paper also noted a trade-off on next-token prediction tasks: the ORPO-trained model using all data scored lower on the Belebele benchmark. Models trained on consistency-filtered subsets (particularly Suzume-ORPO-75 and Suzume-ORPO-25) fared better, matching or exceeding the base model's scores in several languages.

Discussion

The results show the substantial improvement in chat capabilities that the Repeat Ranking method can deliver. They suggest that ensuring the training data was evaluated consistently can matter more than maximizing the quantity of data. Training only on the most consistently ranked responses not only improves evaluation scores but may also reduce training cost, since less data is used.

This work also highlights the balance between data quality and quantity in RLAIF dataset construction and offers a practical strategy for refining datasets. Applying the same consistency filtering to existing preference datasets could improve their quality and, in turn, the performance of LLMs trained on them.

Future Directions

The paper suggests several avenues for future work:

- Expanding Beyond RLAIF: The same approach could be applied to Reinforcement Learning from Human Feedback (RLHF) datasets, to test whether filtering for consistent human evaluations also improves performance.

- Diverse Evaluator Models: Combining evaluations from multiple high-performing LLMs (e.g., Claude 3, Gemini 1.5 Pro) might yield more comprehensive and less biased response evaluations.

- Multi-turn Conversations: Extending datasets to include multi-turn conversations could provide a richer training ground for LLMs, enhancing their interactive capabilities.

- Task Difficulty Stratification: Filtering prompts based on task difficulty could optimize training resource allocation, potentially improving LLM performance in challenging tasks.

- Tool-Assisted Evaluations: Giving the evaluator tools or agents (e.g., search tools for fact-checking, calculators for verifying math) could further improve the quality of preference datasets.

Conclusion

The Repeat Ranking method is a significant advance in RLAIF, demonstrating that training on consistently ranked responses improves performance on multilingual chat tasks. This research underscores the importance of evaluation consistency when creating preference datasets and offers a practical way to improve the quality and efficiency of LLM training. Further work along the directions above may help produce more capable multilingual LLMs.