An Analysis of Multimodal LLM Editing

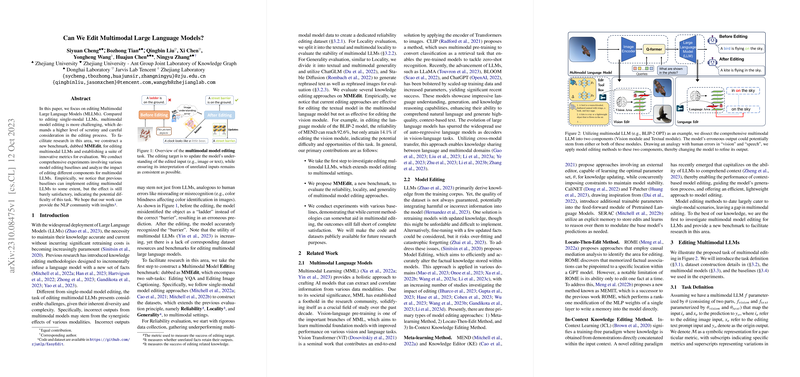

The paper "Can We Edit Multimodal LLMs?" addresses the growing need to refine and adapt Multimodal LLMs (MLLMs). As LLMs are deployed more widely, they must keep their knowledge accurate and current without extensive retraining. Editing MLLMs is inherently complex because these models integrate multiple data modalities. The paper proposes a benchmark, MMEdit, to support research in this area and evaluates how effective various model editing approaches are on it.

Research Contributions and Methodology

The paper's main contribution is MMEdit, a benchmark designed specifically to evaluate the editing of MLLMs. MMEdit covers two tasks: Editing Visual Question Answering (E-VQA) and Editing Image Captioning (E-IC). The researchers constructed the dataset by gathering entries from established datasets on which the models perform poorly. This gives the benchmark cases where an edit is actually needed and a basis for evaluating how efficiently an MLLM's knowledge can be updated.
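The selection step described above can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the authors' pipeline: the `Example` record, the `collect_edit_candidates` helper, the exact-match criterion, and the stand-in `toy_predict` model are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Example:
    image_id: str  # hypothetical identifier for the image
    prompt: str    # question (E-VQA) or captioning instruction (E-IC)
    target: str    # reference answer or caption


def collect_edit_candidates(
    examples: List[Example],
    predict: Callable[[str, str], str],
) -> List[Example]:
    """Keep only the examples the model currently gets wrong."""
    candidates = []
    for ex in examples:
        prediction = predict(ex.image_id, ex.prompt)
        # Case-insensitive exact match is an assumed criterion, chosen for simplicity.
        if prediction.strip().lower() != ex.target.strip().lower():
            candidates.append(ex)
    return candidates


def toy_predict(image_id: str, prompt: str) -> str:
    """Stand-in model that always answers "red"; purely illustrative."""
    return "red"


pool = [
    Example("img_001", "What color is the bus?", "red"),
    Example("img_002", "What color is the car?", "blue"),
]
edit_candidates = collect_edit_candidates(pool, toy_predict)
```

Here only the second example survives the filter, because it is the only one the stand-in model answers incorrectly. A real pipeline would likely need a softer criterion for captions, where exact match is too strict.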

The paper defines three metrics for measuring the success of an edit: reliability, locality, and generality. Reliability measures whether the edited model produces the updated output, locality measures whether the edit avoids unintended side effects on unrelated inputs, and generality measures whether the edit carries over to rephrased inputs.
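A rough sketch of how such metrics might be computed is given below. It assumes exact-match scoring and models represented as plain lookup tables; the helper names (`accuracy`, `agreement`, `make_model`) and the toy data are hypothetical, and the paper's own definitions may differ in detail.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str, str], str]  # (image_id, prompt) -> output text


def accuracy(model: Model, cases: List[Tuple[str, str, str]]) -> float:
    """Fraction of (image_id, prompt, target) cases answered with the target."""
    hits = sum(model(img, prompt) == target for img, prompt, target in cases)
    return hits / len(cases)


def agreement(before: Model, after: Model, inputs: List[Tuple[str, str]]) -> float:
    """Fraction of inputs on which the pre-edit and post-edit models agree."""
    hits = sum(before(img, prompt) == after(img, prompt) for img, prompt in inputs)
    return hits / len(inputs)


def make_model(table: Dict[Tuple[str, str], str]) -> Model:
    """Toy model backed by a lookup table (an assumption for illustration)."""
    return lambda img, prompt: table.get((img, prompt), "unknown")


base_table = {
    ("img_bus", "What color is the bus?"): "blue",          # wrong answer to be edited
    ("img_bus", "Which color does the bus have?"): "blue",  # rephrased prompt
    ("img_cat", "What animal is this?"): "cat",             # unrelated input
    ("img_dog", "What animal is this?"): "dog",             # unrelated input
}
edited_table = dict(base_table)
edited_table[("img_bus", "What color is the bus?")] = "red"
edited_table[("img_bus", "Which color does the bus have?")] = "red"
edited_table[("img_dog", "What animal is this?")] = "cat"   # simulated side effect

base, edited = make_model(base_table), make_model(edited_table)

# Reliability: does the edited model give the new target on the edit input?
reliability = accuracy(edited, [("img_bus", "What color is the bus?", "red")])
# Generality: does the edit hold on a rephrased input?
generality = accuracy(edited, [("img_bus", "Which color does the bus have?", "red")])
# Locality: are outputs on unrelated inputs unchanged by the edit?
locality = agreement(
    base,
    edited,
    [("img_cat", "What animal is this?"), ("img_dog", "What animal is this?")],
)
```

In this toy setup the edit is fully reliable and general, but locality is 0.5 because one of the two unrelated inputs changed its answer after the edit.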

Experimentation and Results

The researchers conducted extensive experiments using notable MLLMs such as BLIP-2 OPT and MiniGPT-4. Various editing methods including MEND, Knowledge Editor, SERAC, and In-Context Knowledge Editing were evaluated.

Reliability: Dedicated editing methods significantly outperformed the baseline methods. In-Context Knowledge Editing and SERAC in particular achieved high success rates in correcting erroneous outputs. Fine-tuning approaches, by contrast, struggled with reliability, largely because they failed to capture task-specific multimodal characteristics.

Locality: Most methods preserved textual locality well, but keeping the vision module stable proved difficult. Memory-based approaches such as SERAC showed the most promise but were hampered by inadequate constraints on multimodal locality (M-Locality).

Generality: Image generalization consistently lagged behind text generalization across experiments. Memory-based editing methods demonstrated strong generality, but their lower locality scores point to a key area for future research.
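To make the idea of a memory-based editor concrete, the sketch below stores edits in an external memory and routes each query either to that memory or to the unmodified base model. It is a toy in the spirit of such methods, not SERAC's actual implementation: the `ToyMemoryEditor` class, the token-overlap scope check, and the 0.6 threshold are all assumptions, and the routing looks at text only, so it says nothing about how the evaluated methods handle the image input.

```python
from typing import Callable, Dict, Optional, Set


def tokens(text: str) -> Set[str]:
    """Lowercased word set; a crude stand-in for a learned scope classifier."""
    return set(text.lower().replace("?", "").split())


class ToyMemoryEditor:
    def __init__(self, base_model: Callable[[str], str], threshold: float = 0.6):
        self.base_model = base_model        # left untouched by edits
        self.threshold = threshold          # assumed scope cutoff
        self.memory: Dict[str, str] = {}    # edit prompt -> new target

    def edit(self, prompt: str, target: str) -> None:
        """Record an edit without changing the base model's weights."""
        self.memory[prompt] = target

    def _in_scope(self, prompt: str) -> Optional[str]:
        """Return the best-matching stored prompt if it is similar enough."""
        query = tokens(prompt)
        best_key, best_score = None, 0.0
        for key in self.memory:
            key_tokens = tokens(key)
            union = query | key_tokens
            if not union:
                continue
            score = len(query & key_tokens) / len(union)  # Jaccard similarity
            if score > best_score:
                best_key, best_score = key, score
        return best_key if best_score >= self.threshold else None

    def __call__(self, prompt: str) -> str:
        key = self._in_scope(prompt)
        if key is not None:
            return self.memory[key]         # in scope: answer from the edit memory
        return self.base_model(prompt)      # out of scope: defer to the base model


editor = ToyMemoryEditor(base_model=lambda prompt: "base answer")
editor.edit("What color is the bus?", "red")

exact = editor("What color is the bus?")
rephrased = editor("What color is this bus?")
unrelated = editor("How many people are waiting?")
```

The exact and rephrased prompts are answered from memory, while the unrelated prompt falls through to the base model. The threshold controls the trade-off the results describe: a looser scope check helps generality but risks capturing unrelated inputs and lowering locality.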

Implications and Future Directions

The findings have both practical and theoretical implications. Practically, they underscore the importance of targeted editing techniques that preserve the model's broader knowledge. Theoretically, the paper invites further inquiry into efficient multimodal editing strategies that account for the inherent complexity of these systems.

Future work could explore editing paradigms that co-edit across modalities, drawing on both visual and textual information to enhance model performance. Developing methods with better vision editing capabilities will also be crucial for addressing current limitations.

In conclusion, this paper lays a foundation for subsequent research in MLLM editing, contributing a benchmark and valuable insights to the NLP community. As multimodal models continue to grow in complexity and scope, refining approaches to knowledge editing will remain an important direction in AI research.