Overview of Editing LLMs: Problems, Methods, and Opportunities

The paper "Editing LLMs: Problems, Methods, and Opportunities" examines current methods for editing LLMs and the challenges they face. Model editing alters an LLM's behavior within a targeted domain without degrading its performance on unrelated inputs. The paper gives a formal task definition, evaluates a range of editing techniques, and introduces a new benchmark dataset to support more robust evaluation.

Task Definition and Challenges

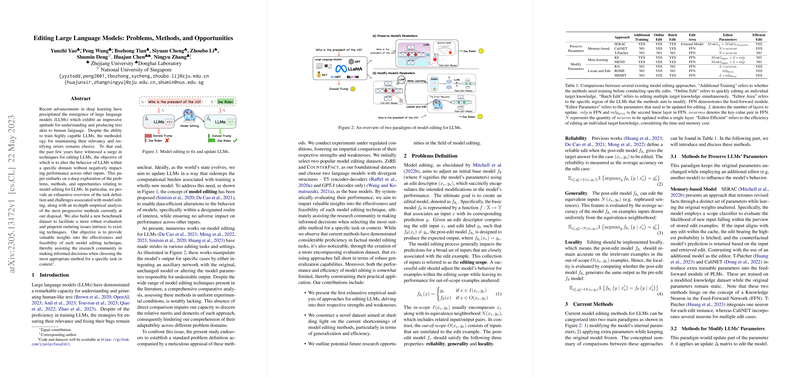

Model editing aims to adjust the behavior of an LLM, represented as a function $f_\theta$, so that its prediction changes for a specific edit descriptor $(x_e, y_e)$ while its behavior on inputs outside the editing scope stays unchanged. Three properties characterize a successful edit: reliability, generalization, and locality. Reliability requires the edited model to produce the desired output $y_e$ for the edit example itself. Generalization requires the edit to carry over to equivalent neighbors of the edit example, such as rephrasings of the same prompt. Locality requires that the model's predictions for unrelated examples remain unaffected.
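The three properties can be made concrete with a small scoring sketch. This is an illustrative toy, not the paper's evaluation code: the `Model` type, the helper names, and the Eiffel Tower prompts are all assumptions introduced here, and exact string match stands in for whatever answer comparison a real benchmark uses.

```python
from typing import Callable, List, Tuple

# Hypothetical interface: a model maps a prompt string to an answer string.
Model = Callable[[str], str]


def accuracy(model: Model, pairs: List[Tuple[str, str]]) -> float:
    """Fraction of (prompt, target) pairs the model answers exactly."""
    if not pairs:
        return 0.0
    return sum(model(x) == y for x, y in pairs) / len(pairs)


def locality(pre: Model, post: Model, prompts: List[str]) -> float:
    """Fraction of out-of-scope prompts whose answer is unchanged by the edit."""
    if not prompts:
        return 0.0
    return sum(pre(x) == post(x) for x in prompts) / len(prompts)


# Toy pre-edit model backed by a lookup table (assumption for illustration).
KNOWLEDGE = {
    "Where is the Eiffel Tower?": "Paris",
    "In which city is the Eiffel Tower?": "Paris",
    "What is the capital of Japan?": "Tokyo",
}


def pre_edit(prompt: str) -> str:
    return KNOWLEDGE.get(prompt, "unknown")


def post_edit(prompt: str) -> str:
    # A deliberately brittle edit: only the exact edit prompt is overridden.
    overrides = {"Where is the Eiffel Tower?": "Rome"}
    return overrides.get(prompt, pre_edit(prompt))


edit_pairs = [("Where is the Eiffel Tower?", "Rome")]
paraphrase_pairs = [("In which city is the Eiffel Tower?", "Rome")]
unrelated_prompts = ["What is the capital of Japan?"]

reliability_score = accuracy(post_edit, edit_pairs)
generalization_score = accuracy(post_edit, paraphrase_pairs)
locality_score = locality(pre_edit, post_edit, unrelated_prompts)
```

In this toy case the edit is reliable and local but does not generalize, because the paraphrased prompt still returns the old answer.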

Evaluation of Current Methods

The paper categorizes existing model editing methods into two main paradigms: preserving and modifying model parameters.

- Preserving Parameters:

  - Memory-based Models: Systems like SERAC store edit examples explicitly and use a retriever to select the edits relevant to each input; related approaches rely on the in-context learning capabilities of LLMs to apply the retrieved edits.

  - Additional Parameters: Techniques like T-Patcher and CaliNET introduce new neurons in specific network layers to handle individual or multiple edits.

- Modifying Parameters:

  - Locate-Then-Edit: ROME and MEMIT first identify the parameters that store the targeted knowledge and then apply matrix updates to them.

  - Meta-learning Approaches: MEND and KE use hypernetworks to predict the weight updates needed for an edit.
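To give a feel for the "matrix update" step in locate-then-edit methods, the sketch below applies a plain rank-one update to a small weight matrix so that a chosen key vector maps to a new value vector. It is a simplified illustration under stated assumptions, not ROME or MEMIT themselves: ROME's actual update additionally weights the key using statistics estimated from the model's activations, which is omitted here, and the function names and toy matrices are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply matrix W by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rank_one_edit(W: Matrix, key: Vector, value: Vector) -> Matrix:
    """Return W' = W + (value - W key) key^T / (key^T key).

    After the update, W' maps `key` exactly to `value`, while any vector
    orthogonal to `key` is mapped exactly as before.
    """
    norm_sq = sum(k * k for k in key)
    if norm_sq == 0.0:
        raise ValueError("key must be non-zero")
    residual = [v - c for v, c in zip(value, matvec(W, key))]
    return [
        [w + r * k / norm_sq for w, k in zip(row, key)]
        for row, r in zip(W, residual)
    ]


# Toy 2x2 layer (assumption): identity weights, one key to rewrite.
W = [[1.0, 0.0], [0.0, 1.0]]
key = [1.0, 0.0]            # stands in for the representation of the edited fact
new_value = [0.0, 2.0]      # desired output for that key
unrelated_key = [0.0, 3.0]  # orthogonal to `key`, so it should be unaffected

W_edited = rank_one_edit(W, key, new_value)
edited_output = matvec(W_edited, key)
unrelated_before = matvec(W, unrelated_key)
unrelated_after = matvec(W_edited, unrelated_key)
```

The orthogonal input is untouched, which is the idealized version of locality. In a real model unrelated keys are rarely exactly orthogonal to the edited one, which is one reason side effects appear in practice.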

Empirical Analysis

The paper conducts an empirical analysis on two datasets, ZsRE and CounterFact, across several models. It finds that methods like ROME and SERAC perform strongly on individual edits but scale poorly to larger model architectures and to batch edits. Memory-based models execute edits quickly, yet they require a costly training phase before any edit can be applied.

Comprehensive Evaluation: Portability, Locality, and Efficiency

To address gaps in existing evaluations, the paper introduces a new framework assessing portability, locality, and efficiency:

- Portability: Tests whether an edit carries over to related contexts, such as questions that require reasoning from the edited fact, and shows that current methods struggle to generalize changes beyond the direct edit.

- Locality: Assesses side effects, indicating that many methods fail to restrict changes solely to targeted knowledge.

- Efficiency: Examines computational cost in time and memory, noting that while some methods like SERAC apply edits quickly once trained, the training they require beforehand remains prohibitively expensive.
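The three axes above can be gathered into one evaluation report, sketched below. Everything here is a hypothetical illustration rather than the paper's benchmark code: the `exact_match_editor`, the `evaluate` helper, and the toy prompts are assumptions, and wall-clock time for applying a single edit is only a rough proxy for efficiency.

```python
import time
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]
Edit = Tuple[str, str]  # (edit prompt, new target answer)
Editor = Callable[[Model, Edit], Model]


def exact_match_editor(base: Model, edit: Edit) -> Model:
    """Toy editor: overrides the answer only for the exact edit prompt."""
    prompt, target = edit

    def edited(query: str) -> str:
        return target if query == prompt else base(query)

    return edited


def evaluate(
    editor: Editor,
    base: Model,
    edit: Edit,
    portability_pairs: List[Tuple[str, str]],
    locality_prompts: List[str],
) -> Dict[str, float]:
    """Score one edit on portability, locality, and time taken to apply it."""
    start = time.perf_counter()
    edited = editor(base, edit)
    edit_seconds = time.perf_counter() - start

    ported = sum(edited(q) == a for q, a in portability_pairs)
    preserved = sum(edited(p) == base(p) for p in locality_prompts)
    return {
        "portability": ported / len(portability_pairs),
        "locality": preserved / len(locality_prompts),
        "edit_seconds": edit_seconds,
    }


# Toy base model backed by a lookup table (assumption for illustration).
FACTS = {
    "Where is the Eiffel Tower?": "Paris",
    "In which country is the Eiffel Tower?": "France",
    "What is the capital of Japan?": "Tokyo",
}


def base_model(query: str) -> str:
    return FACTS.get(query, "unknown")


report = evaluate(
    exact_match_editor,
    base_model,
    edit=("Where is the Eiffel Tower?", "Rome"),
    # A follow-up question whose answer should change if the edit is portable.
    portability_pairs=[("In which country is the Eiffel Tower?", "Italy")],
    locality_prompts=["What is the capital of Japan?"],
)
```

The toy editor scores perfectly on locality yet zero on portability, since the follow-up question still reflects the old fact. This mirrors, in miniature, the gap between direct-edit success and portability described above.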

Implications and Future Directions

The findings of this paper underscore the need for more robust and efficient model editing techniques that can adapt effectively to evolving datasets and problem scopes. Model editing holds substantial potential for improving LLM alignment with real-world changes without necessitating comprehensive retraining. However, challenges in scalability, especially in preserving model integrity during sequential and batch edits, warrant further research. Future advancements may focus on enhancing adaptability across diverse domains, elevating the practical utility of LLMs through fine-grained, efficient edits.

In summary, the paper offers an insightful examination of current model editing methodologies, shedding light on existing limitations and setting a foundation for future explorations in LLM adaptation.