Ada-Instruct: Adapting Instruction Generators for Complex Reasoning

The paper "Ada-Instruct: Adapting Instruction Generators for Complex Reasoning", authored by Wanyun Cui and Qianle Wang, introduces an approach to instruction generation that fine-tunes an open-source LLM instead of relying on in-context learning (ICL). It targets a weakness of existing ICL-based methods: they struggle to generate the long, complex instructions needed for reasoning tasks such as code completion and mathematical reasoning.

Problem Statement and Background

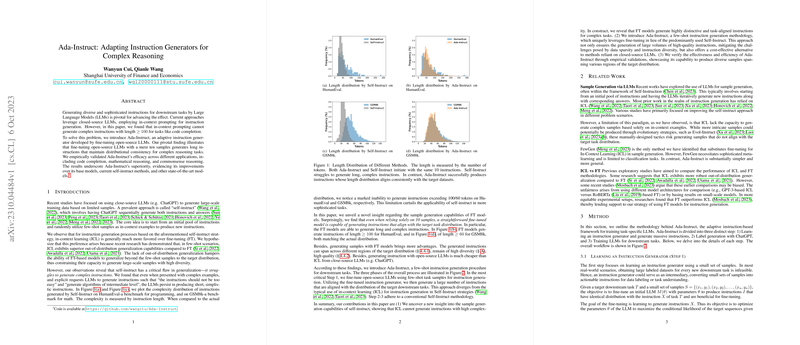

Prevailing approaches to instruction generation rely on closed-source LLMs prompted with ICL. While effective in many scenarios, ICL struggles to generate long, complex instructions (≥100 tokens), which sophisticated tasks such as code completion require. The authors argue that existing ICL-based self-instruct methods also fail to keep the generated instructions distributionally consistent with the downstream task.

Key Contributions

- Fine-Tuning for Instruction Generation: The paper introduces Ada-Instruct, an adaptive instruction generator built by fine-tuning rather than ICL. The authors show that with as few as ten samples, fine-tuning an open-source LLM yields long, complex instructions that align well with the target distribution of the downstream task.

- Empirical Validation Across Applications: Ada-Instruct's efficacy is validated empirically across diverse applications, including code completion (HumanEval, MBPP), mathematical reasoning (GSM8k, MATH), and commonsense reasoning (CommonsenseQA). The results show significant improvements over the base models and performance competitive with current state-of-the-art methods.
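The generate-then-annotate pipeline implied by these contributions can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the fixed prompt `GENERATOR_PROMPT`, the exact-match de-duplication step, and the `generate` and `annotate` callables (stand-ins for the fine-tuned open-source generator and for whatever model supplies responses) are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable

# Hypothetical fixed prompt; the prompt actually used by Ada-Instruct may differ.
GENERATOR_PROMPT = "Write a new task instruction:\n"


@dataclass
class TrainingExample:
    prompt: str
    completion: str


def build_generator_dataset(seed_instructions: Iterable[str]) -> list[TrainingExample]:
    """Turn a handful of seed instructions into (prompt, completion) pairs.

    The generator is fine-tuned to emit a whole instruction as its completion,
    so no in-context demonstrations appear in the prompt.
    """
    return [TrainingExample(GENERATOR_PROMPT, s.strip()) for s in seed_instructions if s.strip()]


def sample_instructions(generate: Callable[[str], str], n: int, seeds: Iterable[str]) -> list[str]:
    """Sample up to n instructions from the generator, skipping exact duplicates
    and verbatim copies of the seeds (an assumed filtering step)."""
    seen = {s.strip() for s in seeds}
    out: list[str] = []
    attempts = 0
    while len(out) < n and attempts < 10 * n:  # cap attempts so a repetitive generator cannot loop forever
        attempts += 1
        text = generate(GENERATOR_PROMPT).strip()
        if text and text not in seen:
            seen.add(text)
            out.append(text)
    return out


def build_task_dataset(instructions: Iterable[str], annotate: Callable[[str], str]) -> list[TrainingExample]:
    """Pair each generated instruction with a response from a hypothetical annotator model."""
    return [TrainingExample(i, annotate(i)) for i in instructions]
```

In practice `generate` would wrap sampling from the fine-tuned model with a nonzero temperature, so that repeated calls return different instructions.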

Numerical Results and Key Findings

- Code Completion:
  - On the HumanEval benchmark, Ada-Instruct achieves a pass@1 score of 64.0%, a relative improvement of 47.8% over its 13B-parameter base model, Code LLAMA-Python.
  - Against other state-of-the-art models such as WizardCoder and Code LLAMA-Instruct, Ada-Instruct is competitive while using fewer initial samples and less fine-tuning data.
- Mathematical Reasoning:
  - Ada-Instruct attains a pass@1 score of 48.6% on GSM8k, a 69.3% improvement over the 13B base model.
  - It also improves substantially on the harder MATH benchmark, indicating that Ada-Instruct can handle more challenging tasks.
- Commonsense Reasoning:
  - On the CommonsenseQA benchmark, Ada-Instruct reaches an accuracy of 75.5%, a relative improvement of 28.0% over its base model.
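These results are stated as relative improvements, so the corresponding base-model scores can be back-computed as score / (1 + gain / 100). The sketch below does that arithmetic and also shows a common way to compute pass@k, the unbiased estimator from the Codex paper (Chen et al., 2021), of which pass@1 is the k = 1 case. The implied baselines are derived here, not quoted from the paper, and the GSM8k entry assumes its 69.3% is also a relative gain; whether the authors used this exact pass@k estimator is not stated in the summary.

```python
from __future__ import annotations

from math import comb


def implied_baseline(score: float, relative_gain_pct: float) -> float:
    """Back out the base-model score from a reported score and relative gain:
    score = base * (1 + gain / 100)  =>  base = score / (1 + gain / 100)."""
    return score / (1 + relative_gain_pct / 100)


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n sampled solutions, c of which pass the tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# (score %, relative gain %) as reported in the summary above.
reported = {
    "HumanEval pass@1": (64.0, 47.8),
    "GSM8k": (48.6, 69.3),  # assumed to be a relative gain
    "CommonsenseQA accuracy": (75.5, 28.0),
}

for name, (score, gain) in reported.items():
    print(f"{name}: {score:.1f} -> implied base {implied_baseline(score, gain):.1f}")
```

With these inputs the loop prints implied baselines of 43.3 (HumanEval), 28.7 (GSM8k), and 59.0 (CommonsenseQA). For k = 1 the estimator reduces to c / n, the fraction of passing samples.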

Analysis of Instruction Generation

Task Creativity:

Using t-SNE to visualize the instruction distributions, the authors show that Ada-Instruct generates diverse instructions that spread across the actual task distribution instead of merely oversampling the region around the initial training samples.
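The t-SNE plots are a visual check. A rough numeric companion, which is a swapped-in heuristic and not the authors' method, is to measure how many generated instructions are not near-copies of the seed set. The sketch below uses Jaccard similarity over word sets as a crude stand-in for real embeddings, and its 0.8 threshold is an arbitrary assumption. A paper-style visualization would instead embed the instructions with a sentence encoder and project them with a tool such as scikit-learn's `TSNE`.

```python
from __future__ import annotations

import re


def tokens(text: str) -> set[str]:
    """Lower-cased word set; a crude stand-in for a real text embedding."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection over size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def novelty_rate(generated: list[str], seeds: list[str], threshold: float = 0.8) -> float:
    """Fraction of generated instructions whose most similar seed has Jaccard
    similarity below `threshold`, i.e. that are not near-copies of any seed."""
    if not generated:
        return 0.0
    seed_sets = [tokens(s) for s in seeds]
    novel = sum(
        1
        for g in generated
        if max((jaccard(tokens(g), s) for s in seed_sets), default=0.0) < threshold
    )
    return novel / len(generated)
```

A generator that merely oversampled its seeds would score near 0 on this measure; a high score says only that outputs differ lexically from the seeds, not that they match the real task distribution.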

Quality and Diversity:

Using ChatGPT to annotate instruction quality, the paper shows that the instructions generated by Ada-Instruct approach the quality of real samples. Although a small fraction of the generated samples are incorrect, they degrade downstream performance only slightly, illustrating Ada-Instruct's robustness in generating high-quality instructions.
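The quality annotation can be sketched as an LLM-as-judge loop. The prompt text in `JUDGE_TEMPLATE`, the yes/no parsing rule, and the `judge` callable (a stand-in for a ChatGPT API call) are hypothetical; the summary does not give the authors' annotation prompt. Running `correct_fraction` on both generated and real samples yields the kind of side-by-side quality comparison described above.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical judge prompt; the paper's actual annotation prompt is not given here.
JUDGE_TEMPLATE = (
    "Is the following task instruction correct and answerable? "
    "Reply with 'yes' or 'no'.\n\nInstruction:\n{instruction}"
)


def parse_verdict(reply: str) -> bool:
    """Count a reply as 'correct' if it starts with 'yes' (case-insensitive)."""
    return reply.strip().lower().startswith("yes")


def correct_fraction(instructions: list[str], judge: Callable[[str], str]) -> float:
    """Ask the judge about each instruction and return the share judged correct."""
    if not instructions:
        return 0.0
    verdicts = [parse_verdict(judge(JUDGE_TEMPLATE.format(instruction=i))) for i in instructions]
    return sum(verdicts) / len(verdicts)
```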

Implications and Future Directions

Practical Implications:

- The findings advocate for fine-tuning over ICL, especially for tasks requiring long, complex instructions.
- Ada-Instruct's methodology is cost-effective: it uses open-source LLMs and reduces reliance on expensive closed-source models (e.g., ChatGPT).

Theoretical Implications:

- The work challenges the conventional wisdom that ICL is superior for out-of-distribution generalization, instead demonstrating the strong potential of fine-tuning in this setting.
- The approach presented in Ada-Instruct could pave the way for refining instruction generation strategies, particularly where data sparsity and diversity present significant challenges.

Future Developments:

- Further research could extend Ada-Instruct to additional domains and task types, broadening the applicability of fine-tuning-based instruction generation.
- Optimizing the fine-tuning process, possibly with hybrid approaches that blend the strengths of ICL and fine-tuning, is a promising avenue for future exploration.

In conclusion, the Ada-Instruct framework offers a robust alternative to ICL for generating complex instructions, thereby enhancing the adaptability and versatility of LLMs across a variety of reasoning tasks.