Fine-Tuning LLMs with Sequential Instructions: A Comprehensive Overview

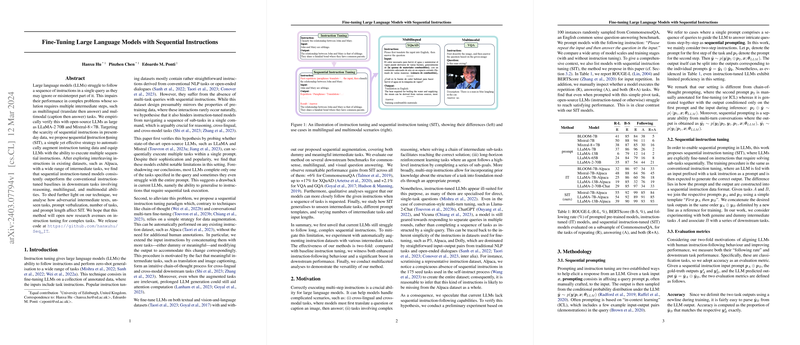

The paper "Fine-Tuning LLMs with Sequential Instructions" addresses a significant limitation of large language models (LLMs): following a sequence of instructions given in a single query. Traditional instruction datasets mostly contain single, self-contained tasks, which limits how well models trained on them handle multi-step interactions. The authors introduce a methodology termed Sequential Instruction Tuning (SIT), designed to improve a model's ability to execute multiple tasks in sequence, which is critical for complex downstream tasks involving reasoning, multilingual, and multimodal scenarios.

Sequential Instruction Tuning and Its Implications

The central contribution of this research is the SIT paradigm, which broadens instruction tuning to cover the execution of sequential sub-tasks. The method augments existing instruction datasets by interspersing their tasks with intermediate steps, and it does so without requiring additional human annotation, which keeps the cost of building training data low. For instance, intermediate tasks such as translation or image captioning turn a single query into an explicit step-by-step procedure (translate, then answer; caption, then answer), improving LLM performance on cross-lingual and cross-modal tasks.
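To make the idea of interspersing an intermediate step concrete, here is a minimal sketch of turning a single-task example into a sequential one. The `Example` class, the `make_sequential` helper, and the "First, ... Then, ..." wording are illustrative assumptions, not the paper's actual templates. The intermediate output is assumed to come from an existing resource such as parallel data or a translation system.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """Hypothetical container for one instruction-tuning example."""
    instruction: str
    input: str
    output: str


def make_sequential(example: Example,
                    intermediate_instruction: str,
                    intermediate_output: str) -> Example:
    """Prepend an intermediate task to a single-task example.

    The instruction asks for both steps in order, and the target
    output contains the result of each step in that same order.
    """
    instruction = f"First, {intermediate_instruction} Then, {example.instruction}"
    output = f"{intermediate_output}\n{example.output}"
    return Example(instruction=instruction, input=example.input, output=output)


# A cross-lingual question turned into a "translate, then answer" example.
base = Example(
    instruction="answer the question.",
    input="¿Cuál es la capital de Francia?",
    output="Paris",
)
seq = make_sequential(
    base,
    intermediate_instruction="translate the input into English.",
    intermediate_output="What is the capital of France?",
)
print(seq.instruction)
print(seq.output)
```

The key design point is that the target output exposes the intermediate result before the final answer, so the model is trained to carry out every step rather than skip to the last one.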

The paper reports quantitative results showing that SIT outperforms conventional instruction tuning across several benchmarks: +6% on CommonsenseQA, +17% on the multilingual XQuAD task, and +2.1% on visual question answering benchmarks such as VQA and GQA. These results indicate that SIT improves both the instruction-following ability of LLMs and their downstream task performance, a conclusion further supported by the paper's qualitative analyses.

Methodological Extensions and Evaluation

The SIT approach is experimentally validated using prominent LLMs, including LLaMA-2 70B and Mixtral-8×7B, fine-tuned on datasets that contain both genuine and synthetic intermediate tasks. The authors extend existing datasets (e.g., Alpaca) by concatenating additional tasks onto each instruction and extending the target response with the corresponding outputs. This procedure supports a broader array of tasks, such as reasoning and cross-lingual processing, including tasks not seen during fine-tuning.
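The dataset extension described above can be sketched for a synthetic intermediate task whose output is computable by rule, so no annotation is needed. This sketch assumes Alpaca-style records with `instruction`, `input`, and `output` fields. The "repeat the input" task, the `REPEAT_PREFIX` wording, and the `fraction` parameter are illustrative assumptions rather than the authors' exact recipe.

```python
import random

# Hypothetical wording for the synthetic intermediate task.
REPEAT_PREFIX = "First repeat the input, then do the following: "


def add_repeat_task(record: dict) -> dict:
    """Return a new record that asks for the input to be repeated first."""
    return {
        "instruction": REPEAT_PREFIX + record["instruction"],
        "input": record["input"],
        # The intermediate output is derived from the input itself.
        "output": record["input"] + "\n" + record["output"],
    }


def augment(dataset: list[dict], fraction: float, seed: int = 0) -> list[dict]:
    """Rewrite roughly `fraction` of the records that have a non-empty input.

    Records without an input are copied unchanged, since there is
    nothing to repeat.
    """
    rng = random.Random(seed)
    augmented = []
    for record in dataset:
        if record["input"] and rng.random() < fraction:
            augmented.append(add_repeat_task(record))
        else:
            augmented.append(dict(record))
    return augmented


alpaca_like = [
    {"instruction": "Classify the sentiment.", "input": "I loved it.", "output": "positive"},
    {"instruction": "Name a prime number.", "input": "", "output": "7"},
]
augmented = augment(alpaca_like, fraction=1.0)
for record in augmented:
    print(record)
```

Mixing augmented and unmodified records, rather than rewriting everything, is one way to keep single-instruction behaviour in the training mix. The ratio that works best is an empirical question this sketch does not answer.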

As part of their evaluation, the authors test the robustness of SIT models when prompted with unseen templates and inputs of varying length. The SIT models maintain high sequential task accuracy even when the intermediate steps are varied or when additional tasks are introduced at test time. This adaptability indicates that SIT models generalize beyond the settings they were trained on, which supports their use in real-world applications that require flexible, multi-step task execution.
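One way to picture the template and task-count variations is to render the same list of sub-tasks through different prompt verbalizations, keeping some for training and holding others out for testing. The two template functions and the train/held-out split below are hypothetical and are not taken from the paper.

```python
def chained(tasks: list[str]) -> str:
    """Verbalize sub-tasks as one sentence: 'First, a; then, b.'"""
    return "First, " + "; then, ".join(tasks) + "."


def numbered(tasks: list[str]) -> str:
    """Verbalize sub-tasks as a numbered list, one per line."""
    return "\n".join(f"{i}. {task}" for i, task in enumerate(tasks, start=1))


# Assumed split: one template seen in fine-tuning, one reserved for testing.
TRAIN_TEMPLATES = {"chained": chained}
HELD_OUT_TEMPLATES = {"numbered": numbered}

two_tasks = ["translate the question into English", "answer the question"]
# Adding a step at test time probes robustness to the number of tasks.
three_tasks = two_tasks + ["explain the answer in one sentence"]

for name, render in {**TRAIN_TEMPLATES, **HELD_OUT_TEMPLATES}.items():
    print(f"[{name}]")
    print(render(two_tasks))
    print(render(three_tasks))
```

Comparing model accuracy on prompts from `HELD_OUT_TEMPLATES` against prompts from `TRAIN_TEMPLATES` would then separate genuine sequential instruction following from memorization of one surface form.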

Theoretical and Practical Implications

Theoretically, SIT extends the conceptual framing of instruction tuning by highlighting the importance of task order and intermediate processing steps in multi-step reasoning. It suggests a new dimension of LLM training, task chaining, which could prove useful for future studies of how LLMs process multi-step problems.

Practically, SIT makes LLMs more applicable in settings that require complex, sequential decision-making, such as virtual assistants, autonomous systems, and multilingual conversational agents. Better handling of multi-step instructions could reduce the need for human intervention and allow more autonomous task execution.

Future Directions

Given SIT's promising results, future work could further diversify the intermediate tasks beyond those demonstrated in the paper, for example with more intricate dummy tasks or context-specific intermediate sub-tasks. Additionally, applying SIT to a wider range of multilingual and multimodal datasets could show how well the approach scales.

In summary, this research advances the LLM field by proposing a targeted mechanism for sequential instruction execution, heralding a step forward in model efficiency, generalization, and applicability in increasingly complex computational tasks.