Improved Baselines with Visual Instruction Tuning

The paper "Improved Baselines with Visual Instruction Tuning" by Haotian Liu et al. studies how to make Large Multimodal Models (LMMs) more effective and cheaper to train through visual instruction tuning. Focusing on the LLaVA framework, it shows that a few simple, targeted modifications substantially improve performance across a range of benchmarks while relying only on publicly available data.

Key Contributions and Methodology

The paper makes two primary contributions: it shows that the fully-connected vision-language connector in LLaVA is effective and data-efficient, and it proposes enhancements that simplify training and improve performance. The authors' modifications include:

- Adopting CLIP-ViT-L-336px with an MLP projection: This swaps in a higher-resolution (336px) CLIP vision encoder and replaces LLaVA's original linear projection with an MLP, giving the vision-language connector more representational capacity.

- Adding academic-task-oriented VQA data with response formatting prompts: The model is fine-tuned on Visual Question Answering (VQA) datasets, and each prompt states the expected answer format explicitly (for example, a short answer), so the model can tell when a brief response is wanted instead of a long conversational one.
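
To illustrate the second modification, here is a minimal sketch of how a response formatting prompt might be appended to a VQA question. The helper `format_vqa_prompt` and its option-lettering scheme are hypothetical, and the two hint strings follow the style of prompt described in the paper; their exact wording should be treated as illustrative and checked against the original.

```python
from typing import Optional, Sequence

# Assumed hint wording; verify against the original paper before reuse.
SHORT_ANSWER_HINT = "Answer the question using a single word or phrase."
MULTIPLE_CHOICE_HINT = "Answer with the option's letter from the given choices directly."


def format_vqa_prompt(question: str, options: Optional[Sequence[str]] = None) -> str:
    """Append an explicit output-format hint to a VQA question (hypothetical helper)."""
    question = question.strip()
    if options:
        # Multiple-choice case: list lettered options, then ask for a letter only.
        letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ"
        option_lines = [f"{letters[i]}. {opt}" for i, opt in enumerate(options)]
        return "\n".join([question, *option_lines, MULTIPLE_CHOICE_HINT])
    # Short-answer case: ask for a single word or phrase.
    return f"{question}\n{SHORT_ANSWER_HINT}"


print(format_vqa_prompt("What color is the bus?"))
print(format_vqa_prompt("Which animal is shown?", ["Cat", "Dog", "Horse"]))
```

Keeping the format hint in the prompt, not baked into the data, means the same model can still give long conversational answers when no hint is present.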

Experimental Results

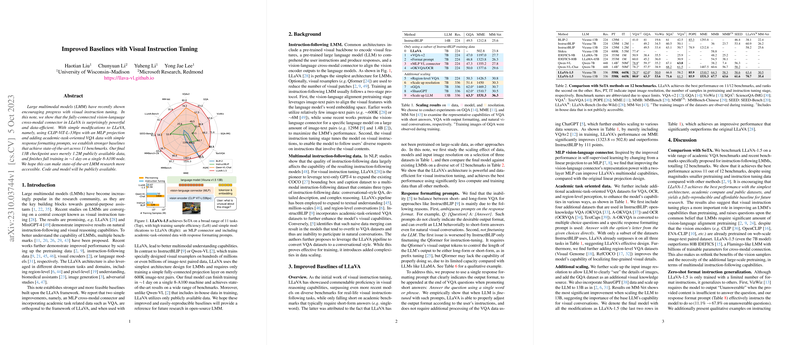

The experimental setup used a relatively modest training corpus of about 1.2 million publicly available data samples and achieved state-of-the-art results across 11 benchmarks. Notably, full training took approximately one day on a single 8-A100 node, highlighting the method's computational efficiency. The results table in the original paper presents a comprehensive comparison, showing the model's superior performance relative to contemporaneous methods such as InstructBLIP and Qwen-VL.

Implications and Future Directions

The results underscore the utility of visual instruction tuning over extensive pretraining. Despite its minimalistic architecture, LLaVA-1.5, enhanced with simple modifications, significantly outperformed models that employ intricate resamplers and extensive pretraining. The findings raise questions about whether large-scale datasets and sophisticated visual resamplers are necessary, suggesting that simpler architectures might suffice for state-of-the-art LMM performance.
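
To make the "minimalistic architecture" concrete, the following is a framework-agnostic NumPy sketch of a two-layer MLP connector that maps vision-encoder patch features into the language model's embedding space. The sizes are assumptions not stated in this summary (14-pixel patches and 1024-dimensional features for CLIP ViT-L, a 4096-dimensional language model embedding), the weights are random placeholders for trained parameters, and the GELU uses the tanh approximation. It is not the authors' implementation.

```python
import math

import numpy as np


def gelu(x: np.ndarray) -> np.ndarray:
    """Tanh approximation of the GELU activation."""
    c = math.sqrt(2.0 / math.pi)
    return 0.5 * x * (1.0 + np.tanh(c * (x + 0.044715 * x**3)))


def init_mlp_connector(vision_dim: int, llm_dim: int, rng: np.random.Generator) -> dict:
    """Random placeholder weights for a two-layer MLP connector."""
    scale = 0.02
    return {
        "w1": rng.standard_normal((vision_dim, llm_dim), dtype=np.float32) * scale,
        "b1": np.zeros(llm_dim, dtype=np.float32),
        "w2": rng.standard_normal((llm_dim, llm_dim), dtype=np.float32) * scale,
        "b2": np.zeros(llm_dim, dtype=np.float32),
    }


def project(patch_features: np.ndarray, params: dict) -> np.ndarray:
    """Linear -> GELU -> Linear, applied independently to every patch token."""
    hidden = gelu(patch_features @ params["w1"] + params["b1"])
    return hidden @ params["w2"] + params["b2"]


# Assumed sizes (not given in the summary above).
IMAGE_SIZE, PATCH_SIZE = 336, 14
VISION_DIM, LLM_DIM = 1024, 4096
num_patches = (IMAGE_SIZE // PATCH_SIZE) ** 2  # one token per image patch

rng = np.random.default_rng(0)
params = init_mlp_connector(VISION_DIM, LLM_DIM, rng)
patch_features = rng.standard_normal((num_patches, VISION_DIM), dtype=np.float32)

visual_tokens = project(patch_features, params)
print(num_patches, visual_tokens.shape)
```

Unlike a resampler, this connector does not compress the sequence: every patch feature becomes one token in the language model's input, which keeps the design simple at the cost of a longer visual sequence.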

The paper opens several avenues for future research, including:

- Exploring visual resamplers: While current resamplers did not match LLaVA's efficiency, more sample-efficient versions could enable more scalable and effective LMMs.

- Enhancing multimodal data integration: Future models could benefit from integrating more diverse and granular datasets, potentially improving understanding in various task-specific contexts without extensive pretraining.

- Addressing computational efficiency: Additional optimizations in training processes and architectural adjustments could further reduce computational loads, making advanced LMM capabilities more accessible.

Conclusion

The paper "Improved Baselines with Visual Instruction Tuning" demonstrates that substantial gains in LMM capabilities can be achieved through simple, strategic modifications to existing frameworks. The enhanced LLaVA-1.5 model's performance across multiple benchmarks suggests a promising direction for future multimodal research that emphasizes efficiency and accessibility without compromising on performance. The paper invites a re-evaluation of current LMM training paradigms and paves the way for further innovations in visual instruction tuning.