Language-Based Instruction Tuning and Its Impact on Multimodal LLMs

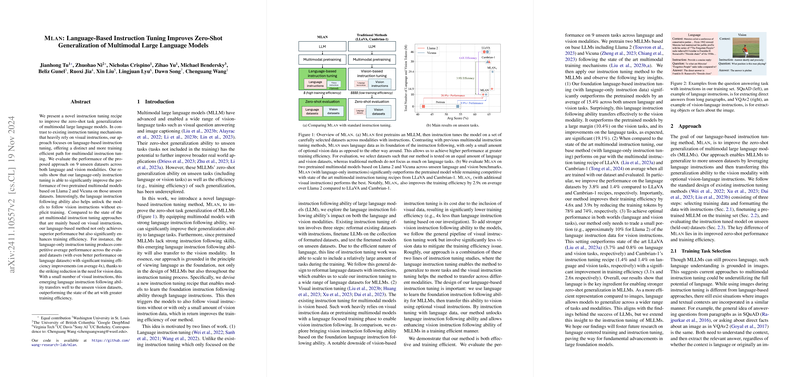

The paper "Mlan: Language-Based Instruction Tuning Improves Zero-Shot Generalization of Multimodal LLMs" explores whether language-based instruction tuning can improve the zero-shot generalization of multimodal large language models (MLLMs). The work is motivated by a limitation of existing instruction tuning methods: they rely predominantly on visual instruction data, which is expensive to process and therefore costly in computation.

Key Contributions and Methodology

The paper's primary contribution is Mlan, an approach built on language-only instruction tuning that helps MLLMs generalize to tasks they were not trained on. This contrasts with the prevailing emphasis on visual instruction tuning for multimodal models. The authors argue that because language data is much cheaper to process than visual data, prioritizing it can substantially improve training efficiency: they report an average gain of roughly 4x, driven by a sharp reduction in the amount of vision data needed during training.
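To make the efficiency argument concrete, the sketch below counts how many tokens the language backbone must process per training sample when some samples carry images and others do not. It is a rough illustration under stated assumptions, not the paper's accounting: it assumes each image is encoded into a fixed 576 visual tokens (the count for a CLIP ViT-L/14 encoder at 336 px, as used in LLaVA-1.5; the models in the paper may use a different encoder), treats compute as proportional to token count (ignoring the quadratic cost of attention), and uses hypothetical mixture sizes. The names `Sample`, `sequence_length`, and `total_tokens` are illustrative.

```python
from dataclasses import dataclass

# Assumption: every image becomes a fixed number of visual tokens.
# (336 // 14) ** 2 = 576 patches for CLIP ViT-L/14 at 336 px (LLaVA-1.5 setup);
# the encoder in the summarized paper may produce a different count.
VISUAL_TOKENS_PER_IMAGE = (336 // 14) ** 2


@dataclass
class Sample:
    text_tokens: int     # instruction + response tokens
    num_images: int = 0  # 0 for a language-only instruction sample


def sequence_length(sample: Sample) -> int:
    """Tokens the LLM backbone processes for one training sample."""
    return sample.text_tokens + sample.num_images * VISUAL_TOKENS_PER_IMAGE


def total_tokens(dataset: list[Sample]) -> int:
    """Crude compute proxy: total tokens across the dataset."""
    return sum(sequence_length(s) for s in dataset)


if __name__ == "__main__":
    # Hypothetical mixtures of 1,000 samples, each with 200 text tokens.
    visual_heavy = [Sample(200, 1) for _ in range(1000)]
    language_heavy = [Sample(200, 1) for _ in range(100)] + [Sample(200) for _ in range(900)]
    ratio = total_tokens(visual_heavy) / total_tokens(language_heavy)
    print(f"visual-heavy / language-heavy token ratio: {ratio:.2f}")
```

With these toy numbers, keeping an image in only one sample out of ten shrinks the token budget about threefold. The paper's reported ~4x figure comes from its own measurements, not from this calculation; the sketch only shows why dropping most image tokens dominates the savings.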

The authors applied Mlan to two pretrained multimodal models, one based on Llama 2 and one based on Vicuna, and evaluated them on nine unseen datasets spanning both language and vision modalities. The goal was to measure zero-shot task generalization: a model's ability to understand and perform tasks it was not explicitly trained on.
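The evaluation protocol hinges on a strict split: no evaluation dataset may appear in the tuning data, and per-dataset scores are then summarized by modality. The following is a minimal sketch of that bookkeeping. The function names, the placeholder dataset names, and the toy predictions are hypothetical and do not correspond to the paper's nine datasets or its results; exact-match accuracy is a stand-in metric and may differ from the metrics the paper uses for each dataset.

```python
from statistics import mean


def check_held_out(train_tasks: set[str], eval_tasks: set[str]) -> None:
    """Raise if any evaluation task was also used during instruction tuning."""
    overlap = train_tasks & eval_tasks
    if overlap:
        raise ValueError(f"evaluation tasks seen during tuning: {sorted(overlap)}")


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that exactly match the reference (whitespace-trimmed)."""
    if len(predictions) != len(references) or not references:
        raise ValueError("need equally sized, non-empty prediction/reference lists")
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)


def average_by_modality(scores: dict[str, float], modality: dict[str, str]) -> dict[str, float]:
    """Average per-dataset scores within each modality, plus an overall mean over datasets."""
    groups: dict[str, list[float]] = {}
    for name, score in scores.items():
        groups.setdefault(modality[name], []).append(score)
    summary = {m: mean(values) for m, values in groups.items()}
    summary["overall"] = mean(list(scores.values()))
    return summary


if __name__ == "__main__":
    # Placeholder task names and toy predictions, for illustration only.
    train = {"train_task_1", "train_task_2"}
    held_out = {"lang_eval_a", "vision_eval_b"}
    check_held_out(train, held_out)

    scores = {
        "lang_eval_a": exact_match_accuracy(["yes", "no"], ["yes", "yes"]),
        "vision_eval_b": exact_match_accuracy(["cat"], ["cat"]),
    }
    modality = {"lang_eval_a": "language", "vision_eval_b": "vision"}
    print(average_by_modality(scores, modality))
```

Reporting language and vision averages separately, alongside the overall mean, mirrors how the findings below distinguish performance on language datasets from performance on vision datasets.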

Findings and Performance

The results suggest that language-only instruction tuning substantially outperforms the pretrained baseline models and remains competitive with state-of-the-art models that use visual instruction tuning, namely LLaVA and Cambrian-1. On language tasks, Mlan performs better than these models. More notably, the instruction-following ability learned from language data transferred to the vision modality, improving performance on vision tasks even without explicit vision-based instruction training. This transfer supports the hypothesis that strong language proficiency can translate into better vision task performance.

Implications and Future Directions

The implications of this research are twofold. Practically, it suggests a shift toward language-dominant instruction tuning, whose efficiency gains make it attractive when computational resources are limited. Theoretically, it underscores the foundational role of language in multimodal understanding and calls for rethinking how instruction data from each modality is used in model training.

Future research could test whether language-based instruction tuning scales to larger and more diverse datasets, and whether it can replace or complement existing methods across different model architectures. Another direction is instruction tuning strategies that dynamically balance language and vision data according to task requirements.
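Dynamic balancing is a direction for future research rather than something the summarized paper implements, so the following is purely an illustrative sketch. One simple form it could take is a sampler that draws each training example from a language pool or a vision pool, with the probability of drawing a vision example following a schedule over training. The linear schedule, its default endpoints, and the names `linear_vision_fraction` and `mixed_stream` are hypothetical; a task-dependent policy could replace the schedule.

```python
import random
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def linear_vision_fraction(step: int, total_steps: int, start: float, end: float) -> float:
    """Hypothetical schedule: interpolate the share of vision samples from start to end."""
    if total_steps <= 1:
        return end
    progress = min(max(step / (total_steps - 1), 0.0), 1.0)
    return start + (end - start) * progress


def mixed_stream(
    language: Sequence[T],
    vision: Sequence[T],
    total_steps: int,
    start: float = 0.0,
    end: float = 0.2,
    seed: int = 0,
) -> Iterator[tuple[str, T]]:
    """Yield (modality, example) pairs, drawing vision examples with a scheduled probability."""
    if not (0.0 <= start <= 1.0 and 0.0 <= end <= 1.0):
        raise ValueError("start and end must be in [0, 1]")
    rng = random.Random(seed)
    for step in range(total_steps):
        p_vision = linear_vision_fraction(step, total_steps, start, end)
        # rng.random() is in [0, 1), so p_vision == 0 never picks vision
        # and p_vision == 1 always does.
        if rng.random() < p_vision:
            yield "vision", rng.choice(vision)
        else:
            yield "language", rng.choice(language)


if __name__ == "__main__":
    text_pool = ["text instruction 1", "text instruction 2"]
    image_pool = ["image instruction 1"]
    counts = {"language": 0, "vision": 0}
    for modality, _ in mixed_stream(text_pool, image_pool, total_steps=1000):
        counts[modality] += 1
    print(counts)
```

Starting with mostly language data and adding a small share of vision data later is only one possible policy; the defaults here are arbitrary and not tuned values.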

In conclusion, language-based instruction tuning is a compelling alternative to conventional vision-heavy tuning: it improves performance on both language and vision tasks while making MLLM training more efficient. The research invites a broader reassessment of the role language can play in advancing multimodal AI systems.