Introduction

This paper explores in-context learning (ICL) with large language models (LLMs) for text classification tasks that have many labels. An LLM's limited context window restricts how many examples fit in a prompt, so only a small fraction of the classes can be demonstrated at once. To work around this, the authors pair the LLM with a separate pre-trained retrieval model, so that for each input the LLM sees only a relevant subset of examples, and therefore of labels. This makes ICL applicable, without fine-tuning, to tasks that were previously considered out of reach for LLMs.

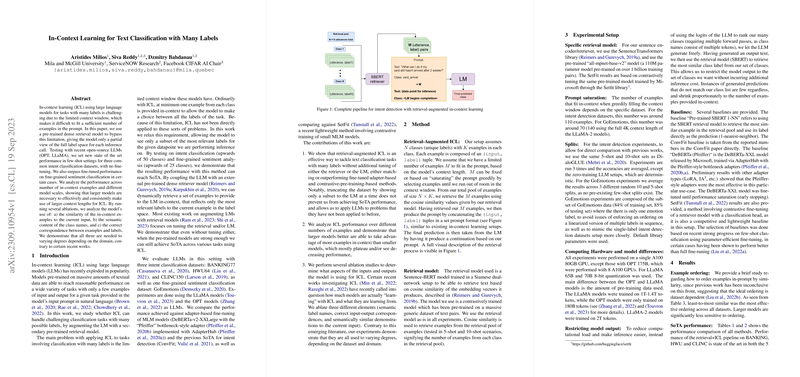

Methodology

The paper introduces retrieval-augmented ICL, in which a dense retrieval model, specifically a Sentence-BERT model pre-trained on large collections of text pairs, dynamically selects in-context examples based on the cosine similarity of their embeddings to the input. The prompt is filled "greedily": examples are added in order of similarity until the LLM's context window is full. At inference time, the LLM generates its output freely, and the same retrieval model then maps that output to the closest class name. This avoids additional computational cost at inference, such as that of scoring every candidate label with the LLM.
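The pipeline described above (similarity-ranked retrieval, greedy prompt filling, and mapping free-form output back to a class) can be sketched as follows. This is a minimal sketch under stated assumptions, not the paper's implementation: `embed` is a toy bag-of-words stand-in for a Sentence-BERT encoder (in practice a real encoder, for example one loaded through the sentence-transformers library, would replace it), `n_tokens` approximates tokens by whitespace-separated words, and the prompt template, example pool, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    # ASSUMPTION: toy bag-of-words vector standing in for a Sentence-BERT encoder.
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def n_tokens(text):
    # ASSUMPTION: crude token count (whitespace-separated words), not a real tokenizer.
    return len(text.split())


def format_example(text, label):
    # Hypothetical prompt template for one demonstration.
    return f"Input: {text}\nLabel: {label}\n"


def build_prompt(query, pool, budget):
    """Greedily add the (text, label) pairs most similar to `query`
    until the next one would exceed `budget` tokens."""
    q = embed(query)
    ranked = sorted(pool, key=lambda ex: cosine(q, embed(ex[0])), reverse=True)
    tail = f"Input: {query}\nLabel:"
    used = n_tokens(tail)  # reserve room for the query itself
    demos = []
    for text, label in ranked:
        block = format_example(text, label)
        cost = n_tokens(block)
        if used + cost > budget:
            break  # prompt is full: stop at the first example that does not fit
        demos.append(block)
        used += cost
    return "".join(demos) + tail, used


def match_label(generation, labels):
    # Map free-form LLM output to the class name with the highest cosine similarity.
    g = embed(generation)
    return max(labels, key=lambda label: cosine(g, embed(label)))


# Hypothetical example pool of (text, label) pairs.
pool = [
    ("my card has not arrived yet", "card_arrival"),
    ("how do I top up my account", "top_up"),
    ("the card still has not come in the mail", "card_arrival"),
    ("I want to cancel a transfer", "cancel_transfer"),
]
prompt, used = build_prompt("when will my card arrive", pool, budget=30)
print(prompt)
print(used)
# Pretend the LLM generated "Card arrival"; snap it to a known class name.
print(match_label("Card arrival", ["card_arrival", "top_up", "cancel_transfer"]))
```

With a 30-token budget, the two most similar examples fit and the third would overflow, so filling stops there. Stopping at the first overflow keeps the retained examples strictly in similarity order; a variant that skips oversized examples and keeps scanning would pack the window more tightly at the cost of admitting less similar examples.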

Experimental Insights

Retrieval-augmented ICL performs strongly: it achieves state-of-the-art (SoTA) results in few-shot settings on several intent classification benchmarks and, in some fine-grained sentiment analysis settings, even outperforms fine-tuned approaches. The paper also examines how much three factors contribute: the semantic content of the class names, correct correspondence between examples and their labels, and the similarity of the in-context examples to the current input. The importance of each factor varies across datasets. Finally, the results show that model scale is crucial for making use of a larger number of in-context examples.
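One way to probe the three factors above is to perturb the demonstrations before building the prompt. The following is a hypothetical sketch of such perturbations, not the paper's experimental code: the function names, the `class_i` naming scheme, the fixed seeds, and the demo data are all assumptions made for illustration.

```python
import random


def obfuscate_labels(demos, labels):
    # Replace every class name with a meaningless ID, removing its semantic content.
    mapping = {label: f"class_{i}" for i, label in enumerate(sorted(labels))}
    return [(text, mapping[label]) for text, label in demos], mapping


def shuffle_labels(demos, seed=0):
    # Randomly permute labels across the demonstrations, breaking (most)
    # example-label correspondences while keeping the same multiset of labels.
    rng = random.Random(seed)
    labels = [label for _, label in demos]
    rng.shuffle(labels)
    return [(text, label) for (text, _), label in zip(demos, labels)]


def random_demos(pool, k, seed=0):
    # Choose k demonstrations uniformly at random instead of by similarity to the input.
    rng = random.Random(seed)
    return rng.sample(pool, k)


# Hypothetical demonstrations of (text, label) pairs.
demos = [
    ("my card has not arrived yet", "card_arrival"),
    ("how do I top up my account", "top_up"),
    ("I want to cancel a transfer", "cancel_transfer"),
]
obfuscated, mapping = obfuscate_labels(demos, {label for _, label in demos})
print(obfuscated)
print(shuffle_labels(demos, seed=1))
print(random_demos(demos, k=2, seed=1))
```

Comparing accuracy on each perturbed prompt against the unperturbed, similarity-ranked prompt gives a rough measure of how much each factor matters on a given dataset.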

Conclusion and Future Directions

The findings show that retrieval-augmented ICL can handle text classification with many labels without further tuning of either the retriever or the LLM, relying instead on their pre-training. They also suggest that larger models are better at exploiting a longer context of in-context examples. The paper concludes by positioning retrieval-augmented ICL as an efficient approach to classification tasks with large label spaces, one that broadens the range of domains and tasks to which LLMs can be applied without fine-tuning.