Overview of "ImageBind-LLM: Multi-modality Instruction Tuning"

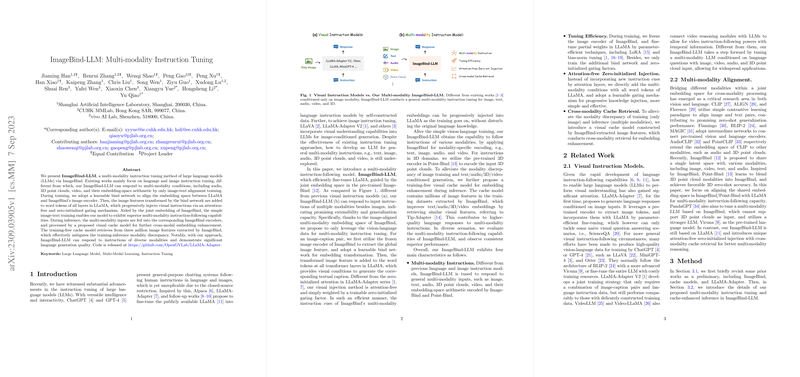

The paper, “ImageBind-LLM: Multi-modality Instruction Tuning,” presents a novel methodology for instruction tuning of LLMs using a method named ImageBind. Unlike previous approaches that predominantly address language and image instruction tuning, ImageBind-LLM is designed to handle a wider range of modalities, such as audio, 3D point clouds, video, and their combinations, using only image-text alignment during training.

Methodology

The core innovation of ImageBind-LLM lies in its ability to integrate multiple modalities into the LLM, particularly the LLaMA, by leveraging a shared embedding space provided by ImageBind. The process involves training on vision-language data, using a learnable bind network that harmonizes the embedding space between LLaMA and ImageBind's image encoder. The image features obtained through the bind network are added directly to the word tokens of the LLaMA across all layers. This integrates visual instructions without relying on attention mechanisms, which are typically more computationally intensive. Additionally, a zero-initialized gating mechanism is used, allowing progressive addition of visual instructions without disturbing existing language knowledge.

Distinct Features

The ImageBind-LLM framework is characterized by several distinct features:

- Multi-modality Integration: The ability to process diverse modalities such as images, audio, 3D point clouds, and video using a unified approach sets ImageBind-LLM apart from existing models.

- Tuning Efficiency: By freezing the image encoder of ImageBind and only fine-tuning selected components within the LLaMA using parameter-efficient techniques like LoRA and bias-norm tuning, ImageBind-LLM achieves cost-effective and resource-efficient training.

- Attention-free Integration: The integration of image features into the LLaMA is done in a straightforward manner, avoiding additional attention layers. This makes the model more efficient and reduces computational overhead.

- Cache Model for Inference Enhancement: A cache model, which stores image features extracted by ImageBind, helps mitigate modality discrepancies during inference, improving embedding quality across conditions.

Numerical Performance and Implications

The model has demonstrated consistent superior performance across various settings through evaluations on traditional vision-language datasets and the MME benchmark. The results underscore the versatility and robustness of ImageBind-LLM in multi-modality understanding and instruction-following capabilities.

Future Prospects

Theoretically, the introduction of ImageBind-LLM opens new avenues in multi-modality learning by showing effective alignment between diverse input types, contributing to broader applications in artificial intelligence. Practically, this approach can significantly influence areas such as autonomous systems, human-computer interaction, and cross-disciplinary computational models.

The research suggests potential for expanding the capabilities of such models by integrating more modalities and exploring further efficiency strategies in parameter tuning and embedding alignment. These future developments could leverage the foundational work of ImageBind-LLM to enhance multi-modal AI systems further, making them more adaptable and reliable across a diverse set of applications.