ImageBind: One Embedding Space to Bind Them All

Overview

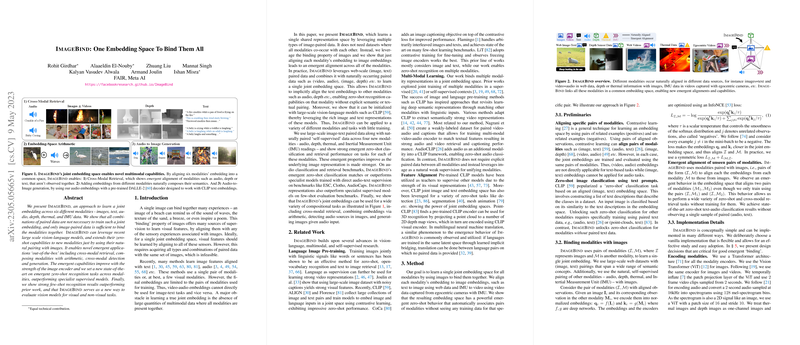

The paper "ImageBind: One Embedding Space to Bind Them All" presents an approach for learning a single multimodal embedding space that binds six modalities: images, text, audio, depth, thermal, and Inertial Measurement Unit (IMU) data. Its primary contribution is showing that image-paired data alone is sufficient to align the modalities in a common embedding space, so extensive multimodal datasets in which all forms of data co-occur are not necessary. ImageBind builds on large-scale vision-language models such as CLIP and extends their zero-shot capabilities to new modalities.

Methodology

ImageBind utilizes a framework where an image is central to creating alignments with other modalities. The following are the primary components of the methodology:

- Contrastive Learning: This is employed to align the embeddings of each modality pair (I, M), where I represents images and M represents another modality (text, audio, depth, thermal, IMU). The InfoNCE loss is specifically leveraged here.

- Pretrained Vision-Language Models: ImageBind initializes its framework using pretrained models like CLIP, enabling it to leverage rich image and text representations available through large-scale web datasets.

- Naturally Paired Self-Supervised Data: Besides image and text pairs, other modalities are paired with images using naturally occurring data like videos paired with audio or IMU data.
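The contrastive step above can be illustrated with a minimal sketch of a symmetric InfoNCE loss over a batch of paired embeddings. This is not the authors' implementation: the function names, the pure-Python list representation, and the temperature value of 0.07 are assumptions chosen for illustration, and a real training setup would use a tensor library with learned encoders.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def info_nce(queries, keys, temperature=0.07):
    """Mean InfoNCE loss; queries[i] and keys[i] are the positive pair.

    All other keys in the batch act as negatives for queries[i].
    The temperature default is an illustrative assumption.
    """
    q = [l2_normalize(v) for v in queries]
    k = [l2_normalize(v) for v in keys]
    losses = []
    for i, qi in enumerate(q):
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        # Numerically stable log-sum-exp over positives and negatives.
        peak = max(logits)
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


def symmetric_info_nce(image_emb, other_emb, temperature=0.07):
    """Sum of the image-to-modality and modality-to-image losses."""
    return (info_nce(image_emb, other_emb, temperature)
            + info_nce(other_emb, image_emb, temperature))


# Toy batch: two image embeddings and two embeddings from another modality.
images = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]     # each row matches its image
misaligned = [[0.1, 0.9], [0.9, 0.1]]  # rows swapped

loss_aligned = symmetric_info_nce(images, aligned)
loss_misaligned = symmetric_info_nce(images, misaligned)
print(loss_aligned < loss_misaligned)
```

The loss is low when each embedding is closest to its own pair and high when the pairs are swapped, which is the signal that pulls a modality toward the image space.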

Numerical Results

ImageBind demonstrates strong performance across various benchmarks involving zero-shot and few-shot classifications:

- Zero-shot Classification:

  - Audio (ESC-50): Achieved 66.9% compared to 68.6% by AudioCLIP (which uses paired audio-text data).

  - Depth (SUN-D): Scored 35.1% versus CLIP-based specialized models which achieved 25.4%.

- Few-shot Classification:

  - Audio (ESC-50): Outperformed self-supervised models such as AudioMAE with up to 40% higher accuracy in low-shot settings.

  - Depth (SUN): ImageBind significantly outperformed similar models trained on multimodal data, like MultiMAE, in few-shot settings.
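In a shared embedding space, zero-shot classification of a non-text modality is commonly done by comparing the sample's embedding with the text embeddings of the class names and picking the closest one. The sketch below shows that lookup under stated assumptions: the three-dimensional vectors are made-up stand-ins for encoder outputs, and `zero_shot_classify` is a hypothetical helper, not part of any ImageBind API.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(query_emb, class_text_embs):
    """Return the label whose text embedding is most similar to the query."""
    scores = {label: cosine(query_emb, emb)
              for label, emb in class_text_embs.items()}
    best_label = max(scores, key=scores.get)
    return best_label, scores


# Hypothetical text embeddings for three class prompts.
class_text_embs = {
    "dog bark": [1.0, 0.1, 0.0],
    "rain": [0.0, 1.0, 0.2],
    "siren": [0.1, 0.0, 1.0],
}

# Hypothetical audio embedding produced by an audio encoder.
audio_emb = [0.8, 0.3, 0.1]

label, scores = zero_shot_classify(audio_emb, class_text_embs)
print(label)
```

No audio-text pairs are needed at this stage; the comparison works only to the extent that both encoders were aligned to the same image space during training.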

Implications and Future Directions

Practical Implications:

- Universal Multimodal Application: ImageBind can serve as a backbone for various applications—ranging from content retrieval systems to multimodal AI systems that need to interpret and generate data across multiple modalities.

- Upgradability of Existing Models: The framework allows upgrading existing vision-based models to incorporate additional modalities with minimal retraining, showcasing extensibility and adaptability.

Theoretical Implications:

- Emergent Alignment: The emergent alignment properties of ImageBind suggest significant potential for theoretical exploration in latent space alignment methodologies, especially in multimodal contexts.

- Cross-Modal Semantic Understanding: The framework opens new directions in understanding and designing AI systems capable of truly integrated semantic understanding using disparate types of input data.

Future Research Directions:

- Expanding Training Data and Modalities: Extending the range of data used for pairing with images could further enhance the robustness and versatility of the embedding space.

- Improved Contrastive Loss: Research into optimizing contrastive loss functions, perhaps through dynamic temperature adjustments or more sophisticated negative sampling, could yield benefits.

- Task-Specific Adaptations: While ImageBind shows strong emergent properties, task-specific adaptations may be needed to take full advantage of the general embeddings in specialized tasks like detection or segmentation.
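To make the temperature idea in the contrastive-loss item concrete, the sketch below shows how the temperature reshapes the softmax over similarity scores, together with one possible way to vary it during training. Both functions are illustrative assumptions: the linear schedule and its start and end values are hypothetical and are not taken from the paper.

```python
import math


def softmax_with_temperature(similarities, temperature):
    """Softmax over similarity scores after dividing by the temperature."""
    scaled = [s / temperature for s in similarities]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def linear_temperature_schedule(step, total_steps, start=0.2, end=0.05):
    """Hypothetical schedule: anneal the temperature linearly from start to end."""
    fraction = min(max(step / total_steps, 0.0), 1.0)
    return start + fraction * (end - start)


# Similarity of one query to its positive (first entry) and two negatives.
similarities = [0.9, 0.7, 0.1]

soft = softmax_with_temperature(similarities, temperature=1.0)
sharp = softmax_with_temperature(similarities, temperature=0.05)
print(soft[0] < sharp[0])
```

A lower temperature concentrates probability on the most similar items, so hard negatives dominate the gradient; a higher temperature spreads it more evenly across the batch.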

Conclusion

ImageBind represents a significant step towards creating a unified multimodal embedding space, leveraging the natural binding properties of images with various modalities. The approach substantially reduces the dependency on large and complex multimodal datasets while still achieving impressive zero-shot and few-shot capabilities across multiple benchmarks. The implications of this work are broad, affecting both practical applications and theoretical studies in multimodal AI research.