The paper "Through the Lens of Core Competency: Survey on Evaluation of Large Language Models," published in August 2023, surveys the evaluation of large language models (LLMs). It addresses the evaluation challenges raised by the shift in NLP from pre-trained language models (PLMs) to LLMs. The authors argue that traditional NLP evaluation tasks and benchmarks are no longer sufficient, given the exceptional performance of LLMs and the expansive range of their real-world applications.

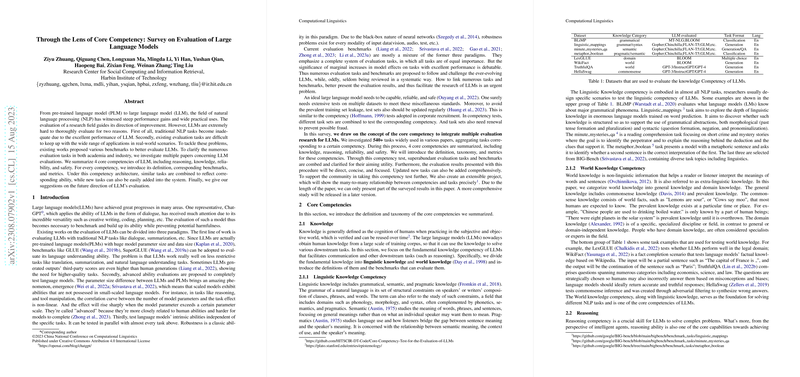

To address these evaluation challenges, the paper introduces a framework centered around four core competencies for assessing LLMs:

- Reasoning:

  - Definition: This competency involves the model's ability to perform logical inferences, understand context, and apply common-sense knowledge to draw conclusions.

  - Benchmarks and Metrics: Benchmarks such as the Winograd Schema Challenge and logical reasoning tasks are included to evaluate this competency.

- Knowledge:

  - Definition: This focuses on the LLM's capacity to store and retrieve information accurately from its training data.

  - Benchmarks and Metrics: Tasks like question answering and factual consistency checks are employed to measure this competency.

- Reliability:

  - Definition: This competency covers the consistency and robustness of the model's outputs, especially in providing reliable information over varied contexts.

  - Benchmarks and Metrics: Robustness checks, adversarial testing, and consistency evaluation metrics are used to assess reliability.

- Safety:

  - Definition: This highlights the importance of ensuring that LLMs generate harmless and ethically sound outputs, mitigating biases and preventing harmful content.

  - Benchmarks and Metrics: Safety benchmarks focus on bias detection, toxicity levels, and ethical evaluations.

The paper details how each of these competencies is defined and measured using various benchmarks and metrics. It also explores how tasks associated with each competency can be combined to provide a composite evaluation, making it easier to add new tasks as the field evolves.
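To make the idea of a composite evaluation concrete, here is a minimal sketch of how per-task scores might be rolled up into one score per competency. It is an illustration, not the paper's method: the task names, the `TASK_COMPETENCY` registry, the `composite_scores` function, the assumption that every score is already normalized to the range 0 to 1, and the choice of an unweighted mean are all hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical registry mapping each task to one core competency.
# The task names are illustrative placeholders, not benchmarks
# prescribed by the paper.
TASK_COMPETENCY = {
    "winograd_schema": "reasoning",
    "logical_reasoning": "reasoning",
    "question_answering": "knowledge",
    "factual_consistency": "knowledge",
    "adversarial_robustness": "reliability",
    "toxicity": "safety",
}


def composite_scores(task_scores, registry=TASK_COMPETENCY):
    """Average task scores within each competency.

    Assumes every score is already normalized to [0, 1], with higher
    meaning better. The unweighted mean is an arbitrary choice made
    for this sketch.
    """
    grouped = defaultdict(list)
    for task, score in task_scores.items():
        if task not in registry:
            raise KeyError(f"unregistered task: {task}")
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {task} is outside [0, 1]: {score}")
        grouped[registry[task]].append(score)
    return {competency: mean(scores) for competency, scores in grouped.items()}


if __name__ == "__main__":
    # Adding a new task only requires one new registry entry.
    extended = {**TASK_COMPETENCY, "paraphrase_consistency": "reliability"}
    results = composite_scores(
        {
            "winograd_schema": 1.0,
            "logical_reasoning": 0.5,
            "paraphrase_consistency": 0.5,
        },
        registry=extended,
    )
    print(results)
```

Keeping the task-to-competency mapping in a single registry means that a new benchmark can be added without touching the aggregation logic, which is one way to read the extensibility the survey describes.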

Lastly, the authors suggest future directions for LLM evaluation, emphasizing the need for dynamic tasks grounded in real-world use and for more comprehensive, transparent metrics that can keep pace with the field's rapid development. This holistic approach aims to standardize evaluation, drive improvements, and ensure that LLM performance aligns with real-world requirements.