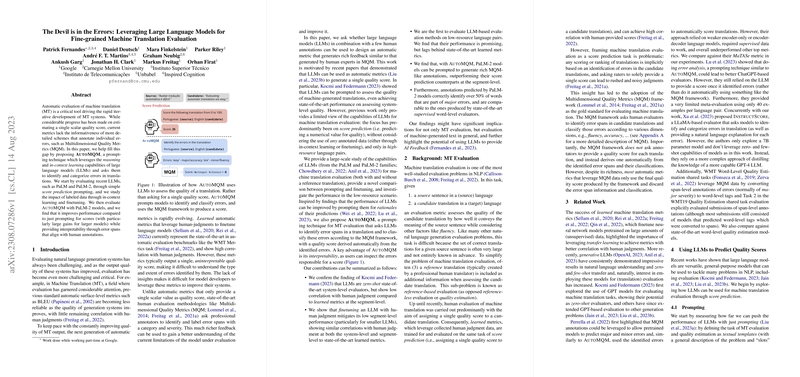

The paper "The Devil is in the Errors: Leveraging Large Language Models for Fine-grained Machine Translation Evaluation" addresses the gap between the scalar quality scores produced by most machine translation (MT) evaluation metrics and fine-grained error annotation schemes, specifically Multidimensional Quality Metrics (MQM).

Background and Motivation

Automatic MT evaluation metrics such as BLEU typically output a single scalar score for translation quality. While useful for ranking systems, such scores lack granularity: they do not indicate which errors a translation contains or where they occur. MQM, by contrast, asks annotators to mark individual error spans and label each with a category (e.g. accuracy or fluency) and a severity (e.g. minor or major). Because this relies on expert human annotators, MQM data is slow and expensive to collect.
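To illustrate how MQM turns span annotations into a single number, the sketch below sums severity weights over a list of annotated errors. It assumes the commonly used WMT-style weights (minor = 1, major = 5) and ignores the special cases some MQM setups add; `Error` and `mqm_score` are hypothetical names, not part of any MQM tooling.

```python
from dataclasses import dataclass

# Assumed severity weights (WMT-style convention: minor = 1, major = 5).
# Special cases used by some MQM setups are deliberately omitted.
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0}


@dataclass
class Error:
    span: str       # the erroneous text in the translation
    category: str   # e.g. "accuracy/mistranslation"
    severity: str   # "minor" or "major"


def mqm_score(errors: list[Error]) -> float:
    """Return a score where 0 means no errors and more negative means worse."""
    penalty = sum(SEVERITY_WEIGHTS[e.severity] for e in errors)
    return -penalty


errors = [
    Error("bank", "accuracy/mistranslation", "major"),
    Error("the the", "fluency/grammar", "minor"),
]
print(mqm_score(errors))  # -6.0
```

Negating the penalty is only a convention so that higher scores mean better translations.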

Main Contributions

The paper's main contribution is AutoMQM, a prompting technique that asks an LLM to identify and categorize the errors in a translation rather than directly predict a quality score. By drawing on the reasoning and in-context learning abilities of LLMs, AutoMQM aims to bridge the gap between score-only evaluation and detailed error annotation.

Methodology

- Baseline Evaluations: The authors first evaluate recent LLMs, such as PaLM and PaLM-2, with straightforward score-prediction prompting, which asks the model for a quality score directly. This serves as a baseline for the more elaborate techniques.

- In-Context Learning and Fine-tuning: The authors study the impact of labeled data in two ways: in-context learning, where annotated examples are included in the prompt, and fine-tuning, where the model's weights are updated on labeled data.

- Introducing AutoMQM:

  - The authors propose a prompting technique that instructs the LLM to identify specific errors in a translation and assign each one a category.

  - The method leverages the LLM's in-context learning ability and aims to improve over simple scalar score prediction.
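The AutoMQM idea can be sketched as three steps: build a few-shot prompt, ask the model to list errors, then parse those errors into a score. This is a minimal illustration, not the paper's prompt: the template, the `severity/category: "span"` answer format, the helper names (`build_prompt`, `parse_errors`, `score_from_errors`) and the minor = 1 / major = 5 weights are all assumptions, and the actual LLM call is replaced by a hard-coded reply.

```python
import re

# Assumed severity weights (WMT-style convention: minor = 1, major = 5).
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0}

# Hypothetical answer format, one error per line:  major/accuracy: "bench"
ERROR_LINE = re.compile(r'^(minor|major)/([\w/\- ]+):\s*"(.*)"\s*$')


def build_prompt(examples, source, translation):
    """Assemble a few-shot prompt: labeled demonstrations, then the query."""
    parts = ["Identify the errors in each translation. List one error per line as "
             'severity/category: "span", or write "no errors".\n']
    for ex in examples:
        parts.append(f"Source: {ex['source']}\nTranslation: {ex['translation']}\n"
                     f"Errors:\n{ex['errors']}\n")
    parts.append(f"Source: {source}\nTranslation: {translation}\nErrors:\n")
    return "\n".join(parts)


def parse_errors(response):
    """Extract (severity, category, span) triples; skip lines that do not match."""
    errors = []
    for line in response.splitlines():
        m = ERROR_LINE.match(line.strip())
        if m:
            errors.append((m.group(1), m.group(2).strip(), m.group(3)))
    return errors


def score_from_errors(errors):
    """Convert parsed errors into an MQM-style score (0 = no errors)."""
    return -sum(SEVERITY_WEIGHTS[sev] for sev, _, _ in errors)


# Usage: one in-context demonstration, then a query.
demo = [{"source": "Das Haus ist klein.",
         "translation": "The house is big.",
         "errors": 'major/accuracy: "big"'}]
prompt = build_prompt(demo, "Ich gehe zur Bank.", "I go to the bench.")
# `prompt` would be sent to an LLM; a fixed reply stands in for its output here.
reply = 'major/accuracy: "bench"\nminor/fluency: "I go"'
errors = parse_errors(reply)
print(errors)  # [('major', 'accuracy', 'bench'), ('minor', 'fluency', 'I go')]
print(score_from_errors(errors))  # -6.0
```

Deriving the score from the listed errors, rather than asking for a number, is what makes the output inspectable: each point of penalty can be traced to a specific span.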

Evaluation and Results

The paper presents a comprehensive evaluation of AutoMQM using PaLM-2 models. Key findings include:

- Improved Performance: AutoMQM improves over baseline score-prediction prompting, and the gains are larger for larger models, suggesting that the technique benefits from scale.

- Interpretability: A significant advantage of AutoMQM is that it produces interpretable output in the form of error spans, which align with human annotations and make the evaluation process more transparent.
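One simple way to quantify how well predicted error spans match human ones is character-level overlap. The sketch below computes precision and recall over character offsets; the function names and the half-open `(start, end)` span representation are hypothetical, and the paper's own span-level meta-evaluation may define its measures differently.

```python
def char_set(spans):
    """Expand half-open (start, end) character spans into a set of indices."""
    return {i for start, end in spans for i in range(start, end)}


def span_precision_recall(predicted, gold):
    """Character-level precision and recall of predicted error spans.

    Returns 0.0 for a measure whose denominator is empty (a chosen convention).
    """
    pred_chars, gold_chars = char_set(predicted), char_set(gold)
    overlap = len(pred_chars & gold_chars)
    precision = overlap / len(pred_chars) if pred_chars else 0.0
    recall = overlap / len(gold_chars) if gold_chars else 0.0
    return precision, recall


# Translation "I go to the bench." with a human-marked error on "bench" (chars 12-16).
predicted = [(12, 17), (0, 1)]  # the model flags "bench" and, wrongly, "I"
gold = [(12, 17)]
precision, recall = span_precision_recall(predicted, gold)
print(precision, recall)  # precision 5/6, recall 1.0
```

Character-level matching gives partial credit when a predicted span only partly overlaps a human one, which exact span matching would not.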

Conclusion

AutoMQM stands out by combining the strength of LLMs in understanding and contextualizing language with the need for fine-grained, interpretable MT evaluation. The method not only improves over direct score prediction but also moves automatic evaluation closer to human-like error categorization, potentially reducing reliance on human annotators.

The paper's findings contribute to advancing the field of machine translation evaluation by proposing an automated, detailed, and interpretable assessment method.