Exploration of Human-Like Translation Strategies in LLMs

The paper under discussion, "Exploring Human-Like Translation Strategy with LLM," has been accepted for publication in Transactions of the Association for Computational Linguistics (TACL). It investigates how large language models (LLMs) can apply translation strategies that mimic the cognitive processes of human translators.

Overview

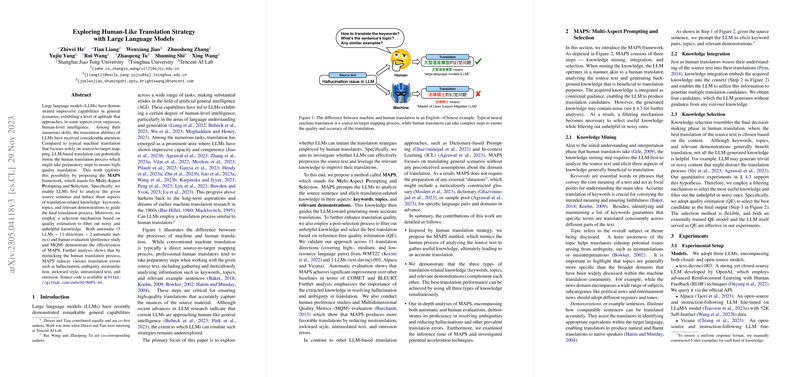

In recent years, LLMs have shown strong performance across many natural language processing tasks, including translation. Even so, a gap remains between machine-generated translations and those produced by human translators. This research aims to narrow that gap by building more human-like strategies into LLM-driven translation. Specifically, the paper asks whether LLMs can go beyond understanding linguistic constructs and also replicate the complex decision-making that human translators typically carry out.

Methodology

The authors combine linguistic theory with modifications to existing model architectures. Their framework addresses both the syntactic and the semantic side of translation, with the goal of bringing machine output closer to the intuitive reasoning of human translators. A notable contribution is a set of cognitively motivated training techniques intended to improve translation fluency and contextual awareness.
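The review describes the framework only at a high level and gives no implementation details. To make the idea of a translator-like decision process concrete, the following is a purely illustrative, inference-time sketch built on our own assumptions; it is not the authors' method and involves no training. It mimics a translator who first analyzes the source (key terms, topic), then drafts several translations, then keeps the best one. The names `human_like_translate`, `Candidate`, `generate`, and `score`, as well as the prompt wording, are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical interfaces: any LLM call and any quality scorer can be plugged in.
Generate = Callable[[str], str]        # prompt -> model output
Score = Callable[[str, str], float]    # (source, translation) -> quality estimate


@dataclass
class Candidate:
    hint: str          # which analysis (if any) guided this draft
    translation: str
    score: float


def human_like_translate(source: str, generate: Generate, score: Score,
                         target_lang: str = "English") -> Candidate:
    """Analyze the source, draft one translation per analysis, keep the best-scoring draft."""
    # Step 1: preparatory analysis, as a human translator might do before writing.
    analyses: Dict[str, str] = {
        "keywords": generate(f"List the key terms in this text and how to translate them:\n{source}"),
        "topic": generate(f"Describe the topic and register of this text in one sentence:\n{source}"),
    }

    # Step 2: one plain draft, plus one draft conditioned on each analysis.
    base_prompt = f"Translate into {target_lang}:\n{source}"
    prompts: Dict[str, str] = {"none": base_prompt}
    for name, note in analyses.items():
        prompts[name] = f"{note}\n\n{base_prompt}"

    candidates: List[Candidate] = []
    for hint, prompt in prompts.items():
        draft = generate(prompt)
        candidates.append(Candidate(hint, draft, score(source, draft)))

    # Step 3: select the draft the scorer prefers; max() keeps the earliest on ties.
    return max(candidates, key=lambda c: c.score)
```

In practice `generate` could wrap any chat-completion API and `score` could be a reference-free quality-estimation model; both are left as parameters because the review does not say what, if anything, the authors used for these roles.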

Results

The empirical results support the claim that incorporating human-like strategies improves translation quality. On automatic metrics, the approach improves BLEU scores as well as other standard translation evaluation metrics. In addition, human evaluators rated the translations for fluency (how natural the output reads) and adequacy (how much of the source meaning is preserved), and their judgments were favourable. Combining automatic and human evaluation strengthens the findings, since each compensates for blind spots in the other.
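BLEU, the main automatic metric named here, scores a system translation by its n-gram overlap with a reference translation, scaled by a brevity penalty for outputs that are too short. The following minimal sketch computes corpus-level BLEU under simplifying assumptions: one reference per sentence, pre-tokenized input, uniform weights over 1- to 4-grams, and no smoothing. The function names are our own, and this is not the evaluation code used in the paper.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all n-grams of order n in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[List[str]], references: List[List[str]],
                max_n: int = 4) -> float:
    """Corpus-level BLEU with one reference per sentence and uniform n-gram weights."""
    clipped = [0] * max_n   # matched n-grams, each clipped to its count in the reference
    total = [0] * max_n     # all n-grams proposed by the hypotheses
    hyp_len = ref_len = 0

    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_counts = ngrams(hyp, n)
            ref_counts = ngrams(ref, n)
            clipped[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            total[n - 1] += sum(hyp_counts.values())

    # Without smoothing, any zero n-gram precision makes the geometric mean zero.
    if hyp_len == 0 or min(clipped) == 0:
        return 0.0

    # Geometric mean of the modified n-gram precisions.
    log_precision = sum(math.log(clipped[i] / total[i]) for i in range(max_n)) / max_n
    # Brevity penalty: only hypotheses shorter than the references are penalized.
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)
```

For example, `corpus_bleu([["the", "cat", "sat", "on", "the"]], [["the", "cat", "sat", "on", "the", "mat"]])` has perfect n-gram precision, but the hypothesis is one token shorter than its reference, so the brevity penalty lowers the score to exp(1 - 6/5), about 0.819. Published results are usually reported on a 0 to 100 scale and computed with a standard tool such as sacreBLEU, whose fixed tokenization keeps scores comparable across papers.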

Implications and Future Work

The main practical implication of this research is that machine translation systems may adapt better to linguistically complex input, which broadens their usefulness in real-world settings. On a theoretical level, the paper contributes to the ongoing discussion of how AI systems can emulate human cognitive strategies, suggesting that such integration can produce more sophisticated and nuanced LLMs.

Looking forward, modelling more intricate cognitive processes, such as cultural understanding and emotional nuance, is a promising direction for research. Closer collaboration between computational linguistics and cognitive psychology could drive further progress in this area. If LLMs can acquire such diverse cognitive skills, they could take on nuanced language tasks that go beyond the conventional scope of syntactic and semantic translation.

In summary, the paper advances our understanding of LLMs while offering practical improvements to translation technology. Its results and methods lay the groundwork for future research that could further narrow the gap between human and machine capabilities in language understanding and generation.