Enhancing Machine Translation Quality through Post-Editing with LLMs

Introduction to the Study

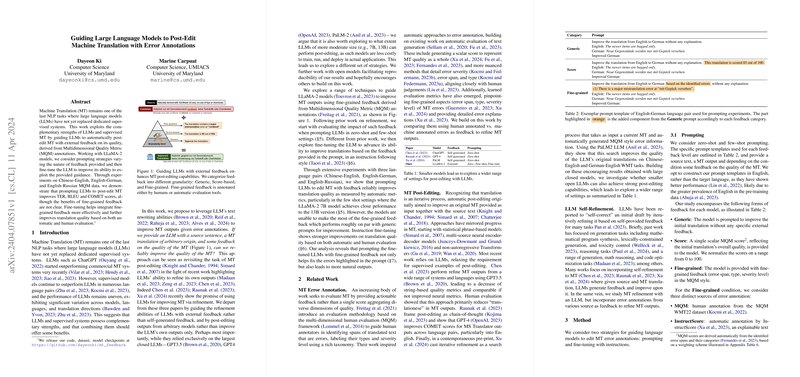

Integrating LLMs into Machine Translation (MT) has become a focal point of NLP research that seeks to use their generative abilities to improve translation quality. This paper presents a methodology that combines LLMs with supervised MT systems through a post-editing framework guided by external feedback on translation quality, using the LLaMA-2 model series as a case study.

Background and Related Work

While LLMs such as ChatGPT show promising results, dedicated supervised systems still marginally outperform LLMs in many language pairs. The persisting challenges and uneven performance across different LLMs have prompted researchers to investigate the complementary strengths of LLMs and supervised systems. This paper builds on several strands of research: the potential of LLMs for text refinement, MT error annotation practices that provide actionable feedback instead of aggregate scores, and automatic post-editing approaches to refine MT outputs. Unlike existing work, which often relies on closed LLMs for post-editing, this paper uses open-source models, namely the 7B and 13B variants of LLaMA-2, and evaluates their efficacy as post-editors.

Methodological Approach

The paper explores two novel strategies: prompting and fine-tuning LLMs with feedback of varying granularity to enhance post-editing performance. The feedback categories are generic prompts, score-based prompts conveying an overall MT quality score, and fine-grained feedback providing detailed error annotations. Fine-grained feedback is further split by the source of the error annotations: human annotators versus automatic tools. This range of feedback types allows a comprehensive examination of how the nature of the feedback affects post-editing outcomes.
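The three feedback categories can be pictured as three variants of one post-editing prompt. The sketch below is illustrative only: the `ErrorSpan` schema, the `build_prompt` function, the prompt wording, and the 0 to 100 score scale are all assumptions made for this example, not the templates used in the paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ErrorSpan:
    """One annotated error in the MT output (hypothetical schema)."""
    text: str      # erroneous span in the translation
    category: str  # e.g. "mistranslation"
    severity: str  # e.g. "major" or "minor"


def build_prompt(source: str, translation: str, feedback: str = "generic",
                 score: Optional[float] = None,
                 errors: Optional[List[ErrorSpan]] = None) -> str:
    """Assemble a post-editing prompt for one of three feedback levels.

    The wording and the 0-100 score scale are assumptions for illustration.
    """
    lines = [f"Source: {source}", f"Translation: {translation}"]
    if feedback == "generic":
        # Generic feedback: no information about what is wrong.
        lines.append("Improve the translation.")
    elif feedback == "score":
        # Score-based feedback: a single aggregate quality score.
        if score is None:
            raise ValueError("score feedback requires a score")
        lines.append(f"The translation has a quality score of {score:.0f} "
                     "out of 100. Improve the translation.")
    elif feedback == "fine-grained":
        # Fine-grained feedback: one line per annotated error span.
        if not errors:
            raise ValueError("fine-grained feedback requires error spans")
        lines.append("The translation contains these errors:")
        for err in errors:
            lines.append(f'- "{err.text}": {err.severity} {err.category} error')
        lines.append("Improve the translation by fixing these errors.")
    else:
        raise ValueError(f"unknown feedback type: {feedback}")
    lines.append("Improved translation:")
    return "\n".join(lines)


if __name__ == "__main__":
    spans = [ErrorSpan("bank", "mistranslation", "major")]
    print(build_prompt("Er saß am Ufer.", "He sat at the bank.",
                       feedback="fine-grained", errors=spans))
```

Whether the error spans come from human annotators or from an automatic tool does not change the prompt shape in this sketch; only the origin of the `errors` list differs.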

Experimental Insights

Prompting Performance

In zero- and ten-shot scenarios, prompting with any feedback type generally improved translation quality metrics (e.g., TER, BLEU, and COMET) across all evaluated language pairs, albeit with marginal gains in zero-shot settings. The improvement was more pronounced in the ten-shot setting, highlighting the potential of few-shot learning to bridge the performance gap between different model sizes. However, the anticipated advantage of fine-grained feedback over generic feedback was not clearly demonstrated in the results.
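To make the edit-based metric concrete, here is a minimal sketch of a word-level edit rate in the spirit of TER. It is a simplification under stated assumptions: real TER also allows block shifts and applies its own tokenization and normalization, none of which is modeled here, and the function name `word_edit_rate` is made up for this example. Lower values mean the output is closer to the reference.

```python
def word_edit_rate(hypothesis: str, reference: str) -> float:
    """Word-level edit distance divided by reference length.

    Simplified stand-in for TER: counts insertions, deletions, and
    substitutions only (no shift operation), with whitespace tokenization.
    """
    hyp = hypothesis.split()
    ref = reference.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the hypothesis words
    # processed so far and the first j reference words.
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # drop a hypothesis word
                            curr[j - 1] + 1,      # add a reference word
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    original = "the cat sat on mat"
    post_edited = "the cat sat on the mat"
    print(word_edit_rate(original, reference))
    print(word_edit_rate(post_edited, reference))
```

In this toy case the original translation is one inserted word away from the six-word reference, while the post-edited output matches it exactly, so the rate drops to zero after post-editing.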

Fine-Tuning Efficacy

Instruction-based fine-tuning yielded a substantial improvement in translation quality over both the original translations and the prompted outputs. In particular, fine-tuning with fine-grained feedback produced larger gains than fine-tuning with generic feedback, suggesting that fine-tuning enables the model to make more effective use of detailed error annotations. Moreover, multilingual fine-tuning slightly outperformed bilingual configurations, indicating potential benefits from cross-lingual learning.
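As a rough illustration of the two training configurations, the sketch below turns annotated examples into instruction/response records, then groups them per language pair (bilingual) and pools them (multilingual). The field names (`src_lang`, `tgt_lang`, `source`, `translation`, `feedback`, `post_edit`), the instruction wording, and the helper functions are hypothetical; the paper's actual data format is not described in this summary.

```python
import json
from typing import Dict, Iterable, List


def to_record(example: Dict[str, str]) -> Dict[str, str]:
    """Turn one annotated example into an instruction/response pair."""
    instruction = (
        f"Source ({example['src_lang']}): {example['source']}\n"
        f"Translation ({example['tgt_lang']}): {example['translation']}\n"
        f"Feedback: {example['feedback']}\n"
        "Improve the translation."
    )
    return {"instruction": instruction, "response": example["post_edit"]}


def build_training_sets(examples: Iterable[Dict[str, str]]) -> Dict[str, object]:
    """Build one record list per language pair plus a pooled list."""
    bilingual: Dict[str, List[Dict[str, str]]] = {}
    for ex in examples:
        pair = f"{ex['src_lang']}-{ex['tgt_lang']}"
        bilingual.setdefault(pair, []).append(to_record(ex))
    # The multilingual set simply pools the records of every pair.
    multilingual = [rec for recs in bilingual.values() for rec in recs]
    return {"bilingual": bilingual, "multilingual": multilingual}


def to_jsonl(records: Iterable[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


if __name__ == "__main__":
    data = [
        {"src_lang": "de", "tgt_lang": "en", "source": "Er saß am Ufer.",
         "translation": "He sat at the bank.",
         "feedback": '"bank" is a major mistranslation error',
         "post_edit": "He sat on the shore."},
        {"src_lang": "en", "tgt_lang": "de", "source": "Good morning.",
         "translation": "Gute Morgen.",
         "feedback": '"Gute" is a minor grammar error',
         "post_edit": "Guten Morgen."},
    ]
    sets = build_training_sets(data)
    print(sorted(sets["bilingual"]))
    print(to_jsonl(sets["multilingual"]))
```

A bilingual run would fine-tune one model per key in `sets["bilingual"]`, while a multilingual run would fine-tune a single model on the pooled list.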

Implications and Prospects

This research underscores the feasibility of employing moderately sized, open-source LLMs for MT post-editing, and invites broader exploration across more diverse language settings and tasks. The findings support creating and using fine-grained error annotations more widely to train LLMs for post-editing, since fine-tuning benefited more from detailed feedback than from generic prompts.

Concluding Remarks

This paper demonstrates that LLMs, even of moderate size, can effectively enhance MT quality through post-editing when guided by external feedback. The superior performance of fine-tuning with fine-grained feedback points to two directions for future research: creating richly annotated MT datasets and assessing the reliability of automatic error annotation tools. The work also opens avenues for further combining the strengths of LLMs and supervised MT systems, with the aim of continued improvements in translation accuracy and fluency.