Overview of Research

The paper examines whether large language models (LLMs) can serve as a basis for cognitive models. Off-the-shelf LLMs do not consistently behave like humans, so the core hypothesis is that fine-tuning pre-trained LLMs on data from behavioral studies lets them replicate human decision-making more accurately.

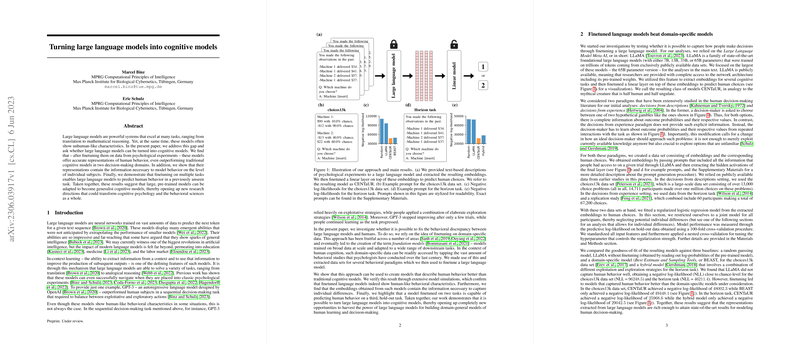

Methodology

The authors selected tasks that capture essential aspects of human decision-making and extracted embeddings from Large Language Model Meta AI (LLaMA), a collection of foundation models available to the research community. They then fine-tuned a logistic regression on top of these embeddings to predict human choices, focusing on two behavioral paradigms: decisions from descriptions and decisions from experience. The resulting model, CENTaUR, combines traits of LLMs and cognitive models. The authors compared CENTaUR's performance against baseline models on tasks involving decision-making under uncertainty.
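The readout step described above can be illustrated with a minimal sketch. It is not the authors' implementation: random vectors stand in for LLaMA embeddings, the choices are simulated, and the function names, regularization strength, and optimizer settings are all assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic_readout(embeddings, choices, l2=1.0, lr=0.1, steps=2000):
    """Fit an L2-regularized binary logistic regression on frozen embeddings.

    Only the readout weights are learned; the embeddings are never updated.
    """
    n, d = embeddings.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        p = sigmoid(embeddings @ w + b)
        # Gradient of the mean negative log-likelihood plus the L2 penalty.
        grad_w = embeddings.T @ (p - choices) / n + l2 * w / n
        grad_b = np.mean(p - choices)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def predict_proba(embeddings, w, b):
    """Predicted probability of choosing option 1 on each trial."""
    return sigmoid(embeddings @ w + b)

# Hypothetical stand-in data: one random "embedding" per trial and a
# simulated binary choice (real embeddings would come from the LLM).
rng = np.random.default_rng(0)
n_trials, dim = 400, 16
X = rng.normal(size=(n_trials, dim))
true_w = rng.normal(size=dim)
y = (rng.random(n_trials) < sigmoid(X @ true_w)).astype(float)

w, b = fit_logistic_readout(X, y)
p = np.clip(predict_proba(X, w, b), 1e-12, 1 - 1e-12)

# Goodness of fit: mean negative log-likelihood (chance level is log 2).
nll = -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))
accuracy = np.mean((p > 0.5) == (y == 1))
print(f"mean NLL: {nll:.3f}, accuracy: {accuracy:.3f}")
```

Keeping the embeddings fixed and training only a linear readout makes the fit cheap and convex, which is one plausible reason to prefer it over updating the full network.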

Simulation and Analysis of Human-Like Behavior

The authors ran extensive simulations to check that CENTaUR reproduces human-like behavioral characteristics. CENTaUR closely matched human regret levels and displayed exploratory behavior in line with human decision-making strategies. A further analysis of individual differences showed that incorporating random effects substantially improved fit, supporting CENTaUR's suitability for modeling participant-level behavior.
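To make the regret comparison concrete, the sketch below computes mean regret for a sequence of choices in a multi-armed bandit task. The definition used here (expected reward of the best option minus expected reward of the chosen option, averaged over trials) is a common convention and an assumption, since this summary does not state the paper's exact formula. The function name and example values are hypothetical.

```python
import numpy as np

def mean_regret(arm_means, choices):
    """Average per-trial regret.

    arm_means: array of shape (n_trials, n_arms) with each arm's expected reward.
    choices:   array of shape (n_trials,) with the index of the chosen arm.
    """
    arm_means = np.asarray(arm_means, dtype=float)
    choices = np.asarray(choices, dtype=int)
    best = arm_means.max(axis=1)                           # best achievable reward
    chosen = arm_means[np.arange(len(choices)), choices]   # reward of the chosen arm
    return float(np.mean(best - chosen))

# Three illustrative trials of a two-armed task.
arm_means = [[1.0, 0.5], [0.2, 0.8], [0.3, 0.3]]
choices = [0, 0, 1]
print(mean_regret(arm_means, choices))
```

Applying the same function to simulated model choices and to recorded human choices gives two numbers that can be compared directly.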

Generalization to Hold-Out Tasks

To stress-test the model, the authors examined CENTaUR's generalization capability by applying it to a third, previously unseen task. The fine-tuned CENTaUR not only generalized to the new task but also showed qualitative alignment with human decision-making biases, underscoring the model's potential to predict human behavior across scenarios.

Implications and Future Directions

The paper illustrates the potential of fine-tuned LLMs as a step toward models that generalize across the spectrum of human cognition. The researchers advocate scaling the approach to additional tasks from the psychological literature, with the aim of a unified, domain-general model of human cognition. More broadly, the work has implications for cognitive and behavioral science research, including rapid experiment design, behavioral policy, and a deeper understanding of human behavior through model explainability techniques. The success of such models hinges on how richly LLM embeddings capture the information underlying human cognitive processes.