Introduction to PALR

A paper presents a new framework called PALR (Personalization Aware LLMs for Recommendation). PALR is designed to improve recommender systems by combining users' historical interactions, such as clicks, purchases, and ratings, with large language models (LLMs) to generate item recommendations that match each user's preferences. The authors propose a novel way of using LLMs for recommendation that puts user personalization at its center.

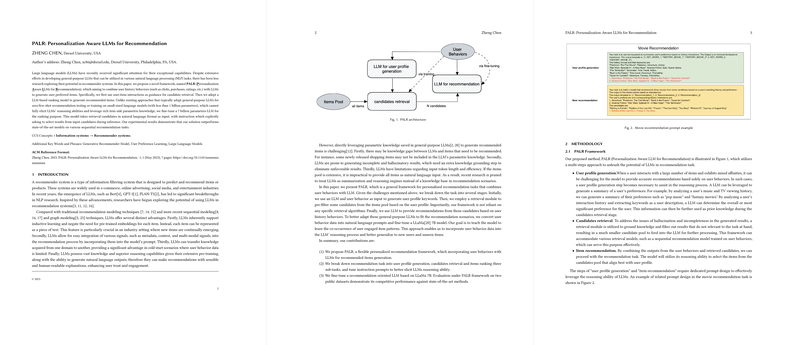

PALR: A Novel Recommendation Framework

At its core, PALR is a multi-step process. First, an LLM generates a profile for each user from that user's interactions with items. Next, a retrieval module pre-filters a set of candidates from the large item pool based on these profiles; importantly, any retrieval algorithm can be used at this stage. Finally, the LLM ranks these candidates according to the user's historical behavior.
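To make the three stages concrete, here is a minimal Python sketch of the data flow. It is not the authors' implementation. `generate_profile`, `retrieve_candidates`, `rank_candidates`, and `palr_style_recommend` are hypothetical names. The profile step is a simple genre-frequency summary standing in for the LLM, and `score_fn` is a placeholder for the fine-tuned LLM ranker. The retrieval function can be swapped for any algorithm, mirroring the framework's retrieval-agnostic design.

```python
from collections import Counter
from typing import Callable

# Hypothetical stage functions illustrating PALR's data flow; not the paper's code.

def generate_profile(history: list[str], item_genres: dict[str, str]) -> list[str]:
    """Stand-in for LLM profile generation: the user's two most frequent genres."""
    counts = Counter(item_genres[item] for item in history)
    return [genre for genre, _ in counts.most_common(2)]


def retrieve_candidates(profile: list[str], history: list[str],
                        item_genres: dict[str, str], limit: int) -> list[str]:
    """Any retrieval algorithm fits here; this one keeps unseen items whose genre is in the profile."""
    seen = set(history)
    matches = [item for item, genre in item_genres.items()
               if genre in profile and item not in seen]
    return matches[:limit]


def rank_candidates(history: list[str], candidates: list[str],
                    score_fn: Callable[[list[str], str], float]) -> list[str]:
    """Stand-in for LLM ranking: sort candidates by a score conditioned on the history."""
    return sorted(candidates, key=lambda c: score_fn(history, c), reverse=True)


def palr_style_recommend(history, item_genres, score_fn, k=2):
    profile = generate_profile(history, item_genres)                        # step 1: profile
    candidates = retrieve_candidates(profile, history, item_genres, k * 2)  # step 2: retrieve
    return rank_candidates(history, candidates, score_fn)[:k]               # step 3: rank


# Toy usage with made-up data.
item_genres = {
    "Toy Story": "animation", "Shrek": "animation", "Up": "animation",
    "Alien": "sci-fi", "Aliens": "sci-fi", "Heat": "crime",
}
history = ["Toy Story", "Alien", "Shrek"]

def same_genre_as_last(history: list[str], candidate: str) -> float:
    # Hypothetical scorer: prefer candidates matching the most recent item's genre.
    return 1.0 if item_genres[candidate] == item_genres[history[-1]] else 0.0

print(palr_style_recommend(history, item_genres, same_genre_as_last))  # ['Up', 'Aliens']
```

Because the ranking stage only sees the retrieved shortlist, the expensive model call in a setup like this is limited to a handful of candidates rather than the full item catalog.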

Fine-Tuning LLM for Task Specificity

Critical to PALR's success is fine-tuning a 7-billion-parameter LLaMA model to the specifics of recommendation tasks. During training, user behavior is converted into natural-language prompts that the model can process, teaching it to recognize patterns in user engagement and, in turn, to generate relevant item recommendations. The framework was evaluated on two different datasets, where it outperformed existing state-of-the-art models on several sequential recommendation tasks.
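The prompt-conversion step can be illustrated with a small Python sketch that turns a chronological interaction list into one (prompt, target) training pair for next-item prediction. The template wording, the `TrainingExample` class, and `build_example` are hypothetical; the paper's actual instruction templates may differ. The most recent interaction is held out as the target, and an optional candidate list mimics the retrieve-then-rank setting.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrainingExample:
    prompt: str  # natural-language input fed to the LLM
    target: str  # text the model is trained to generate


def build_example(interactions: list[str],
                  candidates: Optional[list[str]] = None) -> TrainingExample:
    """Turn a chronological interaction list into a next-item training pair.

    The last interaction is held out as the target. The template wording is
    hypothetical and only illustrates the behavior-to-prompt conversion.
    """
    if len(interactions) < 2:
        raise ValueError("need at least two interactions to form a (history, target) pair")
    history, target = interactions[:-1], interactions[-1]
    lines = ["The user has watched the following movies in order:"]
    lines += [f"{i}. {title}" for i, title in enumerate(history, start=1)]
    if candidates:
        # Re-ranking variant: the model must pick from a retrieved shortlist.
        lines.append("Candidates: " + ", ".join(candidates))
        lines.append("Recommend the candidate the user is most likely to watch next.")
    else:
        lines.append("Recommend the movie the user is most likely to watch next.")
    return TrainingExample(prompt="\n".join(lines), target=target)


example = build_example(["Toy Story", "Alien", "Shrek", "Up"],
                        candidates=["Up", "Heat", "Aliens"])
print(example.prompt)
print("->", example.target)
```

Holding out the most recent item keeps the training target aligned with the sequential next-item task described above.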

Experimental Results and Future Implications

Experiments on two public datasets, MovieLens-1M and Amazon Beauty, showed that PALR significantly outperforms state-of-the-art methods. Notably, PALR proved effective at re-ranking items, indicating substantial gains over traditional approaches in sequential recommendation. The findings encourage further work on optimizing LLMs for recommendation tasks, balancing their powerful capabilities against the need for computational efficiency and low latency.