LLaMA-Adapter V2: Enhancing Parameter-Efficiency in Visual Instruction Models

Introduction

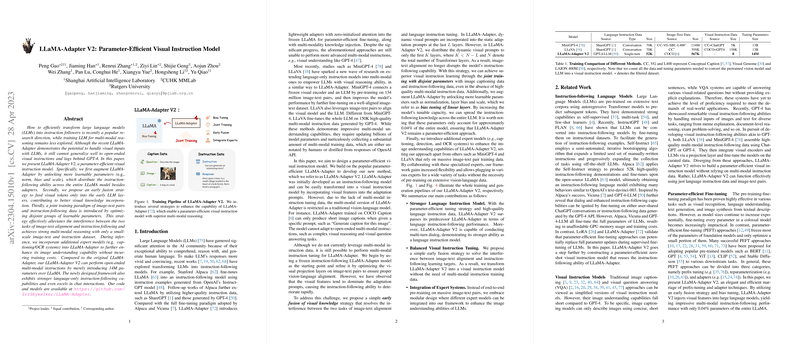

The evolution of large language models (LLMs) for instruction-following tasks, and their extension into the multi-modal domain, marks a significant advance in generative AI. LLaMA-Adapter V2, introduced by Peng Gao et al. from the Shanghai Artificial Intelligence Laboratory and CUHK MMLab, is a parameter-efficient visual instruction model. Building on its predecessor, LLaMA-Adapter, it accepts visual inputs alongside textual instructions, which lets it handle tasks that require understanding both image and text data. The model performs open-ended multi-modal instruction following, making it a capable tool for understanding and reasoning over visual content.

Model Innovations and Strategies

Bias Tuning for Enhanced Instruction Following

LLaMA-Adapter V2 introduces a bias tuning mechanism that unlocks additional learnable parameters inside LLaMA itself: the normalization layers, plus newly added bias and scale factors. This distributes instruction-following ability across the entire LLaMA model instead of confining it to the adapters. The addition is lightweight. The extra parameters amount to only about 0.04% of the model's total, so the model stays parameter-efficient.
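The mechanism can be illustrated on a single frozen linear layer. The sketch below is a minimal illustration, assuming the per-layer form y = s * (W x + b), where W is the frozen pretrained weight, b is a learnable per-output bias initialized to zero, and s is a learnable per-output scale initialized to one. The class name `BiasTunedLinear` is hypothetical, and plain Python lists stand in for tensors.

```python
# Minimal sketch of bias tuning on one frozen linear layer.
# Assumption: y = s * (W x + b), with b initialized to 0 and s to 1.
# BiasTunedLinear is a hypothetical name used only for illustration.
from typing import List, Tuple


class BiasTunedLinear:
    def __init__(self, weight: List[List[float]]):
        # Pretrained weight matrix W (d_out rows x d_in columns), kept frozen.
        self.weight = weight
        d_out = len(weight)
        # Learnable parameters: zero bias and unit scale, so the layer
        # initially reproduces the pretrained output exactly.
        self.bias = [0.0] * d_out
        self.scale = [1.0] * d_out

    def forward(self, x: List[float]) -> List[float]:
        out = []
        for row, b, s in zip(self.weight, self.bias, self.scale):
            wx = sum(w * xi for w, xi in zip(row, x))  # frozen W x
            out.append(s * (wx + b))                   # learnable bias and scale
        return out

    def parameter_counts(self) -> Tuple[int, int]:
        # Returns (trainable, frozen) parameter counts for this layer.
        trainable = len(self.bias) + len(self.scale)
        frozen = sum(len(row) for row in self.weight)
        return trainable, frozen


if __name__ == "__main__":
    layer = BiasTunedLinear([[1.0, 2.0], [3.0, 4.0]])
    print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x at init
    print(layer.parameter_counts())   # (4, 4)
```

Because b starts at zero and s at one, training begins from LLaMA's original behavior. For a square layer of width d, this adds 2d trainable values against d² frozen weights, which is why the overhead stays small as models grow.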

Joint Training with Disjoint Parameters

LLaMA-Adapter V2 proposes a joint training paradigm that balances the model's performance on two tasks: image-text alignment and instruction following. It assigns disjoint groups of learnable parameters to the two tasks and updates only the group that matches the type of data being processed, either image-text pairs or instruction-following data. This approach removes the need for high-quality multi-modal instruction data, which is costly to collect.
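The parameter-switching logic can be sketched as a training step that consults the batch type. This is a toy illustration under stated assumptions: each parameter group is reduced to a single float, the group names in `PARAM_GROUPS` are hypothetical, and the assignment of groups to data types (visual projection and early attention gates for image-text pairs; late prompts, norms, biases, and scales for instruction data) approximates the paper's description rather than reproducing its exact configuration.

```python
# Toy sketch of joint training with disjoint parameter groups.
# Assumptions: each group is a single float, and the group names and their
# assignment to data types are hypothetical approximations.
from typing import Dict, Set

PARAM_GROUPS: Dict[str, Set[str]] = {
    "image_text": {"visual_projection", "early_attention_gates"},
    "instruction": {"late_prompts", "late_attention_gates", "norm", "bias", "scale"},
}

# Sanity check: the two groups must not share any parameter.
assert PARAM_GROUPS["image_text"].isdisjoint(PARAM_GROUPS["instruction"])


def training_step(params: Dict[str, float], grads: Dict[str, float],
                  batch_type: str, lr: float = 0.1) -> Dict[str, float]:
    """Apply one SGD step, updating only the group selected by batch_type."""
    active = PARAM_GROUPS[batch_type]
    return {
        name: (value - lr * grads.get(name, 0.0)) if name in active else value
        for name, value in params.items()
    }


if __name__ == "__main__":
    params = {name: 1.0 for group in PARAM_GROUPS.values() for name in group}
    grads = {name: 1.0 for name in params}
    updated = training_step(params, grads, "image_text", lr=0.5)
    print(updated["visual_projection"], updated["late_prompts"])  # 0.5 1.0
```

Because the groups never overlap, an image-text batch leaves the instruction-following parameters untouched, and vice versa.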

Early Fusion of Visual Knowledge

To mitigate interference between learning from image-text pairs and learning from instruction data, LLaMA-Adapter V2 employs an early fusion strategy. Visual tokens are fed only into the early layers of the LLM, while the adaptation prompts for instruction following are inserted into later layers. Placing the visual and textual prompts in separate layers helps preserve the distinct contribution of each to the model's learning and reasoning.
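The layer assignment can be made concrete with a small scheduling function. It is a sketch, not the paper's implementation: the function name is hypothetical, and the example values (32 layers, as in LLaMA-7B, with visual tokens in the first layer and adaptation prompts in the last 30) are illustrative assumptions.

```python
# Sketch of early fusion: decide which prompt types each Transformer layer gets.
# The function name and the example layer counts are illustrative assumptions.
from typing import Dict, List


def prompt_schedule(num_layers: int, visual_layers: int,
                    adapter_layers: int) -> Dict[int, List[str]]:
    """Map each layer index to the prompt types injected there.

    Visual tokens go into the first `visual_layers` layers; learnable
    adaptation prompts go into the last `adapter_layers` layers.
    """
    if visual_layers + adapter_layers > num_layers:
        # Early fusion keeps the two prompt types in separate layers.
        raise ValueError("visual and adaptation prompts would share layers")
    schedule: Dict[int, List[str]] = {}
    for i in range(num_layers):
        kinds: List[str] = []
        if i < visual_layers:
            kinds.append("visual")
        if i >= num_layers - adapter_layers:
            kinds.append("adaptation")
        schedule[i] = kinds
    return schedule


if __name__ == "__main__":
    schedule = prompt_schedule(num_layers=32, visual_layers=1, adapter_layers=30)
    print(schedule[0], schedule[1], schedule[2])  # ['visual'] [] ['adaptation']
```

With these values, layer 0 receives visual tokens, layer 1 receives neither, and layers 2 to 31 receive adaptation prompts. Raising an error on overlap encodes the design goal of keeping visual and textual prompts apart.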

Integration of Expert Models

LLaMA-Adapter V2 is also designed to work in tandem with external expert models (e.g., captioning or OCR systems) during inference. This modular design improves the model's understanding of visual content without requiring extensive vision-language training data, and it adds flexibility: users can plug in different expert systems depending on the task, which makes the model a versatile component in complex AI applications.
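A minimal sketch of the plug-in pattern follows, assuming expert outputs are passed to the language model as extra textual context in the prompt. The `build_prompt` function, the prompt template, and the stub experts are hypothetical; real captioning or OCR models would replace the lambdas.

```python
# Sketch of plugging expert models into the prompt at inference time.
# Assumption: expert outputs become extra text context for the LLM.
# build_prompt, the template, and the stub experts are hypothetical.
from typing import Callable, Dict

# An expert takes raw image bytes and returns a text description.
Expert = Callable[[bytes], str]


def build_prompt(instruction: str, image: bytes,
                 experts: Dict[str, Expert]) -> str:
    """Run each expert on the image and prepend its output as context."""
    parts = [f"{name}: {expert(image)}" for name, expert in experts.items()]
    parts.append(f"Instruction: {instruction}")
    parts.append("Response:")
    return "\n".join(parts)


if __name__ == "__main__":
    stub_experts = {
        "Caption": lambda img: "a storefront with a red sign",
        "OCR": lambda img: "OPEN 24 HOURS",
    }
    print(build_prompt("What does the sign say?", b"", stub_experts))
    # Caption: a storefront with a red sign
    # OCR: OPEN 24 HOURS
    # Instruction: What does the sign say?
    # Response:
```

Keeping experts behind a simple callable interface is what makes them swappable: adding or removing an expert changes only the dictionary passed in, not the model.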

Performance and Implications

In the authors' comparisons, LLaMA-Adapter V2 outperforms its predecessor and other contemporary models on several tasks, including language instruction following and image captioning. The model also performs well on open-ended multi-modal instructions and chat interactions while adding only a small number of parameters on top of LLaMA.

These results underscore how much parameter-efficient modeling can achieve. By addressing multi-modal reasoning and instruction following together with a parameter-efficient approach, LLaMA-Adapter V2 paves the way for more sustainable and scalable AI models. Its ability to integrate external expert systems also opens new avenues for applications that require nuanced understanding and generation across data modalities.

Future Directions

Looking ahead, further integration with expert systems and fine-tuning LLaMA-Adapter V2 on multi-modal instruction datasets are promising directions. Continued innovation in parameter-efficient fine-tuning (PEFT) methods, building on LoRA and related techniques, is expected to extend the capabilities of LLaMA-Adapter V2 and its successors.

In conclusion, LLaMA-Adapter V2 reflects the ongoing evolution of AI models toward greater efficiency, adaptability, and sophistication in complex multi-modal tasks. It is a significant milestone in developing AI tools that work fluently across language and visual data, broadening the range of domains where AI can be applied.