Overview of BloombergGPT: An LLM for Finance

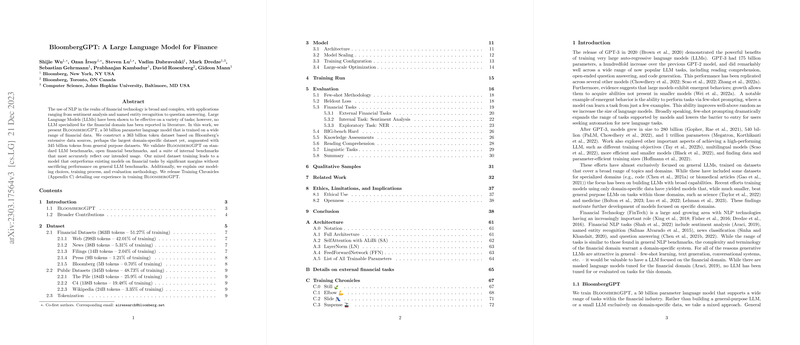

The paper presents BloombergGPT, a domain-specific LLM tailored for the financial sector. The model has 50 billion parameters and is trained on 363 billion tokens drawn from Bloomberg's extensive financial data, perhaps one of the most substantial domain-specific datasets available. The training corpus also includes 345 billion tokens from general-purpose datasets. This mixed-data strategy lets BloombergGPT perform strongly on financial tasks while remaining competitive on general NLP benchmarks.

Dataset and Methodology

The authors introduce "FinPile," a dataset derived from Bloomberg's archival financial documents, covering data types such as news, filings, press releases, and social media. The construction of the training corpus emphasized data quality, including de-duplication. FinPile is augmented with public datasets such as C4 and The Pile, yielding a balanced corpus of over 700 billion tokens.
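As a quick sanity check on the figures quoted in this overview, the sketch below adds the two token counts and computes each source's share of the combined corpus. The function name `corpus_mix` is a hypothetical helper introduced only for illustration; the two token counts are the only values taken from the text.

```python
# Token counts as quoted in the overview (in billions of tokens).
FINANCIAL_TOKENS_B = 363  # FinPile
PUBLIC_TOKENS_B = 345     # general-purpose public datasets


def corpus_mix(financial_b: float, public_b: float) -> dict:
    """Return the combined size and each source's share of the corpus.

    Hypothetical helper for illustration; not part of the paper.
    """
    total = financial_b + public_b
    return {
        "total_b": total,
        "financial_share": financial_b / total,
        "public_share": public_b / total,
    }


mix = corpus_mix(FINANCIAL_TOKENS_B, PUBLIC_TOKENS_B)
print(f"total: {mix['total_b']}B tokens")
print(f"financial: {mix['financial_share']:.1%}, public: {mix['public_share']:.1%}")
```

On these numbers the split is close to even, which is consistent with the description of the corpus as balanced between domain-specific and general data.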

The model uses a BLOOM-style architecture and aims to balance performance on domain-specific and general tasks. Following the principles of the Chinchilla scaling laws, the authors chose the model size and amount of training data to make efficient use of a fixed compute budget.
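To give a rough sense of what Chinchilla-style sizing involves, the sketch below applies two widely used rules of thumb: roughly 20 training tokens per parameter for a compute-optimal model, and roughly 6 FLOPs per parameter per training token. Both are approximations and assumptions here, not the paper's exact procedure. The function names are hypothetical, and a single pass over the full 708-billion-token corpus is assumed purely for illustration.

```python
def chinchilla_optimal_tokens(n_params: float, tokens_per_param: float = 20.0) -> float:
    """Rule-of-thumb compute-optimal token count (assumes ~20 tokens per parameter)."""
    return n_params * tokens_per_param


def approx_training_flops(n_params: float, n_tokens: float) -> float:
    """Common approximation: about 6 FLOPs per parameter per training token."""
    return 6.0 * n_params * n_tokens


N_PARAMS = 50e9         # 50 billion parameters, as quoted in the overview
CORPUS_TOKENS = 708e9   # 363B + 345B tokens; one full pass is an assumption

optimal = chinchilla_optimal_tokens(N_PARAMS)
flops = approx_training_flops(N_PARAMS, CORPUS_TOKENS)

print(f"heuristic compute-optimal tokens: {optimal:.2e}")
print(f"approx. FLOPs for one pass over the corpus: {flops:.2e}")
print(f"corpus / heuristic optimum: {CORPUS_TOKENS / optimal:.2f}")
```

Under these assumptions the available corpus is somewhat smaller than the heuristic optimum for a 50-billion-parameter model, which illustrates why data availability and compute limits have to be weighed together when choosing a model size.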

Key Results and Evaluation

BloombergGPT's evaluation covered two categories of tasks: finance-specific and general-purpose. On the financial tasks, the model outperformed existing models by a clear margin, particularly on sentiment analysis and financial question answering. Notably, it surpassed models such as GPT-NeoX and OPT on typical finance-specific tasks.

For general-purpose tasks, BloombergGPT was assessed using BIG-bench Hard and MMLU benchmarks, among others. Despite its specialization, the model retained capabilities comparable to larger general-purpose models, showcasing the effectiveness of its mixed training strategy.

Strong Claims and Implications

The most prominent claim is BloombergGPT's leading performance on finance-related NLP tasks, supported by its unique access to a massive, well-curated financial corpus. This result suggests a promising direction for domain-specific models: appropriately combining domain-specific and generic data can yield strong in-domain results without compromising general applicability.

The paper highlights the implications of deploying BloombergGPT in financial sectors, suggesting profound benefits for time-sensitive financial analytics. Moreover, this approach underscores the potential for similar applications in other sectors, emphasizing the significance of harnessing domain-appropriate datasets alongside general ones.

Future Perspectives

The research opens up avenues for exploring task-specific fine-tuning and for evaluating domain specialization across different sectors. Furthermore, the paper alludes to alternative tokenization strategies that could help the model adapt better to numerically dense domains.
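To make the tokenization point concrete, the sketch below shows one commonly discussed strategy for numerically dense text: splitting every number into individual digits before subword tokenization, so that numbers are represented consistently regardless of length. This overview does not say which strategy the paper adopts, so the approach and the helper name `split_digits` are assumptions chosen only for illustration.

```python
import re

# Match either a single digit, or a run of characters that are
# neither digits nor whitespace. Whitespace is dropped.
_CHUNK = re.compile(r"\d|[^\d\s]+")


def split_digits(text: str) -> list[str]:
    """Pre-tokenize text so that each digit becomes its own chunk.

    Hypothetical helper for illustration; a real tokenizer would then
    apply subword segmentation to the non-digit chunks.
    """
    return _CHUNK.findall(text)


for chunk in split_digits("Revenue rose 12.5% to $1,024M"):
    print(repr(chunk))
```

With this scheme a figure such as `1,024` is never merged into an arbitrary multi-digit token, at the cost of longer sequences for number-heavy documents.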

BloombergGPT serves as a benchmark for future LLM developments within international finance, setting the standard for both procedural documentation ('Training Chronicles') and the marriage of domain-specific knowledge with advanced NLP techniques.

The insights gleaned inspire further exploration into the craft of model alignment, notably in sensitive financial environments. Additionally, the research provides a strong case for scrutinizing tokenization processes and their impact on training outcomes, setting the groundwork for future advancements in LLM training methodologies.