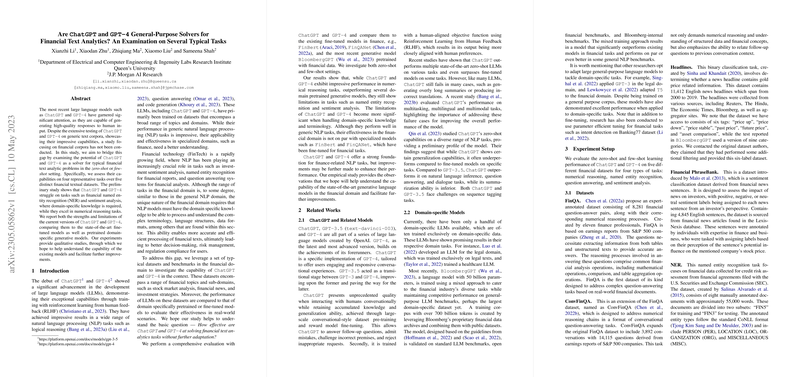

Are ChatGPT and GPT-4 General-Purpose Solvers for Financial Text Analytics? A Study on Several Typical Tasks

This paper critically examines how well state-of-the-art large language models (LLMs) such as ChatGPT and GPT-4 perform in the specialized domain of financial text analytics. The authors use eight benchmark datasets spanning five task categories: sentiment analysis, classification, named entity recognition (NER), relation extraction (RE), and question answering (QA). Together these categories cover a broad range of the financial text analytics tasks typically encountered in industry. The paper compares these generalist models against both fine-tuned models and domain-specific pretrained models.

Methodology and Experimental Setup

The researchers selected datasets that represent different aspects of financial text analytics: Financial PhraseBank, FiQA Sentiment Analysis, TweetFinSent, news headline classification, FIN3 NER, REFinD, FinQA, and ConvFinQA. The tasks range from relatively straightforward sentiment analysis and classification to more complex NER, RE, and QA.

Performance is measured with accuracy, macro-F1, weighted F1, and entity-level F1, depending on the task category.
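These metrics differ mainly in how they aggregate errors across classes or entities. The sketch below is a plain-Python illustration using common textbook definitions, not the authors' evaluation code. Two choices in it are assumptions: macro-F1 is averaged over the union of gold and predicted labels (scikit-learn's default behavior), and entities are represented as hypothetical `(start, end, type)` tuples scored by exact match.

```python
from collections import Counter


def accuracy(gold, pred):
    """Fraction of examples whose predicted label equals the gold label."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def class_f1(gold, pred, label):
    """One-vs-rest F1 for a single class label."""
    tp = sum(g == label and p == label for g, p in zip(gold, pred))
    fp = sum(g != label and p == label for g, p in zip(gold, pred))
    fn = sum(g == label and p != label for g, p in zip(gold, pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def macro_f1(gold, pred):
    """Unweighted mean of per-class F1 (assumption: over gold and predicted labels)."""
    labels = sorted(set(gold) | set(pred))
    return sum(class_f1(gold, pred, lab) for lab in labels) / len(labels)


def weighted_f1(gold, pred):
    """Mean of per-class F1 weighted by each class's frequency in the gold labels."""
    support = Counter(gold)
    return sum(class_f1(gold, pred, lab) * n for lab, n in support.items()) / len(gold)


def entity_f1(gold_entities, pred_entities):
    """Entity-level F1: a predicted entity counts only if span and type match exactly.

    Entities are hypothetical (start, end, type) tuples.
    """
    gold_set, pred_set = set(gold_entities), set(pred_entities)
    tp = len(gold_set & pred_set)
    precision = tp / len(pred_set) if pred_set else 0.0
    recall = tp / len(gold_set) if gold_set else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


if __name__ == "__main__":
    # Toy sentiment labels, invented for illustration.
    gold = ["positive", "negative", "neutral", "neutral", "positive"]
    pred = ["positive", "neutral", "neutral", "neutral", "negative"]
    print(f"accuracy    = {accuracy(gold, pred):.3f}")     # 0.600
    print(f"macro-F1    = {macro_f1(gold, pred):.3f}")     # 0.489
    print(f"weighted F1 = {weighted_f1(gold, pred):.3f}")  # 0.587

    # A span with the right boundaries but the wrong type earns no credit.
    gold_ents = [(0, 2, "ORG"), (5, 6, "LOC")]
    pred_ents = [(0, 2, "ORG"), (5, 6, "PER")]
    print(f"entity F1   = {entity_f1(gold_ents, pred_ents):.3f}")  # 0.500
```

Macro-F1 gives every class equal weight, so the missed rare "negative" class drags it below weighted F1 in this toy example; entity-level F1 is strict in that a correct span with the wrong type counts as both a false positive and a false negative.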

Key Findings

- Performance on Sentiment Analysis and Classification: ChatGPT and GPT-4 perform strongly on sentiment analysis and classification. GPT-4 outperforms domain-specific models such as FinBERT on Financial PhraseBank and achieves competitive scores on the FiQA and TweetFinSent datasets, which illustrates robust generalization to financial contexts. For example, GPT-4 attains a weighted F1 score of 88.11 on FiQA.

- Named Entity Recognition: The models show clear limitations on structured prediction tasks such as NER. GPT-4 reaches an entity-level F1 score of 56.71 on the FIN3 dataset, below both domain-specific models and CRF models trained on similar data. This gap underscores the difficulty LLMs face on domain-specific structured prediction.

- Relation Extraction: On the REFinD dataset, GPT-4 consistently outperforms ChatGPT but falls short of fine-tuned models such as LUKE-base. This suggests that although GPT-4 understands entity relations better than ChatGPT, domain-specific fine-tuning still has the edge in extracting complex relations from financial text.

- Question Answering: GPT-4 excels at numerical reasoning, achieving accuracy significantly higher than fine-tuned models such as FinQANet on FinQA and ConvFinQA. For instance, with Chain-of-Thought (CoT) prompting GPT-4 reaches an accuracy of 78.03 on FinQA, which points to a strong capacity for multi-step reasoning and numerical computation.
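FinQA-style questions are often scored by executing a short arithmetic program over figures taken from a financial report and comparing the result with the gold answer. The sketch below is a hypothetical, heavily simplified executor in that spirit: the four-operation set, the `#k` back-reference syntax (modeled loosely on FinQA-style programs), the 0.1% relative tolerance, and the example figures are all assumptions. It is neither the official FinQA evaluator nor the scoring procedure used in the paper.

```python
import math
import re

# Hypothetical, simplified operation set; real FinQA-style programs support more.
OPS = {
    "add": lambda a, b: a + b,
    "subtract": lambda a, b: a - b,
    "multiply": lambda a, b: a * b,
    "divide": lambda a, b: a / b,
}

# Matches one step such as "divide(#0, 5735)".
STEP_RE = re.compile(r"(\w+)\(([^)]*)\)")


def resolve(arg, results):
    """Turn an argument into a number; '#k' refers to the result of step k."""
    arg = arg.strip()
    if arg.startswith("#"):
        return results[int(arg[1:])]
    return float(arg)


def execute(program):
    """Execute the steps left to right and return the last step's result."""
    results = []
    for op, raw_args in STEP_RE.findall(program):
        a, b = (resolve(x, results) for x in raw_args.split(","))
        results.append(OPS[op](a, b))
    if not results:
        raise ValueError(f"no executable steps in {program!r}")
    return results[-1]


def execution_match(program, gold_answer, rel_tol=1e-3):
    """True if the program runs and its value is within rel_tol of the gold answer."""
    try:
        return math.isclose(execute(program), gold_answer, rel_tol=rel_tol)
    except (KeyError, ValueError, IndexError, ZeroDivisionError):
        return False


if __name__ == "__main__":
    # Illustrative two-step percentage-change program with made-up figures.
    program = "subtract(5829, 5735), divide(#0, 5735)"
    print(execute(program))                      # (5829 - 5735) / 5735, about 0.01639
    print(execution_match(program, 0.0164))      # True within the 0.1% tolerance
    print(execution_match("divide(1, 0)", 0.0))  # False: execution fails
```

Scoring the executed value rather than the program text means two different but equivalent reasoning chains both count as correct, while a malformed or failing program simply counts as wrong.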

Implications

The findings have several practical and theoretical implications. From a practical standpoint, GPT-4 is a viable candidate for a broad array of financial NLP tasks and could reduce the need for domain-specific fine-tuning. For less complex tasks such as sentiment analysis and classification, generalist models like GPT-4 provide a robust baseline without the overhead of dataset-specific adjustments.

However, for more complex tasks such as named entity recognition and relation extraction, specialized models still perform better, which points to a continuing need for domain-specific adaptations of LLMs. Specialized pretraining and fine-tuning strategies tailored to the financial domain are therefore a natural focus for future research.

From a theoretical perspective, the results show that generalist models have significant potential in specialized domains, provided that effective strategies such as few-shot learning and CoT prompting are used. These prompting techniques improve model performance substantially across several of the datasets.
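Few-shot prompting places a handful of solved examples before the query, and chain-of-thought prompting asks the model to write out intermediate reasoning before committing to an answer. The sketch below shows one way such prompts could be assembled for the sentiment task. The instruction wording, the `Demo` record, the answer format, the "Let's think step by step." cue, and the last-label-mentioned parsing rule are hypothetical choices for illustration, not the prompts used in the paper, and the model call itself is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional

LABELS = ("positive", "negative", "neutral")

# Hypothetical instruction text; not the prompt used in the paper.
INSTRUCTION = (
    "Classify the sentiment of the financial sentence as "
    "positive, negative, or neutral."
)


@dataclass
class Demo:
    """One labeled demonstration for few-shot prompting."""
    text: str
    label: str
    rationale: str = ""  # worked reasoning, shown only in chain-of-thought prompts


def render_answer(demo: Demo, chain_of_thought: bool) -> str:
    """Format a demonstration's answer, with its reasoning when CoT is enabled."""
    if chain_of_thought and demo.rationale:
        return f"Answer: Let's think step by step. {demo.rationale} So the answer is {demo.label}."
    return f"Answer: {demo.label}"


def build_prompt(query: str, demos: List[Demo], chain_of_thought: bool = False) -> str:
    """Assemble the instruction, k demonstrations, and the query into one prompt."""
    lines = [INSTRUCTION, ""]
    for demo in demos:  # few-shot: solved examples precede the query
        lines += [f"Sentence: {demo.text}", render_answer(demo, chain_of_thought), ""]
    lines.append(f"Sentence: {query}")
    # With CoT, the answer slot opens with a reasoning cue instead of a bare label.
    lines.append("Answer: Let's think step by step." if chain_of_thought else "Answer:")
    return "\n".join(lines)


def parse_label(response: str) -> Optional[str]:
    """Return the label mentioned last in the model's reply, or None if none appears."""
    lowered = response.lower()
    positions = {label: lowered.rfind(label) for label in LABELS}
    best = max(positions, key=positions.get)
    return best if positions[best] >= 0 else None


if __name__ == "__main__":
    # Invented demonstrations for illustration only.
    demos = [
        Demo("Quarterly revenue rose 12% on strong loan growth.", "positive",
             "Rising revenue is good news for the company."),
        Demo("The firm reported a net loss and announced layoffs.", "negative"),
    ]
    print(build_prompt("Shares fell after the company cut its guidance.", demos,
                       chain_of_thought=True))
    print(parse_label("A guidance cut signals weaker earnings, so the answer is negative."))
```

Taking the last label mentioned, rather than the first, is a simple heuristic for CoT replies, where the reasoning may mention several labels before the final verdict.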

Future Directions

Future research could examine how generalist models can best be combined with domain-specific data, for example through advanced prompting techniques, hybrid models that pair generalist and specialized components, and improved pretraining strategies. Extending the evaluation to more diverse financial NLP tasks, such as symbolic reasoning and other complex logical deduction, would also give a more complete picture of the capabilities and limitations of current LLMs in the financial domain.

In summary, while GPT-4 and ChatGPT are powerful tools for general financial text analytics, more specialized adaptations are still necessary for optimal performance in complex and domain-specific tasks. The paper highlights the promising role of generalist models while encouraging the continued refinement and integration of domain-specific enhancements.