Overview of G-Eval: NLG Evaluation using GPT-4 with Better Human Alignment

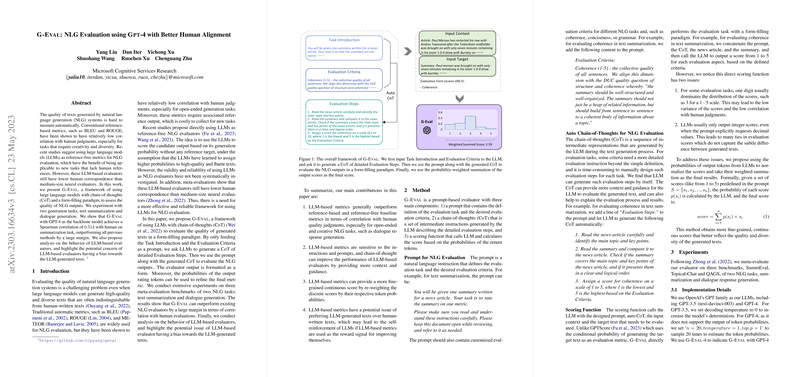

The paper presents G-Eval, a framework that uses large language models (LLMs) such as GPT-4 to evaluate the quality of natural language generation (NLG) outputs. It addresses a limitation of traditional reference-based metrics such as BLEU and ROUGE, which often correlate poorly with human judgment on tasks requiring creativity and diversity. G-Eval is instead reference-free: it combines chain-of-thought (CoT) prompting with a form-filling paradigm to improve alignment with human judgment.
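The CoT-plus-form-filling idea described above can be sketched as a prompt builder and a score parser. This is a minimal illustration, not the authors' prompt: `EvalSpec`, `build_prompt`, `parse_score`, the prompt wording, and the 1-5 scale are all assumptions made for the example, and the evaluation steps are passed in by hand here although in G-Eval they would come from the LLM itself.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class EvalSpec:
    """Hypothetical container for one evaluation criterion."""
    task_intro: str
    criterion: str
    steps: list[str] = field(default_factory=list)  # CoT evaluation steps
    scale: tuple[int, int] = (1, 5)                  # assumed rating scale


def build_prompt(spec: EvalSpec, source: str, output: str) -> str:
    """Assemble a reference-free prompt that ends in a form to fill in."""
    lo, hi = spec.scale
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(spec.steps, 1))
    return (
        f"{spec.task_intro}\n\n"
        f"Evaluation criterion:\n{spec.criterion} ({lo}-{hi})\n\n"
        f"Evaluation steps:\n{numbered}\n\n"
        f"Source text:\n{source}\n\n"
        f"Generated output:\n{output}\n\n"
        f"Evaluation form (scores ONLY):\n- Score:"
    )


def parse_score(reply: str, scale: tuple[int, int] = (1, 5)) -> int | None:
    """Return the first integer in the reply that lies on the scale, else None."""
    lo, hi = scale
    for match in re.finditer(r"\d+", reply):
        value = int(match.group())
        if lo <= value <= hi:
            return value
    return None
```

Note that no reference summary appears anywhere in the prompt: the evaluator sees only the source text and the generated output. The string returned by `build_prompt` would be sent to whatever LLM client is in use, and the model's reply passed to `parse_score`.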

Key Contributions

The authors of the paper make several important contributions:

- Introduction of G-Eval Framework: G-Eval uses LLMs like GPT-4 to evaluate NLG outputs by following detailed evaluation steps that the model generates from a prompt describing the task and the evaluation criteria. The resulting scores correlate more strongly with human judgment than those of previous metrics.

- Empirical Results: On text summarization and dialogue generation, G-Eval with GPT-4 achieves higher Spearman correlation with human judgments than existing methods. On summarization, evaluated on the SummEval benchmark, the paper reports a Spearman correlation of 0.514.

- Potential Bias in LLM-Based Evaluators: The research highlights a critical concern that LLM evaluators may be biased toward LLM-generated texts. This finding underscores the need to mitigate such bias so that human-written and LLM-generated texts are assessed on equal terms.
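The Spearman correlation used to compare evaluators with human judgment is the Pearson correlation of rank-transformed scores. The sketch below computes it in plain Python to make the definition concrete; the function names are illustrative, and in practice a library routine such as `scipy.stats.spearmanr` would normally be used instead.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the run of equal values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))


# Made-up scores for five outputs: human ratings vs. an automatic evaluator.
human = [1, 2, 3, 4, 5]
metric = [2.1, 2.0, 3.5, 4.0, 4.8]
rho = spearman(human, metric)
```

Because only ranks matter, an evaluator is rewarded for ordering outputs the way humans do, regardless of the absolute scale of its scores.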

Implications of Research

Practical Implications: G-Eval provides a way to evaluate NLG models without human-written reference outputs, which can significantly lower the cost and effort of NLG evaluation. Frameworks like G-Eval could therefore become central to developing more capable and human-aligned LLMs.

Theoretical Implications: The CoT paradigm gives LLMs a structured procedure for assessing and scoring NLG outputs. G-Eval’s approach contributes to the theoretical understanding of how LLMs can be made to perform evaluative tasks in ways more closely aligned with human reasoning patterns.

Future Directions

The paper suggests several avenues for future exploration:

- Investigation of Bias Mitigation: Further research may focus on methods that reduce bias in LLM evaluations, so that human-written and LLM-generated content are judged by the same standard.

- Broader Applicability: While the paper primarily focuses on summarization and dialogue generation, future work might explore the efficacy of G-Eval across additional NLG domains and other LLM architectures.

- Enhanced Evaluation Methods: Researchers could investigate other forms of model evaluation that incorporate more complex human-like reasoning to enhance the evaluative accuracy of AI systems.
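As a starting point for the bias investigation listed above, one simple probe is to compare evaluator scores for LLM-written and human-written texts that received the same human rating. The sketch below is an assumption-laden illustration rather than the paper's analysis: the `(origin, human_rating, evaluator_score)` record layout and the function name `matched_score_gaps` are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def matched_score_gaps(records):
    """For each human rating, return the mean evaluator score of LLM-written
    texts minus that of human-written texts.

    Each record is an (origin, human_rating, evaluator_score) tuple, where
    origin is "human" or "llm". This layout is a hypothetical convention.
    """
    buckets = defaultdict(lambda: {"human": [], "llm": []})
    for origin, human_rating, evaluator_score in records:
        buckets[human_rating][origin].append(evaluator_score)

    gaps = {}
    for rating, groups in sorted(buckets.items()):
        # Only compare ratings where both origins are represented.
        if groups["human"] and groups["llm"]:
            gaps[rating] = mean(groups["llm"]) - mean(groups["human"])
    return gaps


# Made-up records for illustration only.
records = [
    ("human", 4, 3.0),
    ("llm", 4, 4.0),
    ("human", 5, 4.0),
    ("llm", 5, 4.5),
    ("llm", 3, 3.0),  # no human-written text at this rating, so it is skipped
]
gaps = matched_score_gaps(records)
```

Consistently positive gaps at matched human ratings would be suggestive of a preference for LLM-generated text, though not conclusive: human ratings are noisy, and a real analysis would need enough samples per rating to support a significance test.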

Conclusion

The G-Eval framework represents a significant step forward in the automated evaluation of NLG tasks, aligning more closely with human judgment and achieving higher correlation with human ratings than previous evaluation methods. However, addressing potential biases and expanding the framework’s applicability remain essential for future developments in AI-driven evaluation systems.