- The paper introduces DyGFormer, a Transformer-based architecture that learns from nodes' historical first-hop interactions using a neighbor co-occurrence encoding and a patching technique.

- It proposes DyGLib, a unified library that standardizes model training and evaluation for improved reproducibility across diverse dynamic graph datasets.

- Experiments show state-of-the-art performance on most datasets for dynamic link prediction and dynamic node classification.

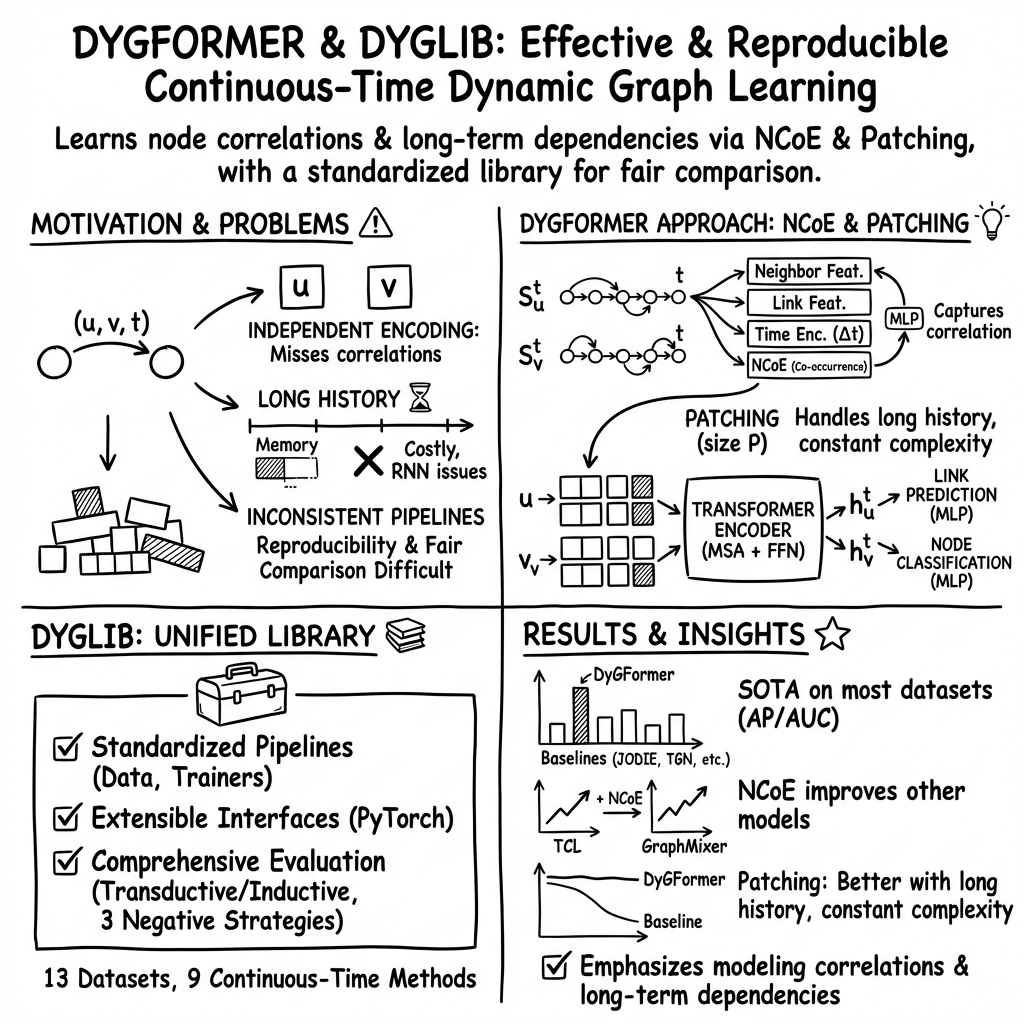

The paper presents a new approach to dynamic graph learning built on two components: DyGFormer, a Transformer-based architecture, and DyGLib, a unified library that standardizes how dynamic graph models are implemented and evaluated. DyGFormer targets limitations of existing methods, which typically learn from each node's history in isolation and struggle to exploit long histories. It learns from nodes' historical first-hop interactions and captures correlations between the source and destination nodes with simple yet effective mechanisms. A patching technique lets it learn efficiently from longer histories, while DyGLib provides a standardized platform for training and evaluation that promotes reproducibility and scalability in research.

Key Contributions

1. DyGFormer Architecture:

DyGFormer keeps its design simple by learning only from nodes' historical first-hop interactions, which it encodes using:

- A neighbor co-occurrence encoding scheme that, for each neighbor in the historical sequences of a source node and a destination node, counts how often that neighbor appears in each of the two sequences. Neighbors that appear frequently in both histories indicate a correlation between the pair and, potentially, a higher likelihood of future interaction.

- A patching technique that divides each historical sequence into patches of consecutive interactions and feeds them to the Transformer as tokens. This preserves local temporal proximity within each patch and shortens the input sequence, so the model can learn from long histories both effectively and efficiently.
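The two encodings above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' DyGLib code: the function names `neighbor_cooccurrence` and `patch` are hypothetical, the co-occurrence counts are returned raw (the paper passes them through a learned encoder before use), and zero-padding a sequence whose length is not a multiple of the patch size is an assumption of this sketch. The toy histories follow the spirit of the paper's example: node u interacted with a, b, a and node v with b, b, a, c.

```python
from collections import Counter
from collections.abc import Hashable, Sequence
from typing import List, Tuple


def neighbor_cooccurrence(
    hist_u: Sequence[Hashable], hist_v: Sequence[Hashable]
) -> Tuple[List[Tuple[int, int]], List[Tuple[int, int]]]:
    """For each neighbor in each history, count its occurrences in (hist_u, hist_v).

    Hypothetical helper: returns raw counts only, without the learned encoder.
    """
    count_u, count_v = Counter(hist_u), Counter(hist_v)
    # Counter returns 0 for neighbors that never appear in a history.
    c_u = [(count_u[n], count_v[n]) for n in hist_u]
    c_v = [(count_u[n], count_v[n]) for n in hist_v]
    return c_u, c_v


def patch(seq: List[List[float]], patch_size: int) -> List[List[float]]:
    """Group an L x d feature sequence into ceil(L / P) patches of P * d values.

    Zero-padding the tail to a multiple of patch_size is an assumption of this sketch.
    """
    if patch_size <= 0:
        raise ValueError("patch_size must be positive")
    if not seq:
        return []
    d = len(seq[0])
    pad = (-len(seq)) % patch_size
    padded = list(seq) + [[0.0] * d for _ in range(pad)]
    # Each patch concatenates P consecutive feature rows, keeping their time order.
    return [
        [x for row in padded[i : i + patch_size] for x in row]
        for i in range(0, len(padded), patch_size)
    ]


# Usage: u interacted with a, b, a; v interacted with b, b, a, c.
c_u, c_v = neighbor_cooccurrence(["a", "b", "a"], ["b", "b", "a", "c"])
print(c_u)  # [(2, 1), (1, 2), (2, 1)]
print(c_v)  # [(1, 2), (1, 2), (2, 1), (0, 1)]
print(len(patch([[float(i)] * 2 for i in range(5)], 2)))  # 3
```

Neighbor a appears twice in u's history and once in v's, hence (2, 1); neighbor c never appears in u's history, hence (0, 1). On the patching side, with patch size P the Transformer attends over roughly L/P tokens instead of L, so the quadratic cost of self-attention drops from O(L^2) to O((L/P)^2).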

2. DyGLib: A Unified Library:

DyGLib provides a unified environment for continuous-time dynamic graph learning, with standardized training pipelines, extensible coding interfaces, and comprehensive evaluation protocols. By implementing a range of continuous-time methods and benchmark datasets under one pipeline, DyGLib aims to address the reproducibility and scalability problems that arise when each method ships with its own, inconsistent training pipeline.
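A standardized pipeline starts with every method seeing exactly the same data split. The sketch below shows a chronological split of the kind commonly used for continuous-time dynamic graphs, where training events never come after validation or test events. The `Interaction` type, the `chronological_split` name, and the 70/15/15 default ratios are assumptions for illustration, not DyGLib's actual interface.

```python
from typing import List, NamedTuple, Tuple


class Interaction(NamedTuple):
    """One timestamped edge event (hypothetical container, not DyGLib's)."""
    src: int
    dst: int
    t: float


def chronological_split(
    events: List[Interaction],
    val_ratio: float = 0.15,  # assumed default
    test_ratio: float = 0.15,  # assumed default
) -> Tuple[List[Interaction], List[Interaction], List[Interaction]]:
    """Split events by time into train / validation / test lists.

    Events are sorted by timestamp, so no event in a later split is earlier
    than any event in an earlier split. Events sharing a timestamp at a cut
    point may land on either side; this sketch does not handle ties specially.
    """
    if val_ratio < 0 or test_ratio < 0 or val_ratio + test_ratio >= 1:
        raise ValueError("ratios must be non-negative and sum to less than 1")
    ordered = sorted(events, key=lambda e: e.t)
    n = len(ordered)
    n_test = round(n * test_ratio)
    n_val = round(n * val_ratio)
    train_end = n - n_val - n_test
    val_end = n - n_test
    return ordered[:train_end], ordered[train_end:val_end], ordered[val_end:]


# Usage: 20 events given in reverse time order.
events = [Interaction(i % 5, (i + 1) % 5, float(19 - i)) for i in range(20)]
train, val, test = chronological_split(events)
print(len(train), len(val), len(test))  # 14 3 3
```

Sizes are derived from rounded validation and test counts rather than from `int(n * 0.7)`, which avoids floating-point truncation silently moving an event between splits.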

Experimental Findings

Thirteen datasets were used to evaluate dynamic link prediction and dynamic node classification with DyGLib. DyGFormer outperformed existing methods on most of them, achieving state-of-the-art performance that the authors attribute to its ability to capture correlations between nodes and long-term temporal dependencies. The paper also finds that some baseline results are inconsistent with those reported in earlier work, and that several baselines improve significantly when run under DyGLib's standardized pipelines, which underscores the importance of rigorous and consistent evaluation.

Speculative Implications

The practical implications are twofold. First, DyGFormer's simple architecture shows how much can be achieved by modeling first-hop interaction sequences alone. Second, DyGLib's open-source, standardized implementations address reproducibility problems and may encourage further research by making it easy to compare new methods against strong, consistently evaluated baselines.

From a theoretical standpoint, DyGFormer's approach of capturing node correlations and long-term temporal dependencies with simple mechanisms may inform the design of more general and efficient dynamic graph learning frameworks. This is timely, as increasingly complex dynamic graph data arises in fields such as social network analysis, recommendation systems, and networked traffic analysis.

Future Directions

Looking ahead, DyGFormer and DyGLib prompt several avenues for research:

- Exploring the robustness of DyGFormer's neighbor co-occurrence encoding in scenarios involving high-order node interactions and diverse types of dynamic graphs.

- Developing enhanced patching techniques that further reduce computational costs without sacrificing the accuracy and depth of dynamic modeling.

- Expanding DyGLib to include a broader array of state-of-the-art methods and datasets, thereby enriching its utility as a central hub for dynamic graph learning research.

The methods presented in this work underscore the value of reproducible evaluation and architectural simplicity in dynamic graph learning. With continued exploration and refinement, DyGFormer and similar approaches may play a significant role in shaping graph-based models across application domains.