Exploring Automatic Questioning for Enhanced Visual Descriptions

The paper "ChatGPT Asks, BLIP-2 Answers: Automatic Questioning Towards Enriched Visual Descriptions" focuses on an often-overlooked aspect of AI research: asking questions. It shows that large language models (LLMs) such as ChatGPT can generate informative questions whose answers add up to richer image descriptions, offering insight into how automated questioning can extend what AI systems extract from visual content.

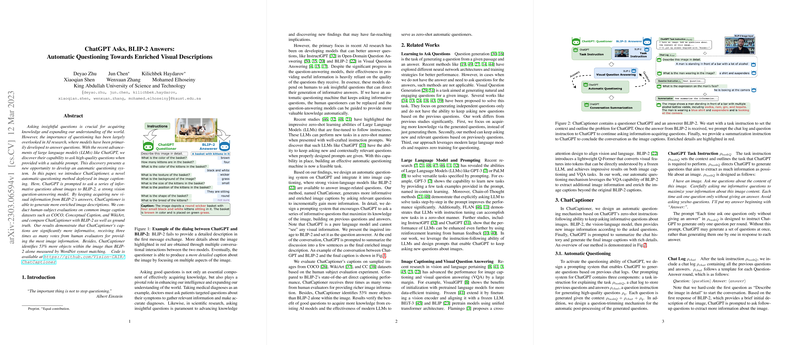

Introduction to ChatCaptioner

The authors introduce ChatCaptioner, a system in which ChatGPT automatically poses questions about an image to BLIP-2, a state-of-the-art vision-language model, in order to produce more detailed and informative captions. Over several rounds, ChatGPT asks questions that draw out visual details from BLIP-2's answers; once the rounds are complete, ChatGPT summarizes the whole conversation into the final caption. This dialogue-based approach addresses a key limitation of conventional image captioning, whose single-pass captions tend to omit much of an image's content.
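
The ask-answer-summarize loop can be sketched as follows. This is a minimal illustration, not the authors' code: the function `chat_caption`, its parameters, and the default of 10 rounds are hypothetical choices, and the three models are passed in as plain callables (the questioner in ChatGPT's role, the answerer in BLIP-2's role, with the image assumed to be bound inside it). Toy stubs replace the real models so the sketch runs without any API access.

```python
from typing import Callable, List, Tuple

# A dialogue is a list of (question, answer) pairs.
Dialogue = List[Tuple[str, str]]


def chat_caption(
    ask: Callable[[Dialogue], str],        # questioner (ChatGPT's role): next question given the history
    answer: Callable[[str], str],          # VQA model (BLIP-2's role); the image is assumed bound inside
    summarize: Callable[[Dialogue], str],  # questioner again: condenses the dialogue into one caption
    rounds: int = 10,                      # illustrative default; the number of rounds is a tunable choice
) -> Tuple[str, Dialogue]:
    """Ask `rounds` questions, collect the answers, then summarize them into a caption."""
    dialogue: Dialogue = []
    for _ in range(rounds):
        question = ask(dialogue)   # the questioner sees everything asked and answered so far
        reply = answer(question)   # the VQA model answers about the image
        dialogue.append((question, reply))
    return summarize(dialogue), dialogue


# Toy stand-ins so the sketch runs without any model (all hypothetical).
QUESTIONS = ["What is the main subject?", "What color is it?", "Where is it?"]
CANNED = {"What is the main subject?": "a dog", "What color is it?": "brown", "Where is it?": "on a beach"}


def toy_ask(dialogue: Dialogue) -> str:
    return QUESTIONS[len(dialogue) % len(QUESTIONS)]


def toy_answer(question: str) -> str:
    return CANNED.get(question, "unknown")


def toy_summarize(dialogue: Dialogue) -> str:
    return ", ".join(reply for _, reply in dialogue)


caption, log = chat_caption(toy_ask, toy_answer, toy_summarize, rounds=3)
print(caption)  # a dog, brown, on a beach
```

Passing the models as callables keeps the control flow separate from any particular API, so a real LLM client and a real VQA model could be swapped in without changing the loop.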

Experimental Evaluation

ChatCaptioner was evaluated through human subject studies on images from COCO, WikiArt, and Conceptual Captions. Evaluators judged its captions significantly more informative than those of BLIP-2 alone, and even more informative than the datasets' ground-truth captions. In addition, ChatCaptioner identified 53% more objects in the images than BLIP-2 alone, as measured by WordNet synset matching.
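
The 53% figure is a relative increase in matched objects. A minimal sketch of how such a count could work, assuming each image has a reference set of annotated objects and that caption words are mapped to synsets before matching, so that synonyms such as "couch" and "sofa" count as the same object. The lookup table, concept IDs, and captions below are hypothetical stand-ins for WordNet and for real data; the paper's exact matching and aggregation procedure may differ.

```python
import re
from typing import Dict, Set

# Toy lookup from surface words to concept IDs, standing in for WordNet synsets.
# A real implementation would query WordNet; these IDs are illustrative only.
TOY_SYNSETS: Dict[str, str] = {
    "cat": "CAT",
    "sofa": "SOFA", "couch": "SOFA",  # synonyms collapse to one concept
    "lamp": "LAMP",
    "rug": "RUG", "carpet": "RUG",
}


def matched_objects(caption: str, reference: Set[str]) -> Set[str]:
    """Return the reference objects whose concept is mentioned in the caption."""
    words = re.findall(r"[a-z]+", caption.lower())
    mentioned = {TOY_SYNSETS[w] for w in words if w in TOY_SYNSETS}
    return mentioned & reference


# Hypothetical per-image object annotations and captions (not data from the paper).
reference = {"CAT", "SOFA", "LAMP", "RUG"}
baseline_caption = "a cat sitting on a couch"
dialogue_caption = "a gray cat resting on a sofa beside a lamp, with a carpet below"

n_base = len(matched_objects(baseline_caption, reference))
n_dialogue = len(matched_objects(dialogue_caption, reference))
relative_increase = (n_dialogue - n_base) / n_base
print(n_base, n_dialogue, f"{relative_increase:.0%}")  # 2 4 100%
```

Matching at the concept level rather than on raw strings is what keeps a caption from being penalized for saying "couch" when the annotation says "sofa".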

Implications and Future Directions

The implications of this research may extend well beyond captioning. Automating question generation shifts a significant cognitive load from humans to machines: instead of a person deciding what to ask, the model decides. This could benefit applications ranging from diagnostic AI in medicine to knowledge discovery in scientific fields. The authors focus on image captioning, yet their findings might also inform conversational AI and education technology, supporting systems that actively seek out the information they need.

Critical Analysis

ChatCaptioner is not without limitations. Its accuracy depends on BLIP-2's ability as a visual question answering (VQA) model: when BLIP-2 answers a question incorrectly, the error carries over into the final caption. Substituting a more capable VQA model could improve ChatCaptioner's performance. In addition, ChatGPT can generate socially biased or offensive content, which calls for ongoing vigilance.

Conclusion and Speculation

By demonstrating that automated questioning can draw out more visual detail than a vision model reports on its own, this research points toward AI systems that engage with visual content more thoroughly. Future work might focus on making ChatCaptioner more robust and applying it to broader fields, potentially setting a new standard for AI-mediated interaction with visual content.

In summary, while questioning has traditionally taken a back seat to answering in AI research, this paper shows that it can substantially enrich what AI systems extract from images, inviting further exploration and development in the field.