Exploring Visual Fact Checker: A Training-Free Pipeline for Detailed 2D and 3D Captioning

Introduction to VisualFactChecker (VFC)

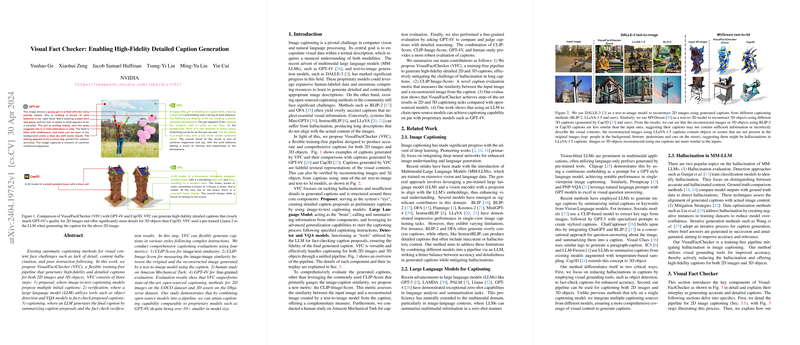

In the landscape of image and 3D object captioning, traditional methods often grapple with challenges like hallucination (where the model creates fictitious details) and overly vague outputs. Addressing these issues, the VisualFactChecker (VFC) emerges as a versatile, training-free solution designed to enhance the accuracy and detail of captions for both 2D images and 3D objects.

VFC operates through a three-stage process:

- Proposal: Utilizes image-to-text models to generate several initial caption options.

- Verification: Leverages LLMs alongside object detection and visual question answering (VQA) models to verify the accuracy of these captions.

- Captioning: The LLM synthesizes the verified information to produce the final detailed and accurate caption.

By harnessing a combination of open-source models linked by an LLM, VFC demonstrates capabilities comparable to proprietary systems like GPT-4V, yet with significantly smaller model size.

Breaking Down the Pipeline

The innovation of VFC lies in its layered approach combining multiple technologies:

- Proposer: Acts as the first filter, generating descriptive captions that might still hold inaccuracies.

- Verifier: Utilizes tools to check these descriptions against the actual image content, targeting detailed accuracy by confirming or denying elements present in the captions.

- Caption Composer: Integrates all verified information to produce the final output that adheres not only to factual correctness but also to the specified writing style or instructional focus.

Superior Performance with Insights on Metrics

VFC's effectiveness isn't just theoretical; it's quantitatively backed by robust metrics:

- CLIP-Score and CLIP-Image-Score: These metrics confirm that VFC outperforms existing open-source captioning methods. Notably, the novel CLIP-Image-Score evaluates how well a caption describes an image by comparing the original image to one recreated from the caption itself, highlighting discrepancies and confirming accuracy.

- Human Studies and GPT-4V Evaluations: Beyond automated metrics, human assessments via Amazon Mechanical Turk and detailed evaluations using GPT-4V further emphasize the reliability and detail orientation of VFC's captioning capability.

Future Implications and Developments

The methodology setup by VFC points to a promising direction for both practical applications and theoretical AI research:

- Enhanced Accessibility: Accurate descriptions can significantly improve accessibility for those with visual impairments, providing detailed comprehension of visual content.

- Richer Data Interactions: In scenarios where detailed object descriptions are crucial, like virtual reality (VR) or online shopping, VFC could provide a more engaging and informative user experience.

- Foundation for Future Research: As a modular system, VFC offers a framework for integrating newer models or enhancing specific components like the proposer or verifier, continuously evolving with AI advancements.

Final Thoughts

The VisualFactChecker stands as a noteworthy development in the sphere of AI-driven captioning, displaying not only high fidelity in its outputs but also versatility across different formats like 2D and 3D. It bridges the gap between detailed visual understanding and natural language processing, paving the way for more immersive and accessible digital experiences. Its open-source nature combined with the effectiveness comparable to larger proprietary models presents a valuable tool for researchers and developers looking to push the boundaries of multimodal AI interactions.