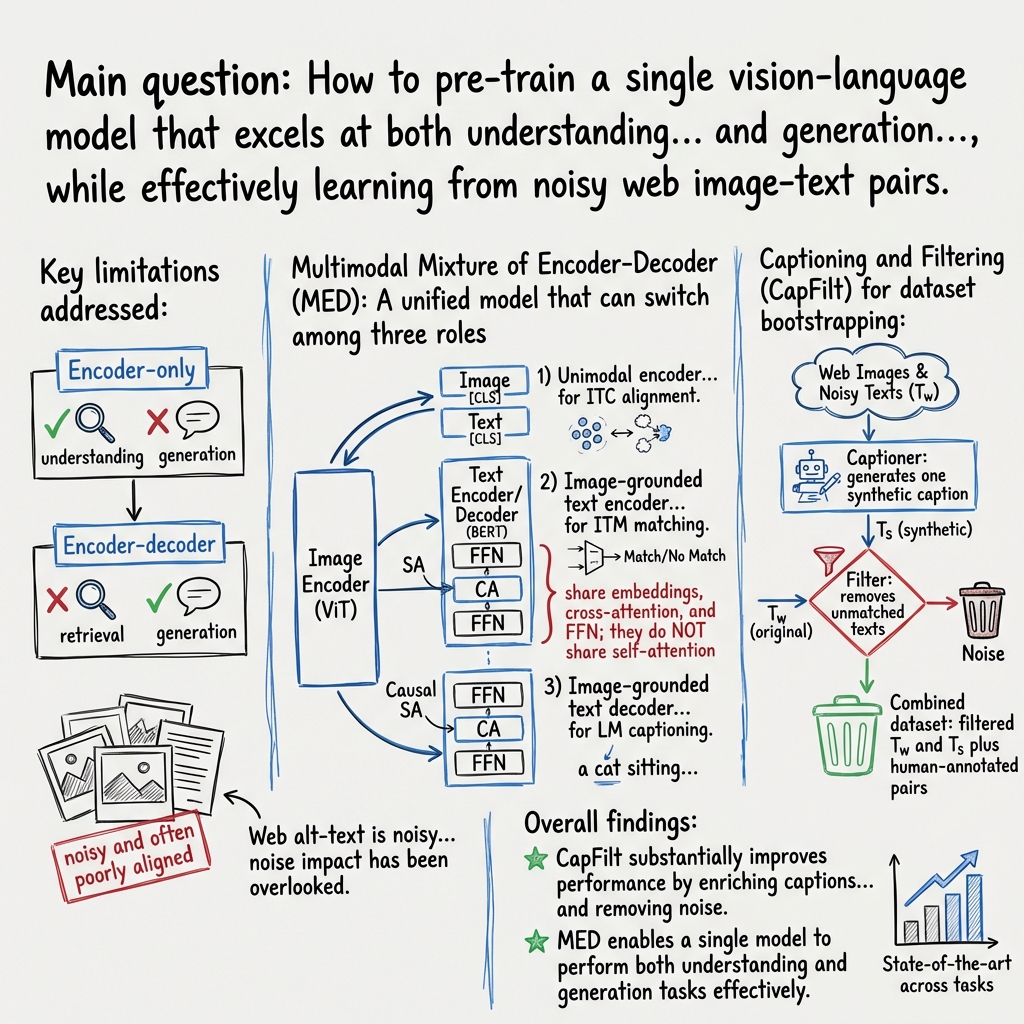

- The paper introduces a unified multimodal encoder-decoder architecture that advances both vision-language understanding and generation tasks.

- It employs the CapFilt method to generate synthetic captions and filter noisy data, thereby enhancing the quality of pre-training datasets.

- Experimental results on image-text retrieval, captioning, and reasoning benchmarks demonstrate state-of-the-art performance, including robust zero-shot video-language capabilities.

A Professional Insight into the BLIP Framework

The paper "BLIP: Bootstrapping Language-Image Pre-training for Unified Vision-Language Understanding and Generation," authored by Junnan Li, Dongxu Li, Caiming Xiong, and Steven Hoi from Salesforce Research, offers a comprehensive vision-language pre-training (VLP) framework aimed at bridging the gap between understanding-based and generation-based vision-language tasks. Below is a detailed summary and analysis of the key contributions and implications of the research.

Model Architecture and Contributions

The BLIP framework introduces a multimodal mixture of encoder-decoder (MED) architecture, a single model that handles both vision-language understanding and generation tasks. It does so by operating in one of three functionalities:

- Unimodal Encoder: This module encodes image and text separately. It is trained with an image-text contrastive (ITC) objective, which aligns the visual and linguistic representations.

- Image-Grounded Text Encoder: By inserting cross-attention layers into the text encoder, this mode models fine-grained vision-language interactions. It is trained with an image-text matching (ITM) objective, which distinguishes positive from negative image-text pairs.

- Image-Grounded Text Decoder: It replaces bi-directional self-attention layers with causal self-attention layers to enable effective language modeling (LM) and generation of textual descriptions from images.
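
The difference between bi-directional and causal self-attention comes down to which positions each token is allowed to attend to. The following is a minimal sketch of the two attention masks in plain Python; the function names are illustrative assumptions and are not taken from the BLIP codebase.

```python
def bidirectional_mask(n):
    """Encoder-style mask: every token may attend to every token."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style mask: token i may attend only to tokens 0..i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


# Print the causal mask for a 4-token sequence, one row per query token.
for row in causal_mask(4):
    print(row)
```

With a causal mask, each token is predicted from the tokens before it only, which is what allows the decoder to generate text left to right.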

Dataset Bootstrapping: Captioning and Filtering (CapFilt)

A standout feature of this work is the CapFilt method, which addresses the high noise levels in web image-text pairs. CapFilt employs two modules:

- Captioner: Generates synthetic captions for web images, significantly enriching the training dataset.

- Filter: Removes noisy captions from both the original web texts and the synthetic captions, enhancing the quality of the data used for pre-training.
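
The interplay of the two modules can be sketched as a small data pipeline. This is an illustration under stated assumptions, not the authors' implementation: `capfilt`, `toy_captioner`, and `toy_filter` are hypothetical names, images are represented by plain strings, and the toy filter is a substring check standing in for a learned image-text matching model.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]  # (image identifier, caption text)


def capfilt(
    web_pairs: Iterable[Pair],
    captioner: Callable[[str], str],
    is_match: Callable[[str, str], bool],
) -> List[Pair]:
    """Caption every web image, then keep only the texts the filter accepts."""
    kept: List[Pair] = []
    for image, web_text in web_pairs:
        synthetic_text = captioner(image)
        # Both the original web text and the synthetic caption are filtered.
        for text in (web_text, synthetic_text):
            if is_match(image, text):
                kept.append((image, text))
    return kept


def toy_captioner(image: str) -> str:
    """Hypothetical stand-in for a trained captioning model."""
    return f"a photo of a {image}"


def toy_filter(image: str, text: str) -> bool:
    """Hypothetical stand-in for a trained image-text matching model."""
    return image in text


web_pairs = [("dog", "click here for great deals"), ("cat", "my cat on the sofa")]
bootstrapped = capfilt(web_pairs, toy_captioner, toy_filter)
print(bootstrapped)
```

In this toy run the noisy web text for the dog image is discarded and replaced by its synthetic caption, while the cat image keeps both its web text and its synthetic caption.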

Experimental Analysis and Results

Extensive experiments validate that BLIP achieves state-of-the-art performance across a variety of benchmarks:

- Image-Text Retrieval: On COCO and Flickr30K datasets, BLIP surpasses previous best models, offering a notable improvement in recall metrics.

- Image Captioning: On COCO and NoCaps datasets, BLIP achieves higher CIDEr and SPICE scores than prior methods, which the summary attributes to an architecture that supports text generation directly.

- Visual Question Answering (VQA) and Natural Language Visual Reasoning (NLVR2): BLIP achieves competitive scores, showcasing its utility in reasoning tasks.

- Visual Dialog (VisDial): BLIP outperforms existing models in ranking dialog responses, demonstrating its efficacy in understanding conversation context.
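
For readers unfamiliar with the recall metrics used in retrieval benchmarks, the sketch below shows how Recall@K is commonly computed: the fraction of queries for which at least one correct item appears among the top K ranked results. The function name and the toy data are assumptions for illustration and do not reproduce any number from the paper.

```python
def recall_at_k(rankings, relevant, k):
    """Fraction of queries with at least one relevant item in the top k.

    rankings: one list of candidate ids per query, best match first.
    relevant: one set of correct ids per query, in the same order.
    """
    hits = 0
    for ranking, correct in zip(rankings, relevant):
        if any(item in correct for item in ranking[:k]):
            hits += 1
    return hits / len(rankings)


# Two toy queries: the first ranks its correct item first, the second ranks it last.
rankings = [["a", "b", "c"], ["c", "a", "b"]]
relevant = [{"a"}, {"b"}]
print(recall_at_k(rankings, relevant, 1))  # 0.5
print(recall_at_k(rankings, relevant, 3))  # 1.0
```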

Notably, BLIP generalizes zero-shot to video-language tasks such as text-to-video retrieval and video question answering. Its performance on these tasks, even without task-specific temporal modeling, indicates that the image-text representations it learns transfer well beyond still images.
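
A simple way to apply an image-text model to video without temporal modeling is to sample a few frames uniformly and treat their features as one long sequence. The sketch below shows only the sampling step; the midpoint indexing rule and the function name are assumptions for illustration, not details taken from this summary.

```python
def uniform_frame_indices(total_frames, num_samples):
    """Pick num_samples frame indices spread evenly across a video.

    The video is split into num_samples equal segments and the frame at
    the midpoint of each segment is chosen. If the video has no more
    frames than requested, every frame is returned.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= total_frames:
        return list(range(total_frames))
    return [int((i + 0.5) * total_frames / num_samples) for i in range(num_samples)]


print(uniform_frame_indices(16, 4))  # [2, 6, 10, 14]
```

Because the sampled frames are processed as independent images, their order carries no explicit temporal signal, which is why adding temporal modeling is a natural follow-up.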

Implications and Future Directions

On the theoretical side, BLIP's results suggest that more diverse, higher-quality pre-training datasets can significantly improve performance on multimodal tasks. Practically, the framework copes with noisy datasets more effectively, which matters given how heavily VLP models rely on large-scale web data.

Future research avenues could explore:

- Multi-round Bootstrapping: Repeating the captioning and filtering cycle over several rounds, and generating multiple synthetic captions per image, to further refine and enlarge the pre-training corpus.

- Ensemble Models: Training multiple captioners and filters to create a more robust dataset bootstrapping pipeline.

- Task-Specific Enhancements: Integrating temporal modeling for video-language tasks and other task-specific nuances to further improve downstream performance.

Overall, BLIP represents a significant advancement in unified VLP frameworks: a single model performs strongly across a broad suite of vision-language tasks, supported by its dataset bootstrapping method and its flexible architecture.