Dictionary-based Phrase-level Prompting of LLMs for Machine Translation

The paper "Dictionary-based Phrase-level Prompting of LLMs for Machine Translation" proposes a new way to improve machine translation (MT) with large language models (LLMs). It targets the translation of rare words, which are common in low-resource and domain-specific settings. The authors' method, DiPMT, uses bilingual dictionaries to add phrase-level control hints to the prompts given to the LLM.

Summary of Approach

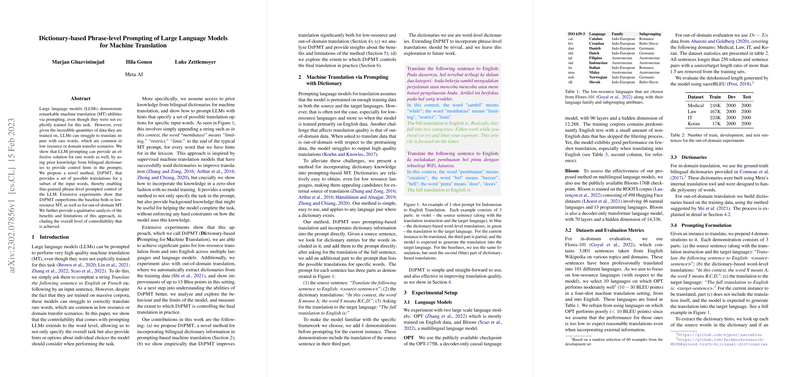

The researchers build on the established ability of LLMs to translate through prompting, even though they were never explicitly trained for translation. However, these models struggle with rare words, which are common in low-resource and domain-transfer settings. DiPMT addresses this by incorporating dictionary knowledge directly into the prompts. The idea is inspired by earlier supervised MT models that successfully used dictionary information, but here it is applied purely through prompting, without any additional training. By offering several possible translations for selected input words, the method gives finer control over the output at the word level.
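The summary does not say how the words that receive hints are chosen. As an illustration only, the sketch below assumes a simple rule: a word gets a hint if it is rare according to a frequency table and appears in a bilingual dictionary, and every dictionary translation is kept so the model can consider several candidates. The function name `select_hint_words`, the `max_freq` threshold, and the toy data are hypothetical, not the paper's procedure.

```python
from collections import Counter


def select_hint_words(
    source_tokens: list[str],
    frequencies: Counter,
    dictionary: dict[str, list[str]],
    max_freq: int = 5,
) -> dict[str, list[str]]:
    """Return {word: candidate translations} for rare source words.

    Assumption: a word is "rare" if it occurs at most `max_freq` times in
    `frequencies` (a hypothetical source-language frequency table). Only
    words present in the bilingual `dictionary` receive hints, and all of
    their listed translations are kept as candidates.
    """
    hints: dict[str, list[str]] = {}
    for token in source_tokens:
        word = token.lower()
        if word in hints:
            continue  # each word is hinted at most once
        # Counter returns 0 for unseen words, so unseen words count as rare.
        if frequencies[word] <= max_freq and word in dictionary:
            hints[word] = dictionary[word]
    return hints


# Toy usage with made-up counts and dictionary entries.
tokens = ["le", "médecin", "prescrit", "un", "anticoagulant"]
freqs = Counter({"le": 5000, "un": 4000, "médecin": 120, "prescrit": 4, "anticoagulant": 1})
bilingual = {
    "médecin": ["doctor", "physician"],
    "prescrit": ["prescribes", "prescribed"],
    "anticoagulant": ["anticoagulant", "blood thinner"],
}
print(select_hint_words(tokens, freqs, bilingual))
```

Here the frequent word "médecin" is skipped even though it is in the dictionary, while the two rare words receive all of their candidate translations.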

Concretely, dictionary-based hints are appended to the end of the MT prompt and suggest possible meanings for difficult words in the input. The hints supply knowledge the LLM may lack in low-resource settings, but they do not impose hard constraints on the translation it produces.
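A minimal sketch of how such a prompt might be assembled, assuming the hints are placed after the source sentence and just before the slot where the model writes its translation. The instruction line and the hint sentence ("In this context, the word ... means ...") are an illustrative template, not necessarily the exact wording used in the paper, and `build_dipmt_prompt` is a hypothetical helper.

```python
def build_dipmt_prompt(
    source_sentence: str,
    hints: dict[str, list[str]],
    src_lang: str = "French",
    tgt_lang: str = "English",
) -> str:
    """Build one MT prompt with dictionary hints appended after the source.

    Assumption: the template wording is illustrative only. Each hinted word
    gets one sentence listing all of its candidate translations, so the
    model sees soft suggestions rather than a hard constraint.
    """
    lines = [
        f"Translate the following sentence from {src_lang} to {tgt_lang}.",
        f"{src_lang}: {source_sentence}",
    ]
    for word, candidates in hints.items():
        options = " or ".join(f'"{c}"' for c in candidates)
        lines.append(f'In this context, the word "{word}" means {options}.')
    # The prompt ends with the target-language cue; the LLM continues from here.
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)


print(build_dipmt_prompt(
    "le médecin prescrit un anticoagulant",
    {"anticoagulant": ["anticoagulant", "blood thinner"]},
))
```

Listing every candidate joined by "or" mirrors the idea that the model should choose among several plausible meanings instead of copying a single forced term.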

Experimental Results

The authors experiment with several configurations involving the OPT and Bloom LLMs and languages selected from the Flores-101 dataset. DiPMT outperforms the baselines in both low-resource and out-of-domain MT settings, with BLEU gains of up to 13 points in particular domains. Further analyses show that DiPMT gives a degree of control over the translation output, which is important for improving MT quality.
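One simple way to probe the controllability mentioned above is to count how often the model actually uses a suggested translation. The sketch below is a hypothetical metric, not the analysis reported in the paper: for each hinted word it checks, by naive case-insensitive substring matching, whether any of its candidate translations appears in the model's output.

```python
def hint_usage_rate(
    outputs: list[str],
    hints_per_sentence: list[dict[str, list[str]]],
) -> float:
    """Fraction of hinted words whose output contains a suggested translation.

    Assumption: substring matching is a deliberate simplification; it ignores
    inflection and can over-count (e.g. "prescribe" matches "prescribes").
    """
    if len(outputs) != len(hints_per_sentence):
        raise ValueError("need one hint dictionary per output sentence")
    used = total = 0
    for output, hints in zip(outputs, hints_per_sentence):
        lowered = output.lower()
        for candidates in hints.values():
            total += 1
            if any(c.lower() in lowered for c in candidates):
                used += 1
    return used / total if total else 0.0


# Toy usage with made-up model outputs.
outputs = ["The doctor prescribes a blood thinner.", "The cat sleeps on the rug."]
hints = [
    {"prescrit": ["prescribes", "prescribed"], "anticoagulant": ["anticoagulant", "blood thinner"]},
    {"tapis": ["carpet"]},
]
print(hint_usage_rate(outputs, hints))
```

In this toy case two of the three hinted words are reflected in the outputs ("tapis" was rendered as "rug" instead of the suggested "carpet"), so the rate is 2/3.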

Implications and Future Directions

From a practical perspective, DiPMT could improve translation quality where comprehensive training data is scarce. Because bilingual dictionaries are relatively easy to obtain, adding them to the prompt is an inexpensive way to improve the translation of rare and specialized terminology. This matters most in settings that depend on specialized vocabulary, such as medical or technical domains.

Conceptually, this work shows that prompt engineering can do more than tailor LLM outputs: it can also enrich them with structured external knowledge. The same dictionary-based strategy could be extended to other tasks where domain-specific knowledge is crucial.

Looking ahead, future work could extend DiPMT from single-word hints to hints for multi-word phrases. Better methods for selecting dictionary entries and refined prompt structures could further improve translation accuracy and control, especially as more capable bilingual and multilingual models are developed.

In conclusion, DiPMT is a notable step in using prompting to adapt LLMs for machine translation, especially for low-resource language pairs and specialized domains.