Self-Distillation for Model Stacking Unlocks Cross-Lingual NLU in 200+ Languages

This paper presents a method for improving the cross-lingual abilities of large language models (LLMs) by integrating machine translation (MT) encoders into LLM backbones through self-distillation. The resulting hybrid, termed MT-LLM, combines the multilingual representations of the MT encoder with the world knowledge of the LLM to perform natural language understanding (NLU) tasks across a diverse set of languages, including many low-resource ones.

Introduction

LLMs such as GPT-3 and Llama 3 perform impressively on a variety of NLU tasks, particularly in English, but their effectiveness drops sharply for languages that are typologically distant from English or poorly represented in their training data. State-of-the-art MT models such as NLLB and MADLAD-400, by contrast, provide strong multilingual representations but lack the extensive world knowledge embedded in LLMs. To bridge this gap, the authors integrate MT encoders directly into LLM backbones through self-distillation, thereby improving the cross-lingual transfer of LLMs.

Methodology

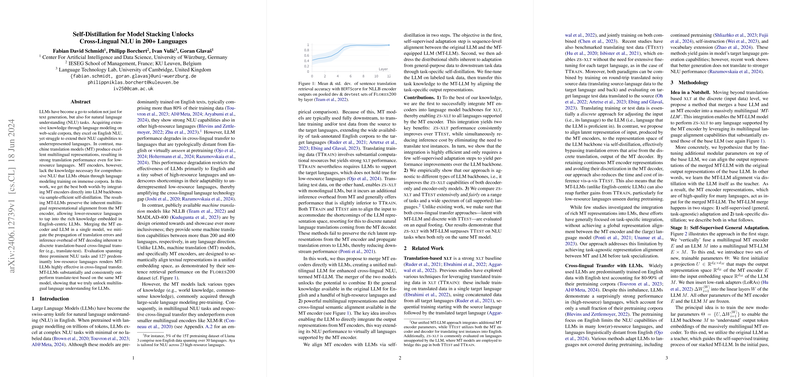

The integration is achieved in two primary stages:

- Self-Supervised General Adaptation: This first stage aligns the representation spaces of the MT encoder and the LLM. A sequence-level alignment objective optimizes newly added trainable parameters (a projection matrix and LoRA adapters) so that MT encoder outputs are mapped into the LLM's input embedding space, allowing the LLM to interpret the multilingual representations the MT encoder produces.

- Task-Specific Distillation: The LLM is first fine-tuned on labeled task data; this task-specific knowledge is then transferred to the MT-LLM hybrid by aligning the output representations of the task-tuned LLM and the MT-LLM.
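To make the sequence-level alignment objective concrete, here is a toy sketch in plain Python. The summary does not specify the exact loss, so mean pooling over the sequence, a mean-squared-error loss, and a bias-free projection are all assumptions. The LLM forward pass and the LoRA adapters are omitted: the "student" is simply the projected MT-encoder output, and the "teacher" is a stand-in for the LLM-side target representation of the same sentence (the LLM itself in stage 1, the task-tuned LLM in stage 2).

```python
# Toy sketch of a sequence-level alignment objective (assumptions: mean pooling,
# MSE loss, bias-free projection; the LLM forward pass is omitted).

def project(states, weight):
    """Map each MT-encoder state (length d_mt) into the LLM embedding space
    (length d_llm) with a projection matrix `weight` of shape d_mt x d_llm."""
    d_llm = len(weight[0])
    return [
        [sum(h[i] * weight[i][j] for i in range(len(h))) for j in range(d_llm)]
        for h in states
    ]


def mean_pool(states):
    """Average a sequence of vectors into a single sequence-level vector."""
    n = len(states)
    return [sum(col) / n for col in zip(*states)]


def sequence_alignment_loss(student_states, teacher_states):
    """MSE between the mean-pooled student and teacher sequences. Pooling keeps
    the loss defined even when the two sequences have different lengths,
    e.g. because the MT encoder and the LLM use different tokenizers."""
    s, t = mean_pool(student_states), mean_pool(teacher_states)
    return sum((a - b) ** 2 for a, b in zip(s, t)) / len(s)


def train_projection(mt_states, teacher_states, weight, lr=0.5, steps=100):
    """Gradient descent on the projection only (encoder and teacher frozen).
    Mean pooling is linear, so the pooled student is mean(mt_states) @ weight
    and dLoss/dW[i][j] = 2/d * (s[j] - t[j]) * m[i]. Updates `weight` in place."""
    m = mean_pool(mt_states)
    t = mean_pool(teacher_states)
    d = len(t)
    for _ in range(steps):
        s = [sum(m[i] * weight[i][j] for i in range(len(m))) for j in range(d)]
        for i in range(len(m)):
            for j in range(d):
                weight[i][j] -= lr * 2.0 / d * (s[j] - t[j]) * m[i]
    return weight


# Toy data: 3 MT-encoder states (d_mt = 2) vs. 2 teacher states (d_llm = 3).
mt_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
teacher = [[1.0, 2.0, 0.0], [1.0, 0.0, 2.0]]
W = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

before = sequence_alignment_loss(project(mt_states, W), teacher)
train_projection(mt_states, teacher, W)
after = sequence_alignment_loss(project(mt_states, W), teacher)
print(f"loss before: {before:.4f}, after: {after:.2e}")
```

Only `W` changes during training, mirroring the idea that the newly added parameters are what gets optimized while the pretrained components stay fixed. A real implementation would use an autodiff framework, batches of sentences, and the LLM's actual hidden states.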

Experimental Setup and Results

Tasks and Languages

The authors evaluated the proposed MT-LLM on three NLU tasks:

- Natural Language Inference (NLI): Evaluated on XNLI, AmericasNLI, and Kardeş-NLU datasets.

- Sentiment Classification: Evaluated on the NusaX dataset, covering 10 Indonesian local languages.

- Multiple-Choice Machine Reading Comprehension (MRC): Evaluated on the Belebele benchmark, which includes 122 languages.

Cross-Lingual Transfer Setups

The paper employed two standard cross-lingual transfer setups:

- Zero-Shot Cross-Lingual Transfer (ZS-XLT): The model is fine-tuned on English training data and evaluated directly on target language instances.

- Translate-Test: Target-language instances are machine-translated into English before being processed by the LLM.
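The two setups differ only in what the English-fine-tuned model receives at test time. The sketch below illustrates the evaluation mechanics with hypothetical placeholders: `toy_model` stands in for an LLM fine-tuned on English data and `toy_translate` for an MT system. The keyword "classifier" and word-lookup "translator" are illustrative only, not components from the paper.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def accuracy(predict: Callable[[str], str], data: List[Example]) -> float:
    """Fraction of instances whose predicted label matches the gold label."""
    return sum(predict(text) == gold for text, gold in data) / len(data)


def zs_xlt_eval(model: Callable[[str], str], target_test: List[Example]) -> float:
    """ZS-XLT: the English-fine-tuned model reads target-language text directly."""
    return accuracy(model, target_test)


def translate_test_eval(
    model: Callable[[str], str],
    translate_to_en: Callable[[str], str],
    target_test: List[Example],
) -> float:
    """Translate-test: each target-language input is machine-translated into
    English first, which adds one MT decoding pass per instance."""
    return accuracy(lambda text: model(translate_to_en(text)), target_test)


# Hypothetical placeholders: a keyword "classifier" that only knows English cues
# and a word-lookup "translator" from Indonesian to English.
def toy_model(text: str) -> str:
    return "pos" if "good" in text.split() else "neg"


LEXICON = {"bagus": "good", "buruk": "bad"}


def toy_translate(text: str) -> str:
    return " ".join(LEXICON.get(word, word) for word in text.split())


test_set = [("film ini bagus", "pos"), ("film ini buruk", "neg")]
print(zs_xlt_eval(toy_model, test_set))  # 0.5
print(translate_test_eval(toy_model, toy_translate, test_set))  # 1.0
```

The toy numbers only show the mechanics; they say nothing about how the real setups compare. The structural point is that translate-test inserts a full MT decoding step in front of every prediction.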

Numerical Results

The MT-LLM significantly outperformed both standard LLMs and the standalone NLLB encoder on cross-lingual NLU tasks, reaching average accuracies of 81.4% on XNLI and 82.1% on Kardeş-NLU. It also surpassed the translate-test setup while reducing inference overhead, since it consumes MT encoder representations directly and needs no MT decoding step.
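The reported figures are averages over each benchmark's languages. Assuming an unweighted (macro) mean over languages, a common convention for multilingual benchmarks that the summary does not confirm, the computation can be sketched as follows. The language codes and predictions are made-up toy values, not results from the paper; a micro average over all instances is included for contrast.

```python
from statistics import mean


def per_language_accuracy(predictions, gold):
    """predictions, gold: dicts mapping a language code to a list of labels."""
    return {
        lang: sum(p == g for p, g in zip(predictions[lang], gold[lang])) / len(gold[lang])
        for lang in gold
    }


def macro_average(acc_by_lang):
    """Unweighted mean over languages: each language counts equally,
    regardless of how many test instances it has."""
    return mean(acc_by_lang.values())


def micro_average(predictions, gold):
    """Accuracy pooled over all instances: larger test sets weigh more."""
    correct = sum(
        p == g for lang in gold for p, g in zip(predictions[lang], gold[lang])
    )
    total = sum(len(gold[lang]) for lang in gold)
    return correct / total


# Toy, made-up labels for two XNLI languages (Swahili, Urdu).
gold = {
    "sw": ["entailment", "neutral", "contradiction", "neutral"],
    "ur": ["neutral", "entailment"],
}
preds = {
    "sw": ["entailment", "neutral", "neutral", "neutral"],
    "ur": ["neutral", "contradiction"],
}

acc = per_language_accuracy(preds, gold)
print(acc)                         # {'sw': 0.75, 'ur': 0.5}
print(macro_average(acc))          # 0.625
print(micro_average(preds, gold))  # ≈ 0.667
```

The two averages diverge whenever languages have different test-set sizes, so it matters which one a reported number uses.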

Discussion

The paper highlights the computational efficiency of the proposed self-distillation method: only a few thousand training steps suffice to align the MT encoder with the LLM backbone. This matters because training models of this scale typically demands extensive computational resources.
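A related reason the adaptation is cheap is that, as the description of the first stage suggests, only the newly added projection and LoRA adapters are trained while the pretrained weights stay frozen. The arithmetic below counts those parameters for a hypothetical configuration: a Llama 3 8B-sized backbone (hidden size 4096, 32 layers, 1024-dimensional value projections under grouped-query attention), an assumed MT-encoder hidden size of 2048, and LoRA with an assumed rank of 16 on the query and value projections only. None of these settings are taken from the paper.

```python
def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """LoRA adds A (d_in x rank) and B (rank x d_out) beside a frozen d_in x d_out weight."""
    return rank * (d_in + d_out)


def trainable_params(d_mt, d_llm, n_layers, rank, adapted_shapes):
    """Projection matrix plus LoRA adapters on every layer (all else frozen)."""
    projection = d_mt * d_llm  # assumption: bias-free projection
    lora = n_layers * sum(lora_params(i, o, rank) for i, o in adapted_shapes)
    return projection + lora


# Hypothetical configuration (not from the paper).
D_MT = 2048      # assumed MT-encoder hidden size
D_LLM = 4096     # Llama 3 8B hidden size
N_LAYERS = 32    # Llama 3 8B decoder layers
RANK = 16        # assumed LoRA rank
# Assumed: LoRA on query (4096 -> 4096) and value (4096 -> 1024) projections.
ADAPTED = [(4096, 4096), (4096, 1024)]

total = trainable_params(D_MT, D_LLM, N_LAYERS, RANK, ADAPTED)
print(f"{total:,} trainable parameters")  # 15,204,352 trainable parameters
```

Under these assumptions about 15.2 million parameters are trainable, well under 1% of an 8-billion-parameter backbone; changing the rank or the set of adapted matrices scales the LoRA term linearly.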

Implications and Future Work

The integration of MT encoders into LLMs through self-distillation holds considerable promise for improving multilingual NLU. By giving LLMs access to the rich multilingual representations of MT encoders, the approach mitigates the limitations posed by typological distance from English and by the poor representation of low-resource languages in LLM training data.

Future research could explore token-level alignment objectives to further improve the alignment and generalization of MT-LLMs. Extending the approach to even more languages through post-hoc adaptation of both the LLM and the MT encoder may also yield further gains in cross-lingual NLU performance.

Conclusion

This paper introduces a novel and effective method to enhance the cross-lingual NLU capabilities of LLMs by integrating MT encoders through self-distillation. The resulting MT-LLMs demonstrate superior cross-lingual performance, validating the efficacy of the proposed approach and paving the way for more inclusive and efficient multilingual LLMs.