In-Context Retrieval-Augmented LLMs

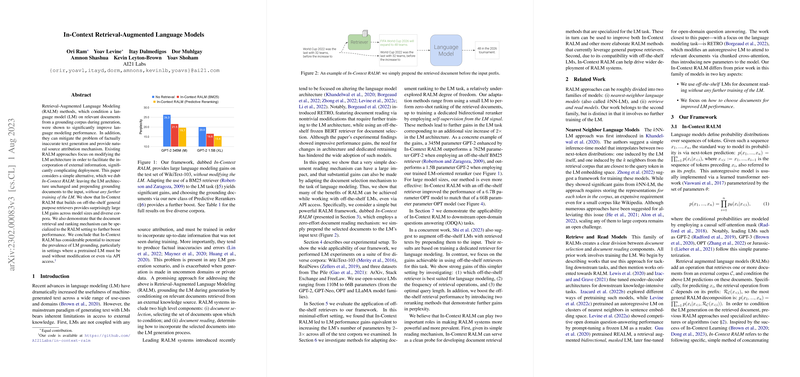

The paper "In-Context Retrieval-Augmented LLMs" presents a novel approach to enhancing LLM (LM) performance through a technique that requires minimal alteration to existing models. The authors investigate an alternative method, termed In-Context RALM, which allows pre-trained LMs to benefit from external information without necessitating changes to their architecture or additional training. This approach involves simply appending retrieved documents to the input sequence of the LM.

Traditionally, Retrieval-Augmented LLMing (RALM) necessitates architectural changes to incorporate external data, often complicating deployment and reducing flexibility. Such transformations have typically required dense retrievers and additional training phases, which are both time-consuming and computationally costly. In contrast, In-Context RALM leverages off-the-shelf general-purpose retrievers, particularly favoring BM25, a sparse retrieval method. This strategy yields significant improvements in model performance, analogous to increasing model sizes by 2-3 times, without any further parameter adjustment or fine-tuning.

The paper measures the success of the In-Context RALM across several datasets: WikiText-103, RealNews, and subsets of The Pile such as ArXiv, Stack Exchange, and FreeLaw. These datasets provide diverse testing grounds for validating the proposed method. The demonstrated gains suggest that when grounding documents are wisely chosen, even a substantial LM can achieve performance levels akin to much larger counterparts. For example, using an off-the-shelf retriever improved the performance of a 6.7B parameter model to match that of a 66B parameter model for the OPT model family.

The authors further explore the customization of document retrieval mechanisms. Beyond using BM25's lexical retrieval out-of-the-box, they delve into reranking strategies to better align selected documents with LM tasks. A zero-shot ranking technique using smaller LMs was employed before training a dedicated bidirectional reranker using self-supervision derived from the LM's own signals. This approach demonstrates additional perceptible improvements, proving effective without additional changes to the existing LMs.

This paper's contributions are practical, offering methodological insights for enhancing LM outputs' factual accuracy and reliability, a salient concern for applications where LMs generate information-sensitive documents. The strategy facilitates retrieval augmentation in scenarios where models are accessible only through an API, broadening the applicability to diverse use cases.

In the context of open-domain question answering, the authors confirm that the advantages of In-Context RALM extend beyond pure LLMing tasks. By using retrieval-augmented LMs without specialized training for the ODQA setting, they show that it is feasible to significantly boost zero-shot performance for varied model sizes.

The paper's findings hold implications for future RALM developments, hinting that significant LM improvements can be achieved through careful retrieval design rather than more extensive LM architecture tailoring or retraining. This line of research suggests promising directions for future AI systems, where increasing model size is not the only path to better performance—but rather, informed interaction with curated, external information sources might yield equal, if not superior, results in efficiency and accuracy. As the availability and complexity of information grow, methods for seamlessly integrating this information into the decision-making processes of LMs will become increasingly vital. The simplicity, coupled with the efficacy of In-Context RALM, is a step toward more accessible and deployable AI systems that maintain competitive performance.