Improving Retrieval-Augmented LLMs with Compression: A Summary

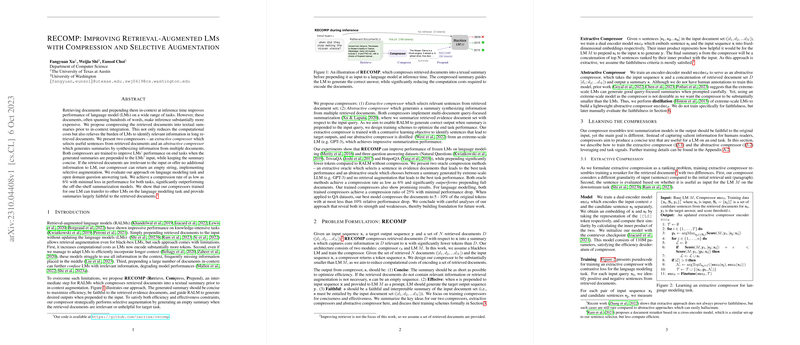

The paper "RECOMP: Improving Retrieval-Augmented LMs with Compression and Selective Augmentation" addresses a key challenge in the domain of retrieval-augmented LLMs (RALMs): the computational inefficiencies of handling long retrieved documents in the context of LLMing and open-domain question answering tasks. The authors propose a strategy to compress these retrieved documents into concise textual summaries, which can be integrated into the LLMs, thereby reducing computational burdens while maintaining or even enhancing task performance.

Compression Methodologies

The paper introduces two primary compression mechanisms:

- Extractive Compression: This method involves selecting the most relevant sentences from the retrieved documents, offering a sentence-level extraction that aims to be both concise and effective. The extractive compressor uses a dual encoder model optimized through contrastive learning to rank sentences that improve the target LLM’s (LM) performance.

- Abstractive Compression: Here, the compression is more synthesis-oriented, generating summaries that blend information from multiple documents. This method employs an encoder-decoder model, distilled from extreme-scale LLMs like GPT-3, focused on outputting summaries that not only capture relevant data but also simplify computational needs.

In both cases, the compressors perform selective augmentation, where they may return an empty string if the retrieved information is deemed irrelevant or unhelpful, effectively negating unnecessary additions to the input context.

Evaluation and Results

The approach was tested across multiple data sets, including WikiText-103 for LLMing and diverse question-answering datasets like Natural Questions (NQ), TriviaQA, and HotpotQA. Notably, the compression method achieved compression rates as low as 6% without significant performance loss. This is particularly compelling as it outperformed existing off-the-shelf summarization models in both efficiency and task accuracy.

The compressors were also tested for their ability to generalize across different languages models. Initially trained for one LM (e.g., GPT2), these compressors were able to effectively transfer to others such as GPT2-XL and GPT-J, demonstrating the robustness of the textual summarization approach.

Implications and Future Directions

The findings in this paper underline the potential of combining retrieval-augmented methods with informed compression strategies to enhance the application of LMs in real-world scenarios. By reducing the amount of information LLMs need to process, these methods can lead to significant computational savings and expand the usability of LMs in environments with limited processing capabilities.

Future research could further refine these compressors, particularly focusing on achieving adaptive augmentation, where the number of prepended sentences is dynamically optimized on a per-task basis. Moreover, improving the faithfulness and comprehensiveness of the abstractive summaries remains an open challenge, especially for tasks requiring complex multi-hop reasoning like HotpotQA.

This research proposes a promising step in optimizing RALMs, with potential applications in more efficient document processing, scalable deployment of LMs in constrained environments, and advancing the state-of-the-art in AI-driven language processing tasks.