Essay on "Learning To Retrieve Prompts for In-Context Learning"

The paper "Learning To Retrieve Prompts for In-Context Learning" studies in-context learning in large pre-trained language models (LMs). In this paradigm, the LM receives a few training examples (the prompt) together with a test instance and generates an output without any parameter updates. The paper shows that performance depends strongly on which examples are selected as prompts, and proposes an efficient method for retrieving prompts using annotated data and an LM, which outperforms prior approaches.

Key Contributions

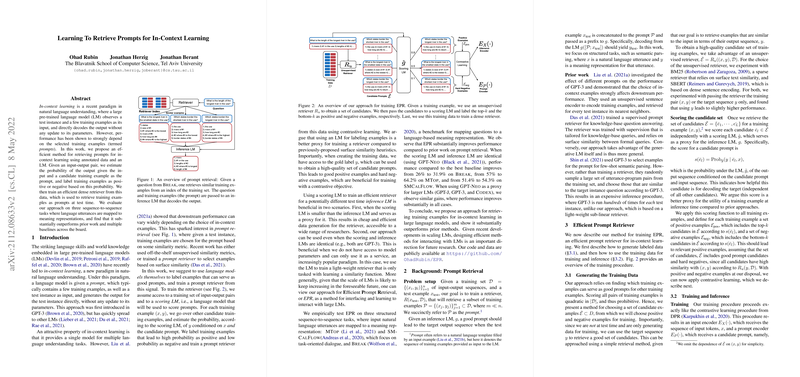

The paper presents a new method, Efficient Prompt Retrieval (EPR), which uses an LM to label which prompts are effective. This contrasts with the surface-similarity heuristics used in earlier work. The key idea is to use a scoring LM to estimate the probability of the correct output given a test input and a candidate prompt. Candidates that yield a high probability are labeled as positive examples, and those that yield a low probability are labeled as negative examples. A dense retriever is then trained on these labeled examples with contrastive learning.
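The labeling step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: `label_candidates`, the cutoff `k`, and the toy score table are hypothetical names and values, and in a real setup `score_fn` would return the scoring LM's log-probability of the target output given the candidate prompt and the input.

```python
from typing import Callable, List, Tuple


def label_candidates(
    candidates: List[str],
    score_fn: Callable[[str], float],
    k: int = 2,
) -> Tuple[List[str], List[str]]:
    """Rank candidates by score; return (top-k positives, bottom-k negatives)."""
    if len(candidates) < 2 * k:
        raise ValueError("need at least 2*k candidates so the two sets do not overlap")
    ranked = sorted(candidates, key=score_fn, reverse=True)
    return ranked[:k], ranked[-k:]


# Toy stand-in for the scoring LM: made-up log-probabilities of the target
# output when each candidate is used as the prompt (assumption, not real data).
toy_scores = {"ex_a": -0.2, "ex_b": -3.1, "ex_c": -0.9, "ex_d": -5.4, "ex_e": -1.7}

positives, negatives = label_candidates(list(toy_scores), toy_scores.__getitem__, k=2)
```

Only the relative ranking of candidates matters here, so any monotone transformation of the LM's probability would produce the same positive and negative sets.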

Methodology

- Data Generation: Labeled data is generated by first retrieving a candidate set of prompts with an unsupervised retriever such as BM25 or SBERT. Each candidate is then scored with the scoring LM to determine how helpful it is for generating the desired output.

- Training: Using positive and negative examples, a dense retriever is trained to learn a similarity metric, aimed at retrieving contextually relevant examples that facilitate effective in-context learning.

- Inference: At test time, the retriever uses maximum inner-product search to find suitable training examples to use as prompts, and the selection is limited to what fits within the inference LM's context size.
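The training and inference steps above can be illustrated with a short sketch. Several things here are assumptions made for illustration: the softmax-over-inner-products form of the contrastive loss, the brute-force search (a real system would likely use an approximate nearest-neighbor index), the prefix-style truncation to a token budget, and the names `contrastive_loss`, `retrieve`, and `budget`. The vectors are assumed to be already produced by the retriever's encoders.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(u: Vector, v: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def contrastive_loss(query: Vector, positive: Vector, negatives: List[Vector]) -> float:
    """Negative log-probability of the positive under a softmax over
    inner-product similarities to the positive and all negatives."""
    logits = [dot(query, positive)] + [dot(query, n) for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_z - logits[0]


def retrieve(
    query: Vector,
    example_vecs: List[Vector],
    example_lengths: List[int],
    budget: int,
) -> List[int]:
    """Brute-force maximum inner-product search, then keep the longest
    top-ranked prefix whose total length fits the token budget."""
    ranked = sorted(
        range(len(example_vecs)),
        key=lambda i: dot(query, example_vecs[i]),
        reverse=True,
    )
    chosen: List[int] = []
    used = 0
    for i in ranked:
        if used + example_lengths[i] > budget:
            break  # stop at the first example that no longer fits
        chosen.append(i)
        used += example_lengths[i]
    return chosen


# Toy usage with hand-written 2-d vectors (hypothetical values).
loss = contrastive_loss([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
selected = retrieve(
    [1.0, 0.0],
    [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]],
    example_lengths=[30, 50, 10],
    budget=80,
)
```

The loss decreases as the query moves closer to the positive and further from the negatives, which is what pushes the retriever toward examples the scoring LM found helpful. The `budget` would in practice also need to account for the test input and the generated output, which this sketch omits.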

Empirical Evaluation

The researchers evaluated their approach on three structured sequence-to-sequence tasks: Break, MTop, and SMCalFlow. EPR outperformed both unsupervised retrieval methods (BM25, SBERT) and supervised baselines (Case-based Reasoning). When the same LM is used for both scoring and inference, performance relative to these baselines improves from 26% to 31.9% on Break, from 57% to 64.2% on MTop, and from 51.4% to 54.3% on SMCalFlow.

Theoretical and Practical Implications

The proposed method advances prompt retrieval for LMs. It offers a scalable way to interact with increasingly large LMs: rather than updating the LM, one trains a lightweight retriever that selects effective prompt examples. Moreover, the results suggest that using the LM itself as a scoring function provides a stronger training signal than traditional surface-text similarity heuristics.

Future Directions

As LMs continue to expand in scale, further refinement and extension of EPR could enhance its ability to operate with even larger models and diverse tasks. Exploring ways to seamlessly integrate prompt selection into LM training regimes or advancing the scoring function beyond current capacities are viable avenues for subsequent research.

Conclusion

The paper makes a solid contribution to in-context learning, demonstrating that using an LM to guide prompt selection improves performance across multiple tasks. The work offers a practical approach to prompt retrieval and a starting point for future research on efficient interaction with large LMs.