Toward Reasoning in LLMs: An Expert Overview

The paper "Towards Reasoning in Large Language Models: A Survey" by Jie Huang and Kevin Chen-Chuan Chang offers a comprehensive examination of reasoning in large language models (LLMs). As LLMs such as GPT-3 and PaLM continue to advance, it becomes critical to understand whether, and to what extent, they can reason. The paper reviews methods for enhancing, evaluating, and understanding reasoning in these models, and it suggests directions for future research.

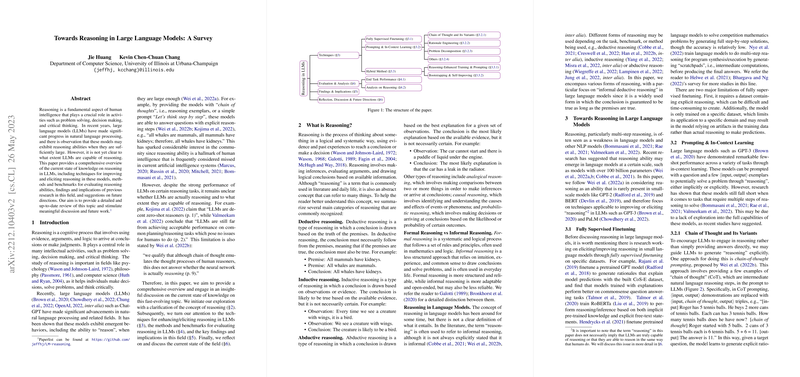

The core sections of the paper survey techniques for enhancing or eliciting reasoning in LLMs, including fully supervised fine-tuning, prompting, and in-context learning. The authors single out chain-of-thought (CoT) prompting, in which the prompt's exemplars write out intermediate reasoning steps before the final answer, as a particularly effective way to elicit explicit reasoning and a significant development for the field. At the same time, they caution that an explicit chain of thought is not proof of an underlying reasoning process: CoT may lead models to produce outputs that look like reasoning without necessarily reasoning in a genuine sense.
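To make the contrast between standard and chain-of-thought prompting concrete, here is a minimal Python sketch of few-shot prompt construction. The exemplar is adapted from the chain-of-thought literature, while the helper names (`Exemplar`, `build_prompt`, `extract_answer`) and the "The answer is X." convention are illustrative assumptions, not anything prescribed by the survey. The only difference between the two modes is whether each exemplar's answer spells out its intermediate steps.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    question: str
    rationale: str  # intermediate reasoning steps written out in natural language
    answer: str


# A single worked exemplar adapted from the chain-of-thought literature.
EXEMPLARS = [
    Exemplar(
        question=(
            "Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
            "Each can has 3 tennis balls. How many tennis balls does he have now?"
        ),
        rationale=(
            "Roger started with 5 balls. 2 cans of 3 tennis balls each is "
            "6 tennis balls. 5 + 6 = 11."
        ),
        answer="11",
    ),
]


def build_prompt(question: str, exemplars: List[Exemplar], chain_of_thought: bool = True) -> str:
    """Assemble a few-shot prompt; with chain_of_thought=False the rationales are omitted."""
    blocks = []
    for ex in exemplars:
        target = f"The answer is {ex.answer}."
        if chain_of_thought:
            target = f"{ex.rationale} {target}"  # show the steps before the answer
        blocks.append(f"Q: {ex.question}\nA: {target}")
    blocks.append(f"Q: {question}\nA:")  # the model continues from here
    return "\n\n".join(blocks)


def extract_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'The answer is', or None if the phrase is missing."""
    marker = "The answer is "
    idx = completion.rfind(marker)
    if idx == -1:
        return None
    return completion[idx + len(marker):].strip().rstrip(".")
```

The resulting string can be sent to any text-completion model; no particular model API is assumed here. Parsing a fixed answer phrase keeps scoring simple, and a completion that ignores the convention simply yields `None`.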

The authors also review the benchmarks used to evaluate reasoning, covering arithmetic, commonsense, and symbolic reasoning tasks. Despite recent progress, there are strong indications that LLMs still struggle with complex reasoning tasks. Moreover, current benchmarks may not fully capture the nuanced reasoning expected in real-world applications. The paper also organizes the high-level reasoning techniques into a taxonomy and weighs the potential and limitations of each.
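Symbolic reasoning benchmarks are easy to generate programmatically, which makes them a convenient illustration of how end-task evaluation typically works. The sketch below builds items for last-letter concatenation, a symbolic task used in chain-of-thought evaluations, and scores predictions by exact match. The question wording, the function names, and the `predict` callable standing in for a model are hypothetical choices for illustration.

```python
import random
from typing import Callable, List, Tuple


def last_letter_item(words: List[str]) -> Tuple[str, str]:
    """Build one last-letter-concatenation question together with its gold answer."""
    phrase = " ".join(words)
    question = f'Take the last letters of the words in "{phrase}" and concatenate them.'
    gold = "".join(word[-1] for word in words)
    return question, gold


def make_dataset(vocab: List[str], n_items: int, n_words: int, seed: int = 0) -> List[Tuple[str, str]]:
    """Sample n_items questions, each built from n_words distinct words drawn from vocab."""
    rng = random.Random(seed)  # fixed seed keeps the benchmark reproducible
    return [last_letter_item(rng.sample(vocab, n_words)) for _ in range(n_items)]


def exact_match_accuracy(dataset: List[Tuple[str, str]], predict: Callable[[str], str]) -> float:
    """Fraction of items whose prediction equals the gold answer, ignoring case and surrounding whitespace."""
    if not dataset:
        return 0.0
    correct = sum(predict(q).strip().lower() == gold.lower() for q, gold in dataset)
    return correct / len(dataset)


if __name__ == "__main__":
    data = make_dataset(["apple", "river", "cloud", "stone", "piano"], n_items=3, n_words=2)
    for question, gold in data:
        print(question, "->", gold)
```

Raising `n_words` gives a simple way to check whether accuracy holds up as the same task gets longer. Exact match on the final answer, however, says nothing about whether the intermediate reasoning was sound.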

One salient point of the paper is its observation that reasoning appears to be an emergent ability: it surfaces significantly only in models above a certain scale, roughly 100 billion parameters. This suggests that, rather than focusing narrowly on task-specific training of smaller models, it may be more effective to apply large, general-purpose models to a wide range of reasoning tasks.

The survey also reviews analyses of reasoning that use purpose-built experiments and datasets to probe the limits of LLM reasoning. Their findings suggest that LLM reasoning is often driven by heuristics rather than robust logical deduction. This mirrors certain aspects of human reasoning, such as being swayed by the content of a problem rather than its logical form, but it also leads to potential pitfalls.
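One way such analyses separate logical form from content is to hold the form of a syllogism fixed while varying whether its conclusion is believable. The sketch below is a hypothetical probe in that spirit, not a dataset from the survey: validity is determined by the template alone, and accuracy is split by whether believability agrees with validity (congruent) or conflicts with it (incongruent). The `judge` callable is an assumed stand-in for a model's valid/invalid verdict.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Probe:
    text: str
    valid: bool       # fixed by the logical form alone
    believable: bool  # whether the conclusion agrees with world knowledge


# Both forms share the first premise and the conclusion; only the middle premise differs.
FORMS = {
    True: "All {a} are {b}. All {b} are {c}. Therefore, all {a} are {c}.",   # valid form
    False: "All {a} are {b}. All {c} are {b}. Therefore, all {a} are {c}.",  # invalid: undistributed middle
}


def make_probes(believable_terms: Tuple[str, str, str],
                unbelievable_terms: Tuple[str, str, str]) -> List[Probe]:
    """Cross each logical form with believable and unbelievable content."""
    probes = []
    for valid, template in FORMS.items():
        for (a, b, c), believable in ((believable_terms, True), (unbelievable_terms, False)):
            probes.append(Probe(template.format(a=a, b=b, c=c), valid, believable))
    return probes


def accuracy_by_congruence(probes: List[Probe], judge: Callable[[str], bool]) -> Dict[str, float]:
    """Accuracy when believability agrees with validity (congruent) vs. conflicts with it (incongruent)."""
    groups: Dict[str, List[bool]] = {"congruent": [], "incongruent": []}
    for p in probes:
        key = "congruent" if p.valid == p.believable else "incongruent"
        groups[key].append(judge(p.text) == p.valid)
    return {k: sum(v) / len(v) for k, v in groups.items() if v}


if __name__ == "__main__":
    probes = make_probes(("robins", "birds", "animals"), ("animals", "birds", "robins"))

    def belief_judge(text: str) -> bool:
        # Ignores logical form and accepts any conclusion that matches world knowledge.
        return text.endswith("all robins are animals.")

    print(accuracy_by_congruence(probes, belief_judge))  # {'congruent': 1.0, 'incongruent': 0.0}
```

A judge that relies on believability alone scores perfectly on congruent items and fails every incongruent one, so a large gap between the two groups suggests that content, rather than form, is driving the verdicts.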

While the survey offers numerous insights, it makes clear that progress will require improved benchmarks and models. The authors argue that a critical open challenge is understanding how LLMs actually use reasoning steps, as opposed to heuristic shortcuts. Meeting this challenge is necessary to realize the full potential of LLMs in applications that require complex decision-making and logical inference.
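Final-answer accuracy cannot tell a sound chain of thought from a lucky one. As a minimal illustration of step-level checking, assuming a rationale that writes its arithmetic inline as `a op b = c`, the sketch below recomputes each such step and flags mismatches. The regular expression and the `check_steps` helper are hypothetical and only cover simple binary operations on plain decimal numbers.

```python
import re
from operator import add, mul, sub, truediv
from typing import List, Tuple

# Matches inline binary arithmetic such as "5 + 6 = 11" (plain decimal numbers only).
STEP = re.compile(
    r"(-?\d+(?:\.\d+)?)\s*([+\-*/×])\s*(-?\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)"
)
OPS = {"+": add, "-": sub, "*": mul, "×": mul, "/": truediv}


def check_steps(rationale: str, tol: float = 1e-9) -> List[Tuple[str, bool]]:
    """Return each arithmetic step found in the rationale with whether its stated result is correct."""
    results = []
    for match in STEP.finditer(rationale):
        left, op, right, claimed = match.groups()
        if op == "/" and float(right) == 0:
            ok = False  # division by zero can never be a correct step
        else:
            ok = abs(OPS[op](float(left), float(right)) - float(claimed)) <= tol
        results.append((match.group(0), ok))
    return results


if __name__ == "__main__":
    rationale = "Each box has 3 * 4 = 12 pens. Adding 5 gives 12 + 5 = 18. The answer is 17."
    print(check_steps(rationale))  # [('3 * 4 = 12', True), ('12 + 5 = 18', False)]
```

In the example, the final answer 17 is correct even though the intermediate step `12 + 5 = 18` is wrong, which is exactly the kind of discrepancy a final-answer metric would miss.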

In conclusion, this survey provides a timely and comprehensive overview of reasoning in LLMs, presenting state-of-the-art techniques alongside their implications and limitations. While LLMs show promise on tasks that require reasoning, the paper emphasizes the need for continued research into enhancing these capabilities and for evaluation methods that better reflect nuanced reasoning. Looking ahead, stronger reasoning abilities could extend LLMs to domains that demand sophisticated problem-solving, decision-making, and critical thinking.