Efficient Long Sequence Modeling via State Space Augmented Transformer

Introduction

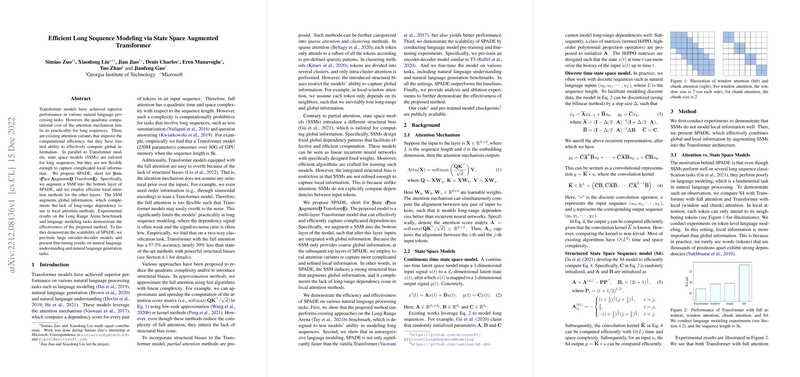

The paper presents SPADE (State sPace AugmenteD TransformEr), a novel approach to modeling long sequences efficiently. Transformer models revolutionized natural language processing, but their quadratic complexity in sequence length makes them computationally prohibitive for long sequences. By combining State Space Models (SSMs) with efficient local attention mechanisms, SPADE aims to overcome this limitation and to balance global and local information processing.

Background

Transformers rely on full attention to calculate dependencies between all token pairs, which leads to O(L^2) complexity in the sequence length L. Although effective on short sequences, this becomes a problem for longer sequences because of the rising computational cost and the potential for overfitting. SSMs, which are tailored to long-sequence processing, lack the flexibility to capture intricate local dependencies. This paper proposes a hybrid approach that draws on the strengths of both models to manage the trade-off between computational efficiency and modeling capability on long-sequence tasks.
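To make the cost gap concrete, the following minimal sketch counts the query-key score computations needed by full attention and by a windowed local attention. The function names and the symmetric `window` parameter are assumptions chosen for illustration; they are not taken from the paper.

```python
def full_attention_pairs(seq_len: int) -> int:
    """Score computations when every query attends to every key."""
    return seq_len * seq_len


def local_attention_pairs(seq_len: int, window: int) -> int:
    """Score computations when each query attends only to keys that lie
    within `window` positions on either side (an illustrative assumption)."""
    total = 0
    for i in range(seq_len):
        lo = max(0, i - window)            # first visible key
        hi = min(seq_len - 1, i + window)  # last visible key
        total += hi - lo + 1
    return total


if __name__ == "__main__":
    for n in (1024, 4096, 16384):
        print(n, full_attention_pairs(n), local_attention_pairs(n, window=64))
```

With a fixed window, the local count is bounded by `seq_len * (2 * window + 1)`, so it grows linearly in the sequence length, while the full count grows quadratically.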

Methodology

The SPADE architecture integrates an SSM at its bottom layer for coarse global information processing, followed by layers equipped with local attention mechanisms to refine this information and capture detailed local dependencies. This design leverages the efficiency of SSMs in handling global dependencies and the effectiveness of local attention mechanisms for detailed context capture without incurring the quadratic computational penalty.
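The layering described above can be illustrated with a toy, one-dimensional sketch. It is not the authors' implementation: a scalar linear recurrence stands in for the SSM, the attention scores are plain products of scalar features with no learned projections, and every name and default value (`ssm_scan`, `local_attention`, `toy_stack`, `a`, `b`, `c`, `window`) is hypothetical.

```python
import math
from typing import List


def ssm_scan(u: List[float], a: float = 0.9, b: float = 1.0, c: float = 1.0) -> List[float]:
    """Scalar linear state space recurrence (a stand-in for a real SSM layer):
    x_k = a * x_{k-1} + b * u_k,  y_k = c * x_k.
    Each output depends on the whole prefix, at O(L) total cost."""
    x = 0.0
    y = []
    for u_k in u:
        x = a * x + b * u_k
        y.append(c * x)
    return y


def local_attention(h: List[float], window: int = 2) -> List[float]:
    """Softmax attention in which position i sees only positions within
    `window` of i. Scores are h[i] * h[j]; no learned weights (toy assumption)."""
    n = len(h)
    out = []
    for i in range(n):
        idx = range(max(0, i - window), min(n, i + window + 1))
        scores = [h[i] * h[j] for j in idx]
        m = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append(sum(w * h[j] for w, j in zip(weights, idx)) / z)
    return out


def toy_stack(u: List[float], num_local_layers: int = 2, window: int = 2) -> List[float]:
    """Bottom layer: global SSM pass. Upper layers: local attention only."""
    h = ssm_scan(u)
    for _ in range(num_local_layers):
        h = local_attention(h, window)
    return h


if __name__ == "__main__":
    print(toy_stack([1.0, 0.0, 0.0, 0.0, 2.0, 0.0, 0.0, 0.0]))
```

In this sketch the recurrence carries information from the first input to every later position, so the upper layers can stay local and still operate on features that already summarize distant context.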

Efficiency and Scalability

Empirical results demonstrate SPADE's efficiency and scalability, with the model significantly outperforming baseline models on various tasks. For instance, SPADE outperforms all baselines on the Long Range Arena benchmark, which indicates a superior modeling capability for long sequences. Moreover, SPADE achieves impressive results in autoregressive language modeling, showing that it applies to both understanding and generation tasks without requiring the SSM layer to be retrained.

Implications and Future Developments

The introduction of SPADE marks a significant step towards efficient long-range dependency modeling, highlighting the potential of hybrid models in tackling the challenges associated with long sequence processing. It opens avenues for future research in exploring other combinations of global and local mechanisms, further optimization of the architecture, and the expansion of its applications beyond natural language processing.

Conclusions

SPADE represents a novel and efficient approach to long sequence modeling, addressing the limitations of existing Transformer models and SSMs. By combining global and local information processing strategies, it achieves superior performance across multiple benchmarks while maintaining computational efficiency. This research advances the handling of long sequences and sets the stage for future work on efficient, scalable language models.