Overview of "Hungry Hungry Hippos: Towards Language Modeling with State Space Models"

This paper explores the application of State Space Models (SSMs) to language modeling, addressing two obstacles that have kept SSMs behind attention-based models: a gap in expressivity and inefficient use of hardware. The authors present two key contributions: a novel SSM-based layer called H3, and FlashConv, a hardware-efficient algorithm that improves the computational performance of SSMs.

H3: Bridging the Gap Between SSMs and Attention

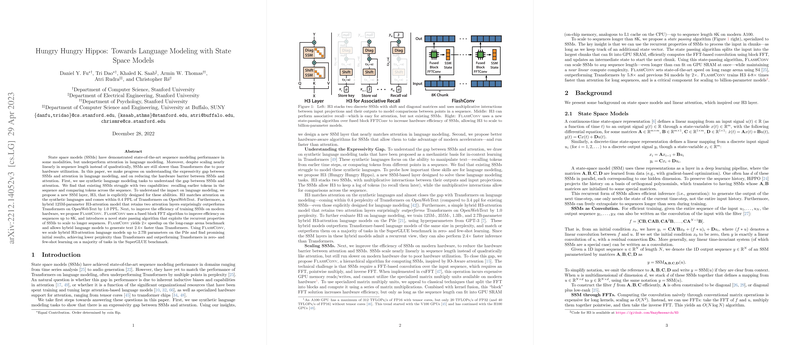

The paper identifies specific deficiencies of SSMs relative to Transformers on language modeling tasks. The authors note that SSMs struggle to recall earlier tokens and to compare tokens across a sequence, two abilities that are critical for language modeling. To address this, they introduce the H3 layer, which combines two discrete SSMs with multiplicative interactions to emulate these capabilities of attention.
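The structure described above, two SSMs joined by multiplicative interactions, can be illustrated with a small NumPy sketch. This is a simplified reading and not the authors' implementation: it assumes the first SSM is a shift SSM (a short FIR filter), the second is a diagonal SSM, and the interactions are elementwise products with query/key/value style projections. All names (`h3_layer`, `causal_conv`, `diag_ssm_kernel`, the weight matrices) are hypothetical.

```python
import numpy as np

def causal_conv(u, k):
    """Per-channel causal convolution: y[t, c] = sum_{s<=t} k[s, c] * u[t-s, c]."""
    L, d = u.shape
    cols = [np.convolve(u[:, c], k[:, c])[:L] for c in range(d)]
    return np.stack(cols, axis=1)

def diag_ssm_kernel(a, b, c, L):
    """Kernel of a diagonal SSM: k[i] = sum_n c[n] * a[n]**i * b[n], per channel."""
    powers = a[None, :, :] ** np.arange(L)[:, None, None]  # shape (L, n, d)
    return np.einsum("lnd,nd->ld", powers, b * c)          # shape (L, d)

def h3_layer(x, Wq, Wk, Wv, Wo, k_shift, k_diag):
    """Assumed form: Q * SSM_diag(SSM_shift(K) * V), followed by an output projection."""
    q, k, v = x @ Wq, x @ Wk, x @ Wv
    kv = causal_conv(k, k_shift) * v    # first SSM, then multiply by V
    out = q * causal_conv(kv, k_diag)   # second SSM, then multiply by Q
    return out @ Wo

# Toy sizes (illustrative only): sequence length, width, state sizes.
rng = np.random.default_rng(0)
L, d, n, m = 16, 8, 4, 4
x = rng.standard_normal((L, d))
Wq, Wk, Wv, Wo = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))
# A shift SSM remembers the last m inputs, so its kernel is just m output taps.
k_shift = rng.standard_normal((m, d))
a = rng.uniform(0.5, 0.99, size=(n, d))  # stable diagonal state matrix
k_diag = diag_ssm_kernel(a, rng.standard_normal((n, d)), rng.standard_normal((n, d)), L)
y = h3_layer(x, Wq, Wk, Wv, Wo, k_shift, k_diag)
```

Under these assumptions, the shift SSM gives each position access to a few recent tokens, the diagonal SSM accumulates information over the whole prefix, and the two products let the layer gate what is stored and what is read back. The layer stays causal: changing a later token leaves earlier outputs unchanged.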

The authors demonstrate that H3 matches attention on synthetic language tasks designed to probe in-context learning, and that it comes within 0.4 perplexity (PPL) of Transformers on OpenWebText. Notably, a hybrid model combining H3 and attention layers outperforms Transformers by 1.0 PPL on OpenWebText, a step forward for the applicability of SSMs to language modeling.

FlashConv: Addressing Computational Inefficiency

While SSMs in theory scale nearly linearly with sequence length, in practice they have been slower than Transformers because they use hardware inefficiently. The authors propose FlashConv, an FFT-based convolution algorithm that uses block FFTs to make better use of modern GPU architectures.
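To make the underlying operation concrete, the sketch below computes a causal convolution with a plain FFT and checks it against direct convolution. This is the standard O(L log L) FFT convolution that an algorithm like FlashConv accelerates, not FlashConv itself; the block FFT and GPU-specific optimizations are not shown, and the function names are illustrative.

```python
import numpy as np

def fft_causal_conv(u, k):
    """Causal convolution of two length-L signals via FFT in O(L log L)."""
    L = u.shape[0]
    n = 2 * L  # zero-pad so circular convolution does not wrap around
    U = np.fft.rfft(u, n=n)
    K = np.fft.rfft(k, n=n)
    return np.fft.irfft(U * K, n=n)[:L]

def direct_causal_conv(u, k):
    """Reference O(L^2) implementation: y[t] = sum_{s<=t} k[s] * u[t-s]."""
    L = u.shape[0]
    return np.convolve(u, k)[:L]

rng = np.random.default_rng(0)
u = rng.standard_normal(1024)  # input sequence
k = rng.standard_normal(1024)  # SSM convolution kernel (random here)
y_fft = fft_causal_conv(u, k)
y_direct = direct_causal_conv(u, k)
max_err = np.max(np.abs(y_fft - y_direct))  # difference is floating-point noise
```

Padding to length 2L matters: without it, the FFT computes a circular convolution and late inputs would leak into early outputs.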

FlashConv is shown to deliver substantial speedups: it doubles performance over previous implementations on long sequences, and it lets hybrid models generate text 2.4x faster than Transformers. This efficiency is critical for training large-scale models, as evidenced by the successful scaling of hybrid H3-attention models to 2.7 billion parameters without compromising the speed benefits.

Implications and Future Directions

This research makes significant progress in adapting SSMs to language modeling by addressing both their expressivity gap and their computational inefficiency. The expressivity added by the H3 layer and the efficiency achieved with FlashConv suggest that SSMs could be pivotal in future NLP applications, especially where long-sequence processing and reduced computational cost are essential.

Looking forward, the work opens up opportunities to further refine SSM architectures, potentially leading to models that combine the best traits of SSMs and attention mechanisms for broader and more robust language understanding. Moreover, given the encouraging scaling results, future research might train even larger SSM-based models and examine their capabilities on AI tasks beyond language.

In conclusion, the paper presents a substantial advance in language modeling, with promising prospects for future research on both the theoretical and applied sides of AI and language models.