A Comprehensive Overview of Long-Short Transformer: Efficient Transformers for Language and Vision

Introduction

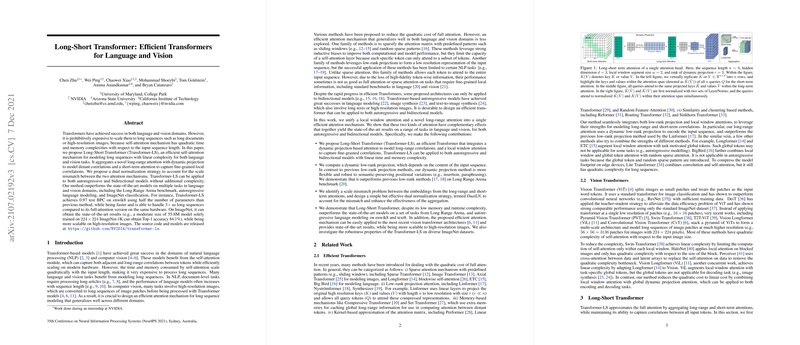

The paper presents the Long-Short Transformer (Transformer-LS), an innovative architectural approach crafted to address inherent inefficiencies in self-attention mechanisms when applied to long sequences in both language and vision tasks. Transformer-based models, while markedly successful across domains of natural language processing and computer vision, encounter prohibitive computational complexities when scaling to lengthy input sequences. This paper introduces a model that substantially reduces such barriers by integrating long-range and short-term attention mechanisms with linear complexity, thereby optimizing performance across various tasks.

Methodology

The authors propose the Transformer-LS, featuring a sophisticated integration of two types of attention processes:

- Long-Range Attention via Dynamic Projection: This aspect of the Transformer-LS employs a dynamic low-rank projection to model distant correlations between tokens efficiently. The crucial innovation here is the replacement of fixed low-rank projections, as employed in methods like Linformer, with projections that dynamically adapt to the content of input sequences. This adaptation yields robustness against semantic-preserving positional variations, enhancing efficacy especially for inputs with high sequence length variance.

- Short-Term Attention via Sliding Window: The sliding window attention mechanism enables the capture of fine-grained local correlations. By implementing a segment-wise approach, this method ensures effective scaling and computational efficiency.

Moreover, a dual normalization strategy (DualLN) is introduced to mitigate scale mismatch between embeddings from the long and short-term attention mechanisms, thus improving the aggregation of these two complementary processes.

Results

- Numerical Evaluation: Transformer-LS demonstrates improved performance over state-of-the-art models across multiple benchmarks. For example, in autoregressive LLMing on enwik8, it achieves a test BPC of 0.97 using half the parameters of previous methods, while being faster and handling sequences three times longer on equivalent hardware.

- Bidirectional and Autoregressive Models: The model shows competitive performance on both types of models, handling tasks like Long Range Arena benchmarks, autoregressive LLMing, and ImageNet classification with enhanced efficacy.

- Robustness and Scalability: The transformer displays impressive robustness against perturbations and positional changes. Additionally, it shows scalability to high-resolution images, achieving a top-1 accuracy of 84.1% on ImageNet-1K.

Implications

The theoretical implications of this work are significant, hinting at a profound evolution in the design of transformer architectures. The dynamic projection method not only provides computational savings but also enhances representation robustness, a critical need for tasks involving fluctuating input lengths. In practical terms, Transformer-LS sets a new standard in handling complex, long-sequence tasks with reduced resource demands. This efficiency is crucial for real-world applications where hardware limitations persist.

Future Developments

Looking forward, the Transformer-LS model promises to impact further research in transformers by paving the way for the development of more adaptable and efficient architectures. Potential future directions include exploring its application in other domains requiring robust long-sequence modeling, such as video processing and long-form dialogue systems. Additionally, the integration of this model into more advanced, domain-specific architectures could push performance further, particularly in areas like semantic segmentation and high-resolution object detection.

In conclusion, Transformer-LS marks a significant step forward in transformer efficiency and adaptability, offering a blueprint for future architectural innovations in both NLP and computer vision domains.